First of two JDM special issues on the Recognition Heuristic

Subscribe to Decision Science News by Email (one email per week, easy unsubscribe)

SPECIAL ISSUE: RECOGNITION PROCESSES IN INFERENTIAL DECISION MAKING

The journal Judgment and Decision Making today published a special issue on “Recognition processes in inferential decision making” edited by Julian N. Marewski, Rüdiger F. Pohl and Oliver Vitouch. The special issue turns out to be the first of two special issues, something the editors had not anticipated:

What was originally planned as one issue consisting of about 6 contributions turned into two volumes with about 20 submitted articles, some of which are still under review. All submissions were and are subject to Judgment and Decision Making’s peer review process, under the direction of the journal’s editor, Jonathan Baron, and us.

Here is how the editors describe the contents of the two special issues:

Let us briefly provide an overview of the contents of the two issues. The first issue presents 8 articles with a range of new mathematical analyses and theoretical developments on questions such as when the recognition heuristic will help people to make accurate inferences; as well as experimental and methodological work that tackles descriptive questions; for example, whether the recognition heuristic is a good model of consumer choice.

The forthcoming second issue strives to give an overview of the past, current, and likely future debates on the recognition heuristic, featuring comments on the debates by some of those authors who have been heavily involved, early experiments on the recognition heuristic that were run decades ago, but thus far never published, as well as new experimental tests of the recognition heuristic and alternative approaches. Finally, in the second issue, we will also provide a discussion of all papers in the two issues, and speculate about what we should possibly learn from these papers.

In allocating accepted articles to the two issues, we strove to strike a balance between the order of submission, the order of acceptance, and the topical fit of the papers. We apologize to those authors who feel disfavored by our attempts to establish such a balance; either because they preferred to see their contributions appear in the first, or alternatively, in the second issue.

Also surprising to Decision Science News was that, although the topic was recognition processes in inference, all of the articles address one particular rule of thumb: Goldstein & Gigerenzer’s recognition heuristic.

Goldstein, D. G., & Gigerenzer, G. (2002). Models of ecological rationality: The recognition heuristic. Psychological Review, 109, 75-90.
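For readers new to the rule: in the paper above, the recognition heuristic is stated roughly as "if one of two objects is recognized and the other is not, infer that the recognized object has the higher value on the criterion" (for example, which of two German cities has more inhabitants). Below is a minimal Python sketch of that decision rule, assuming a person's recognition can be represented as a plain set of names. The function name and the example cities are hypothetical illustrations, not code or materials from the paper.

```python
from typing import Optional, Set


def recognition_heuristic(a: str, b: str, recognized: Set[str]) -> Optional[str]:
    """Sketch of the recognition heuristic for a two-alternative inference.

    Returns the object inferred to have the higher criterion value, or None
    when the heuristic does not apply. `recognized` is an assumed stand-in
    for what a person recognizes.
    """
    a_known = a in recognized
    b_known = b in recognized
    # Exactly one object recognized: infer that the recognized one is higher.
    if a_known and not b_known:
        return a
    if b_known and not a_known:
        return b
    # Both or neither recognized: the heuristic is silent, so some other
    # strategy (further knowledge or guessing) would have to decide.
    return None


if __name__ == "__main__":
    known = {"Munich", "Berlin"}
    print(recognition_heuristic("Munich", "Herne", known))   # Munich
    print(recognition_heuristic("Munich", "Berlin", known))  # None
```

Returning None rather than guessing keeps the sketch limited to the heuristic itself; how people decide when both or neither object is recognized is a separate question, and several of the articles listed below probe exactly when and how recognition is used.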

In other recognition heuristic (RH) news, editor Marewski and colleagues have a 2010 paper on the heuristic, and editor Pohl also has a 2010 paper on the recognition heuristic.

CONTENTS OF THE FIRST SPECIAL ISSUE

Recognition-based judgments and decisions: Introduction to the special issue (Vol. 1), pp. 207-215. Julian N. Marewski, Rüdiger F. Pohl and Oliver Vitouch

Why recognition is rational: Optimality results on single-variable decision rules, pp. 216-229. Clintin P. Davis-Stober, Jason Dana and David V. Budescu

When less is more in the recognition heuristic, pp. 230-243. Michael Smithson

The less-is-more effect: Predictions and tests, pp. 244-257. Konstantinos V. Katsikopoulos

Less-is-more effects without the recognition heuristic, pp. 258-271. C. Philip Beaman, Philip T. Smith, Caren A. Frosch and Rachel McCloy

Precise models deserve precise measures: A methodological dissection, pp. 272-284. Benjamin E. Hilbig

Physiological arousal in processing recognition information: Ignoring or integrating cognitive cues?, pp. 285-299. Guy Hochman, Shahar Ayal and Andreas Glöckner

Think or blink — is the recognition heuristic an intuitive strategy?, pp. 300-309. Benjamin E. Hilbig, Sabine G. Scholl and Rüdiger F. Pohl

I like what I know: Is recognition a non-compensatory determiner of consumer choice?, pp. 310-325. Onvara Oeusoonthornwattana and David R. Shanks

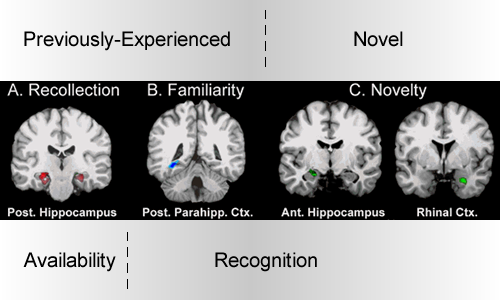

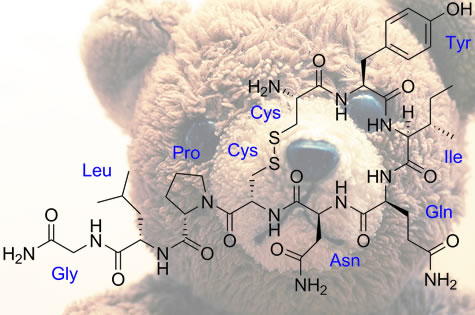

Photo adapted from Daselaar, S. M., Fleck, M. S., & Cabeza, R. (2006). Triple dissociation in the medial temporal lobes: Recollection, familiarity, and novelty. Journal of Neurophysiology, 96, 1902-1911.