Do helmets make sports more violent?

MORAL HAZARDS AND WOMEN’S LACROSSE

This week Decision Science News and Peter McGraw’s blog team up for a joint post. — Enjoy

The New York Times just ran a piece called “A Case Against Helmets in Lacrosse”.

The hook of the article is that wearing helmets, which one would expect to make the game safer, could make the game more dangerous. Let’s review the quotes.

One:

“It’s hard to absolutely prove, but what we’ve seen is that behavior can change when athletes feel more protected, especially when it comes to the head and helmets,” said Dr. Margot Putukian, Princeton’s director of athletic medicine services and chairwoman of the U.S. Lacrosse safety committee. “They tend to put their bodies and heads in danger that they wouldn’t without the protection. And they aren’t as protected as they might think.”

Two:

Then again, other sports have spent the last several years realizing that safety equipment can bring dangers of its own. Checking in professional hockey became considerably more vicious with the adoption of helmets in the 1970s and ’80s, and football players felt so protected by their helmets and face masks that head-to-head collisions became commonplace at every age level.

Three (re: protective eyewear in women’s lacrosse):

[Someone] said that after the move to make eyewear mandatory for the 2005 season, “It’s subconscious, but you see harder checking, and rougher play.”

Interesting topic! Let’s get Dan and Peter’s take on it:

DAN’S TAKE

This is an example of moral hazard, which the Wikipedia (at least during the last five minutes) defines as a situation in which “a party insulated from risk behaves differently than it would behave if it were fully exposed to the risk.” The top 10 Google Scholar papers with “moral hazard” in the title have over 10,000 collective citations. You hear a lot about moral hazards (for example, that people began driving more recklessly when seat belts were invented) at cocktail parties, coffee breaks, dinners with visiting speakers, and other moments in which people say what they really think.

Is it possible that requiring lacrosse players to wear helmets will increase risk to players? I doubt it. My grounds for skepticism? TCTBT: too cute to be true.

TCTBT (also “too clever to be true”) arguments survive not because they are correct, or supported by the best evidence, but because they are elegant, counter-intuitive, and make a person sound smart at a cocktail party. They fly well in Op-Ed pieces, keynote speeches, and other places where one is unlikely to be asked for evidence.

The smart thing to do when you hear a TCTBT explanation is to doubt it. Since repeating a clever explanation is clearly its own reward, how is one to say whether the person offering it is well-informed or just trying to be conversationally brilliant?

While I feel that moral hazard is overhyped, I must admit that I’ve gone spelunking with a helmet and without a helmet, and yes, a person does let his head bump against the cave walls more often with the helmet on than off. However, the net impact to your head is less with the helmet on. This is what I would expect to happen in lacrosse. More helmets will bump against helmets, which seems more ‘violent’, but the net noggin impact will be less. And despite popular belief, they have rules in lacrosse, so running helmet-first into people may not even confer an advantage.
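The spelunking argument boils down to simple arithmetic: expected harm is roughly how often heads get hit times how bad each hit is. Here is a minimal sketch of that bookkeeping in Python. The function names and every number in it are made up for illustration; none of them come from the article or from any injury data.

```python
def expected_harm(contacts_per_game: float, harm_per_contact: float) -> float:
    """Expected harm per game: frequency of head contact times severity of each contact."""
    return contacts_per_game * harm_per_contact


def helmet_reduces_harm(contact_increase: float, severity_reduction: float) -> bool:
    """True if helmets lower net harm.

    contact_increase: factor by which head contacts rise with helmets
        (1.5 means 50% more contacts). Hypothetical input.
    severity_reduction: fraction by which a helmet cuts the harm of each
        contact (0.6 means 60% less harm per hit). Hypothetical input.
    """
    return contact_increase * (1 - severity_reduction) < 1


def break_even_increase(severity_reduction: float) -> float:
    """Contact increase at which the moral hazard exactly cancels the protection."""
    return 1 / (1 - severity_reduction)


# Made-up numbers: 2 head contacts per game at 10 "harm units" each without
# helmets, versus 3 contacts at 4 units each with helmets.
without_helmet = expected_harm(2, 10)
with_helmet = expected_harm(3, 4)
print(without_helmet, with_helmet)
```

Under these assumed numbers the helmeted game has more contact but less total harm. The moral hazard story only wins if contact rises by more than the break-even factor, which is exactly the sort of thing one would want measured rather than asserted.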

Interestingly, the article says, “checking in professional hockey became considerably more vicious with the adoption of helmets in the 1970s and ’80s”. Again, correlation is not causation. And is ‘viciousness’ measured in injuries?

I say let Moral Hazards join Prisoner’s Dilemmas, Tragedies of the Commons, and other cleverly constructed scenarios that don’t arise in proportion to the vast numbers of articles written about them. Pay more attention to boring things like default effects that exert large and demonstrable influences on hundreds of decisions in daily life.

That said, I know nothing about lacrosse except that I watched Pete play it once, so without further ado, I’ll turn the typing over to him. If you have empirical evidence of moral hazards we should be concerned about, please post in the comments.

PETE’S TAKE:

As someone with more than 30 years of (combined) lacrosse coaching and playing experience, my intuition leads me to believe that introducing helmets into the women’s game will increase the behaviors that put players at risk of injury. However, given that I regularly lecture on the fallibility of intuition, I also agree with Dan. I would like to see causal evidence before drawing a line in the sand. More important than simply examining whether helmets increase risky behavior, any analysis behind a decision to require helmets would need to weigh the costs of the risky behavior against the benefits of the helmet. That is, the temporary bumps and bruises caused by more aggressive play may be worth incurring in order to reduce the risk of concussion. In that way, a thorough risk analysis would seem to be worth the price given the many thousands of women and girls who play the game.

The question of whether wearing a helmet creates a moral hazard in the game of lacrosse is complicated because the presence of the helmet would seem to influence more than just the offensive and defensive players’ behavior. The protection that a helmet provides could influence the way that the official monitors the game. Officials could more laxly (no pun intended) enforce the rules because they perceive the aggressive behavior as less risky, which in turn could further increase aggressiveness.

One last way to think about the helmet debate is to consider how much the debate is being colored by tradition (aka the status quo). In this way, a useful question would be: if a new sport like lacrosse were created today, would helmets be required given the emerging evidence about the risks of concussion?

Photo credit: http://www.flickr.com/photos/timailius/2472012607

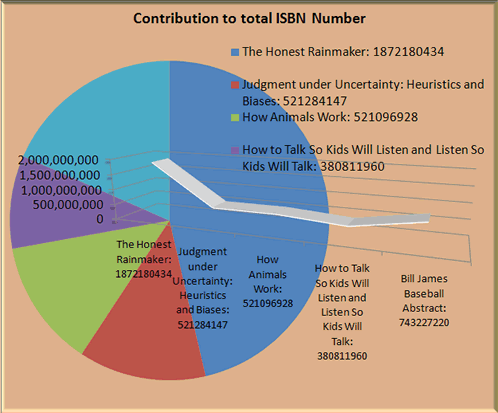
