Filed in

Jobs

Subscribe

Subscribe to Decision Science News by Email (one email per week, easy unsubscribe)

SOCIAL SCIENCE RESEARCH ANALYST (PHD LEVEL)

The Social Security Administration’s Office of Retirement Policy (http://www.ssa.gov/retirementpolicy) is looking for a Social Science Research Analyst who will conduct and review complex research on the behavioral and psychological factors that can influence retirement behavior, work effort, and well-being.

Through written papers, oral presentations, and participation in multidisciplinary workgroups, you will inform Agency executives and external policymakers as they work to improve the retirement security of our beneficiaries and the administration of our programs.

For our current vacancy announcement, open from August 1st to August 5th, visit

https://www.usajobs.gov/GetJob/ViewDetails/377087400


SENIOR MANAGER OF BEHAVIORAL INSIGHT AND INTELLIGENCE

Job advertisement at Capita

DESCRIPTION

This is a senior role in a team that designs services, for public and private sector clients, that influence the behaviour of citizens and customers. Recent areas of focus include reducing reoffending, improving health behaviours, encouraging pro-environmental behaviours, prompting channel shift, increasing customer retention, and reducing fraud, error and debt. The team is growing, and the role offers the opportunity to shape how behavioural change is embedded in services that come into contact with 16 million people a day in the UK. Behaviour change is a critical component of Capita’s vision to achieve transformational change for our clients while improving the quality of the end-user experience. Over the longer term, the Senior Manager of Behavioural Insight and Intelligence will support the Director of Behavioural Insight & Intelligence in developing a new strategic offer to internal and external clients of Capita plc: the integration of behavioural science, field testing and advanced analytics to continuously optimise outcomes. A Master's degree or equivalent is required.

Specific RESPONSIBILITIES in 3 key areas include:

* Business development: Developing Capita’s transformational partnership offer to public and private sector clients, by identifying opportunities for behavioural science to add value to client solutions, and demonstrating resulting improvements across a range of outcomes.

* Building consensus: Working with a range of internal stakeholders, including service designers and solution developers, to understand user and system requirements and develop workable, impactful, behaviourally-led solutions.

* Operations: Ensuring Capita delivers behaviourally-led solutions in new and existing contracts, and enabling businesses to demonstrate the value of doing so.

* Ensuring the Behavioural Insight and Intelligence team works in a fully integrated manner with associated teams within Group Marketing, including Service Design, Digital Innovation and Marketing Communications.

* Working to ensure that the various research and insight capabilities within Group Marketing (behavioural insight, analytics, qualitative research, quantitative survey methods) are coordinated to deliver compelling insight propositions to internal and external clients.

ESSENTIAL EXPERIENCE/SKILLS

* An expert in behavioural science, educated to at least Master's level in psychological science, social psychology, health psychology, decision science, or similar

* Experience in applied behavioural science, ambitious to be at the leading edge of applying their discipline to real world challenges

* A pro-active self-starter, keen to make services more efficient, effective and engaging for users

* Capable of engaging and influencing senior executives, colleagues and clients

* A strategic, blue-sky thinker, who is committed to getting the detail right

* Able to work in a team and individually.

* Quantitative research skills

* Commercial awareness

DESIRED EXPERIENCE/SKILLS

* As the team grows, there may be the potential to take on managerial responsibilities, so experience in a managerial capacity is desired

PERSONAL ATTRIBUTES

* Enthusiastic and charismatic, capable of engaging and influencing senior executives, colleagues and clients


ABSTRACT SUBMISSION DEADLINE AUGUST 15, 2014

Conference on Digital Experimentation (CODE) 2014

About

The ability to rapidly deploy micro-level randomized experiments at population scale is, in our view, one of the most significant innovations in modern social science. As more and more social interactions, behaviors, decisions, opinions and transactions are digitized and mediated by online platforms, we can quickly answer nuanced causal questions about the role of social behavior in population-level outcomes such as health, voting, political mobilization, consumer demand, information sharing, product rating and opinion aggregation. When appropriately theorized and rigorously applied, randomized experiments are the gold standard of causal inference and a cornerstone of effective policy. But the scale and complexity of these experiments also create scientific and statistical challenges for design and inference. The purpose of the Conference on Digital Experimentation at MIT (CODE) is to bring together leading researchers conducting and analyzing large scale randomized experiments in digitally mediated social and economic environments, in various scientific disciplines including economics, computer science and sociology, in order to lay the foundation for ongoing relationships and to build a lasting multidisciplinary research community.
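To make the "design and inference" point a little more concrete, here is a minimal Python sketch of the most basic analysis of a two-arm randomized experiment: the difference in mean outcomes between treatment and control, with the usual conservative standard error and a normal-approximation 95% interval. The function name and the toy click data are hypothetical, and the sketch deliberately ignores the complications that make platform-scale experiments hard (interference between users, clustering, many simultaneous tests).

```python
from math import sqrt
from statistics import mean, variance


def difference_in_means(treatment, control, z=1.96):
    """Estimate an average treatment effect from a two-arm randomized experiment.

    Returns (effect, standard_error, (ci_low, ci_high)). The standard error is the
    conservative (Neyman-style) one for a completely randomized design, and the
    interval is a normal approximation with critical value z.
    """
    if len(treatment) < 2 or len(control) < 2:
        raise ValueError("each arm needs at least two observations")
    effect = mean(treatment) - mean(control)
    # Sample variance of each arm, scaled by that arm's size.
    se = sqrt(variance(treatment) / len(treatment) + variance(control) / len(control))
    return effect, se, (effect - z * se, effect + z * se)


# Hypothetical binary outcomes (1 = user clicked) for eight randomly assigned users.
effect, se, ci = difference_in_means([1, 0, 1, 1], [0, 0, 1, 0])
print(f"effect={effect:.3f}  se={se:.3f}  95% CI=({ci[0]:.3f}, {ci[1]:.3f})")
```

With only four users per arm, the estimated effect of 0.5 comes with an interval of roughly -0.19 to 1.19 that includes zero, which illustrates why sample size matters so much for inference in these experiments.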

Speakers

Eric Anderson, Kellogg

Alessandro Acquisti, CMU

Susan Athey, Stanford

Eric Horvitz, Microsoft

Jeremy Howard, Khosla Ventures

Ron Kohavi, Microsoft

Karim R. Lakhani, Harvard

John Langford, Microsoft

David Lazer, Northeastern

Sendhil Mullainathan, Harvard

Claudia Perlich, Dstillery

David Reiley, Google

Hal Varian, Google

Dan Wagner, Civis

Duncan Watts, Microsoft

Important Dates

Workshop: October 10-11, 2014

Abstract Submission Deadline: August 15, 2014

Notification to Authors: September 1, 2014

Final Abstract Submission: September 12, 2014

Early Registration Deadline: September 19, 2014

Onsite Registration: October 10, 2014

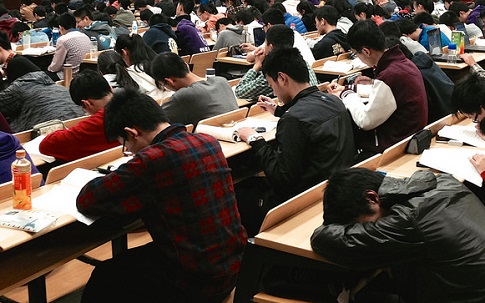

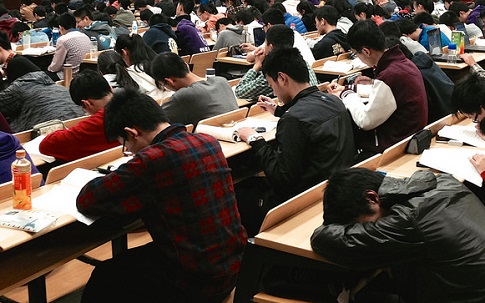

META-ANALYSIS SUGGESTS ACTIVE LEARNING BEATS LECTURING FOR TEACHING SCIENCE

With technology, old ways of doing things give way to simply better alternatives. We no longer need to pick up a phone to buy a plane ticket or hail a cab. We no longer need to carry cash around to pay for most things. Soon, students may not need to listen to traditional lectures to learn science and math because technology can make most STEM lessons participatory. But will the new model of instruction be as effective as traditional lecturing? In what its authors call “the largest and most comprehensive meta-analysis of undergraduate STEM education published to date”, the answer seems to be yes.

ABSTRACT

To test the hypothesis that lecturing maximizes learning and course performance, we metaanalyzed 225 studies that reported data on examination scores or failure rates when comparing student performance in undergraduate science, technology, engineering, and mathematics (STEM) courses under traditional lecturing versus active learning. The effect sizes indicate that on average, student performance on examinations and concept inventories increased by 0.47 SDs under active learning (n = 158 studies), and that the odds ratio for failing was 1.95 under traditional lecturing (n = 67 studies). These results indicate that average examination scores improved by about 6% in active learning sections, and that students in classes with traditional lecturing were 1.5 times more likely to fail than were students in classes with active learning. Heterogeneity analyses indicated that both results hold across the STEM disciplines, that active learning increases scores on concept inventories more than on course examinations, and that active learning appears effective across all class sizes, although the greatest effects are in small (n ≤ 50) classes. Trim and fill analyses and fail-safe n calculations suggest that the results are not due to publication bias. The results also appear robust to variation in the methodological rigor of the included studies, based on the quality of controls over student quality and instructor identity. This is the largest and most comprehensive metaanalysis of undergraduate STEM education published to date. The results raise questions about the continued use of traditional lecturing as a control in research studies, and support active learning as the preferred, empirically validated teaching practice in regular classrooms.

REFERENCE

Freeman, Scott, Sarah L. Eddy, Miles McDonough, Michelle K. Smith, Nnadozie Okoroafor, Hannah Jordt, and Mary Pat Wenderoth. (2014). Active learning increases student performance in science, engineering, and mathematics. PNAS, 111(23), 8410-8415; published ahead of print May 12, 2014, doi:10.1073/pnas.1319030111.
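The abstract reports an odds ratio of 1.95 for failing under lecturing, yet describes lecture students as about 1.5 times more likely to fail. Both statements can hold because odds ratios and risk ratios coincide only when the outcome is rare; how far they diverge depends on the failure rate in the comparison group. The Python sketch below applies the standard conversion RR = OR / (1 - p0 + p0 * OR), where p0 is the failure risk under active learning. The baseline failure rates are hypothetical, not taken from the paper, and the authors may have derived their figure differently.

```python
def risk_ratio_from_odds_ratio(odds_ratio, baseline_risk):
    """Convert an odds ratio into a risk ratio.

    baseline_risk is the probability of the outcome (here, failing) in the
    reference group (here, active-learning sections).
    """
    if not 0.0 <= baseline_risk <= 1.0:
        raise ValueError("baseline_risk must be a probability in [0, 1]")
    return odds_ratio / (1.0 - baseline_risk + baseline_risk * odds_ratio)


def risk_from_odds_ratio(odds_ratio, baseline_risk):
    """Outcome probability in the comparison group implied by the odds ratio."""
    return baseline_risk * risk_ratio_from_odds_ratio(odds_ratio, baseline_risk)


OR_FAIL_LECTURE = 1.95  # odds ratio for failing under lecturing, from the abstract

# Hypothetical failure rates in active-learning sections.
for p0 in (0.05, 0.20, 0.30):
    rr = risk_ratio_from_odds_ratio(OR_FAIL_LECTURE, p0)
    p1 = risk_from_odds_ratio(OR_FAIL_LECTURE, p0)
    print(f"active-learning failure {p0:.0%} -> lecture failure {p1:.1%}, risk ratio {rr:.2f}")
```

As the baseline failure rate rises, the risk ratio falls further below the odds ratio: at a hypothetical 30% failure rate under active learning, an odds ratio of 1.95 corresponds to a risk ratio of about 1.52.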

SOCIETY FOR JUDGMENT AND DECISION MAKING NEWSLETTER AND 2014 CONFERENCE SUBMISSION DEADLINE

The Society for Judgment and Decision Making is pleased to announce that the current newsletter is ready for download:

http://sjdm.org/newsletters/

Enjoy!

Dan Goldstein

SJDM newsletter editor

P.S. Don’t forget that the SJDM conference submission deadline is June 30, 2014. The conference will be held November 21-24, 2014 in Long Beach, California. The call for abstracts is available at: http://www.sjdm.org/programs/2014-cfp.html


DO BEHAVIORAL ECONOMICS IN THE US GOVERNMENT

The U.S. Social and Behavioral Science Team (SBST) is currently seeking exceptionally qualified individuals to serve as Fellows.

The SBST helps federal agencies increase the efficiency and efficacy of their programs and policies, by harnessing research methods and findings from the social and behavioral sciences. The team works closely with agencies across the federal government, thinking creatively about how to translate social and behavioral science insights into concrete interventions that are likely to improve federal outcomes and designing rigorous field trials to test the impact of these recommendations.

See the SBST Fellow Solicitation for 2014 for duties and requirements, and details on how to apply for this unique opportunity.

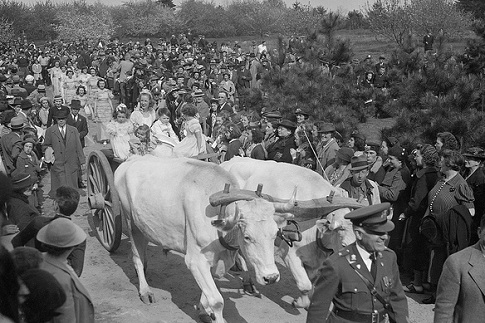

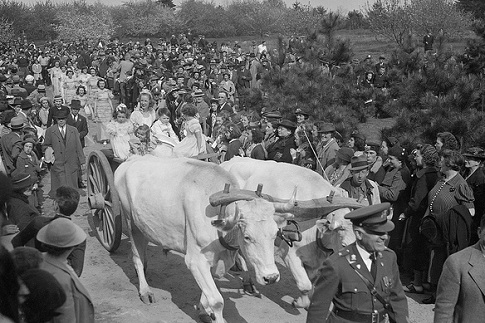

THERE IS WISDOM WITHIN

How many crowd members are needed to accurately estimate the weight of these oxen?

It has come to our attention that a number of papers, all making a similar point (roughly, that a smaller, well-chosen crowd can beat a larger one), have been produced at about the same time. Here they are:

PAPERS ON SMALLER, SMARTER CROWDS

Budescu, D. V., & Chen, E. (2014). Identifying expertise to extract the wisdom of crowds. Management Science.

Davis-Stober, C. P., Budescu, D. V., Dana, J., & Broomell, S. B. (2014). When is a crowd wise? Decision.

Fifić, M., & Gigerenzer, G. (2014). Are two interviewers better than one? Journal of Business Research, 67(8), 1771-1779.

Goldstein, D. G., McAfee, R. P., & Suri, S. (in press). The wisdom of smaller, smarter crowds. Proceedings of the 15th ACM Conference on Electronic Commerce (EC’14).

Jose, V. R. R., Grushka-Cockayne, Y., & Lichtendahl Jr, K. C. (2014). Trimmed opinion pools and the crowd’s calibration problem. Management Science.

Mannes, A. E., Soll, J. B., & Larrick, R. P. (in press). The wisdom of select crowds. Journal of Personality and Social Psychology.

This post will no doubt cause people to come forth with yet other papers, which we will include if they fit. Bring them on.
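The shared idea can be illustrated with a toy example. The Python sketch below compares the plain average of every judge's estimate with the average of only the k judges who had the smallest errors on earlier questions, which is one simple way to build a "smaller, smarter" crowd. The judges, their guesses, their past errors, and the true weight are all hypothetical, and the sketch is not the specific procedure of any paper listed above.

```python
from statistics import mean


def crowd_estimate(estimates):
    """Wisdom-of-crowds baseline: the simple average of all judges."""
    return mean(estimates)


def select_crowd_estimate(estimates, past_errors, k):
    """Average only the k judges with the lowest error on earlier questions."""
    if not 1 <= k <= len(estimates):
        raise ValueError("k must be between 1 and the number of judges")
    best = sorted(range(len(estimates)), key=lambda i: past_errors[i])[:k]
    return mean([estimates[i] for i in best])


# Hypothetical ox-weight guesses (in pounds) from six judges, plus each judge's
# average absolute error on earlier questions.
true_weight = 1200
guesses = [1150, 1230, 1190, 1500, 1600, 1210]
past_errors = [30, 25, 20, 300, 350, 40]

whole = crowd_estimate(guesses)
select = select_crowd_estimate(guesses, past_errors, k=3)
print(f"whole crowd: {whole:.1f} (error {abs(whole - true_weight):.1f})")
print(f"best 3:      {select:.1f} (error {abs(select - true_weight):.1f})")
```

The select crowd wins here only because the two wild guessers were also inaccurate in the past. When past accuracy does not predict current accuracy, dropping judges can just as easily throw away useful information.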

SELF-CONSTRUCTED STREAKS IN GAMBLING SUCCESS AND FAILURE

We came across this write-up (excuse the click-baity title) of a new paper by Juemin Xu and Nigel Harvey called “Carry on winning: The gamblers’ fallacy creates hot hand effects in online gambling” and found it fascinating. It’s one of those things you read and think, “How could no one have thought of this before?”

ABSTRACT

People suffering from the hot-hand fallacy unreasonably expect winning streaks to continue whereas those suffering from the gamblers’ fallacy unreasonably expect losing streaks to reverse. We took 565,915 sports bets made by 776 online gamblers in 2010 and analyzed all winning and losing streaks up to a maximum length of six. People who won were more likely to win again (apparently because they chose safer odds than before) whereas those who lost were more likely to lose again (apparently because they chose riskier odds than before). However, selection of safer odds after winning and riskier ones after losing indicates that online sports gamblers expected their luck to reverse: they suffered from the gamblers’ fallacy. By believing in the gamblers’ fallacy, they created their own hot hands.

REFERENCE

Xu, Juemin and Nigel Harvey. (2014). Carry on winning: The gamblers’ fallacy creates hot hand effects in online gambling. Cognition, Volume 131, Issue 2, May 2014, Pages 173–180. [Full text and PDF free at the publisher’s site]

Photo credit: https://www.flickr.com/photos/jesscross/3169240519/
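The core measurement behind a result like this is a conditional win rate: how often a bet wins right after a run of wins versus right after a run of losses. The Python sketch below computes that quantity from one gambler's sequence of outcomes. The function and the outcome sequence are hypothetical illustrations, not Xu and Harvey's data or analysis code, and the calculation alone says nothing about why a streak continues; in the paper, the explanation came from which odds the gamblers chose.

```python
def win_rate_after_streak(outcomes, streak_len, streak_value):
    """P(bet wins | the previous `streak_len` bets all equal `streak_value`).

    outcomes is a chronological list of 1 (win) and 0 (loss) for one gambler.
    Returns None when the streak never occurs before a later bet.
    """
    hits = total = 0
    for i in range(streak_len, len(outcomes)):
        if all(o == streak_value for o in outcomes[i - streak_len:i]):
            total += 1
            hits += outcomes[i]
    return hits / total if total else None


# Hypothetical outcome sequence for one gambler.
bets = [1, 1, 1, 0, 0, 0, 1, 1]
for length in (1, 2):
    print(f"after {length} win(s): {win_rate_after_streak(bets, length, 1)}, "
          f"after {length} loss(es): {win_rate_after_streak(bets, length, 0)}")
```

The abstract's point is that such a pattern need not reflect any real streakiness in luck: a gambler who switches to safer odds after a win and riskier odds after a loss will tend to win more often after wins simply because of those choices.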

STARTING TODAY

Preston McAfee, former decade-long editor of the American Economic Review, former Caltech professor, and all-around microeconomist extraordinaire, starts today as Chief Economist of Microsoft.

[TechNet] Microsoft hires Preston McAfee as chief economist

We at Decision Science News are excited to be publishing with Preston again, picking up on the research we did with Sid Suri and him back in the Yahoo Research days:

- Goldstein, Daniel G., R. Preston McAfee, & Siddharth Suri. (in press) The Wisdom of Smaller, Smarter Crowds. Proceedings of the 15th ACM Conference on Electronic Commerce (EC’14)

- Goldstein, Daniel G., R. Preston McAfee, & Siddharth Suri. (2013). The cost of annoying ads. Proceedings of the 22nd International World Wide Web Conference (WWW 2013).

- Goldstein, Daniel G., R. Preston McAfee, & Siddharth Suri. (2012). Improving the effectiveness of time-based display advertising. Proceedings of the 13th ACM Conference on Electronic Commerce (EC’12) [Winner: Best Paper Award]

- Goldstein, Daniel G., R. Preston McAfee, & Siddharth Suri. (2011). The effects of exposure time on memory of display advertisements. Proceedings of the 12th ACM Conference on Electronic Commerce (EC’11)

After Yahoo, Sid and I went to Microsoft and Preston went to Google. We’re all very happy to be reunited!

MECHANICAL VERSUS CLINICAL DATA COMBINATION IN SELECTION AND ADMISSIONS DECISIONS: A META-ANALYSIS

The pink plastic alligator at Erasmus University Rotterdam says “Interview-based impressions belong in the trash can behind me.”

Is there something you’ve learned in your job that you wish you could tell everyone? We have something that has been well known to decision-making researchers for decades, yet is all but unknown in the outside world.

Here’s the deal. When hiring or making admissions decisions, impressions of a person from an interview are close to worthless. Hire on the most objective data you have. Even when people try to combine their impressions with data, they make worse decisions than they would by following the data alone.

Don’t be swayed by an interview. It’s not fair to the other candidates who are better on paper. They will most likely be better in practice.
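What does "following the data" look like in practice? One simple mechanical rule, sketched below in Python, standardizes each predictor across the applicant pool and adds the standardized scores with equal weights, so every candidate is scored by the same formula and no interview impression enters. The applicants, predictor names, and equal-weight choice are hypothetical; the meta-analysis below compares mechanical and holistic combination in general and does not prescribe this particular formula.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values to mean 0 and (population) standard deviation 1."""
    mu, sd = mean(values), pstdev(values)
    if sd == 0:
        return [0.0] * len(values)
    return [(v - mu) / sd for v in values]


def unit_weighted_scores(candidates, predictors):
    """Mechanical combination: sum each candidate's z-scores with equal weights."""
    names = list(candidates)
    totals = dict.fromkeys(names, 0.0)
    for predictor in predictors:
        zs = z_scores([candidates[name][predictor] for name in names])
        for name, z in zip(names, zs):
            totals[name] += z
    return totals


# Hypothetical applicant data; no interview impressions are used.
applicants = {
    "A": {"test": 90, "gpa": 3.8, "work_sample": 8},
    "B": {"test": 70, "gpa": 3.0, "work_sample": 6},
    "C": {"test": 80, "gpa": 3.4, "work_sample": 7},
}
scores = unit_weighted_scores(applicants, ["test", "gpa", "work_sample"])
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['A', 'C', 'B']
```

Fixing the formula in advance means it cannot be nudged case by case by an impression. In practice the weights could instead be estimated from past selection data; equal weights simply avoid needing a fitted model.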

Please see:

* This paper by Kuncel, Klieger, Connelly, and Ones: Mechanical versus clinical data combination in selection and admissions decisions: A meta-analysis.

ABSTRACT

In employee selection and academic admission decisions, holistic (clinical) data combination methods continue to be relied upon and preferred by practitioners in our field. This meta-analysis examined and compared the relative predictive power of mechanical methods versus holistic methods in predicting multiple work (advancement, supervisory ratings of performance, and training performance) and academic (grade point average) criteria. There was consistent and substantial loss of validity when data were combined holistically—even by experts who are knowledgeable about the jobs and organizations in question—across multiple criteria in work and academic settings. In predicting job performance, the difference between the validity of mechanical and holistic data combination methods translated into an improvement in prediction of more than 50%. Implications for evidence-based practice are discussed.

REFERENCE

Kuncel, N. R., Klieger, D. M., Connelly, B. S., and Ones, D. S. (2013). Mechanical versus clinical data combination in selection and admissions decisions: A meta-analysis. Journal of Applied Psychology, 98(6), 1060

* This paper by Highhouse: Stubborn reliance on intuition and subjectivity in employee selection. Industrial and Organizational Psychology, 1(3), 333-342.

ABSTRACT

The focus of this article is on implicit beliefs that inhibit adoption of selection decision aids (e.g., paper-and-pencil tests, structured interviews, mechanical combination of predictors). Understanding these beliefs is just as important as understanding organizational constraints to the adoption of selection technologies and may be more useful for informing the design of successful interventions. One of these is the implicit belief that it is theoretically possible to achieve near-perfect precision in predicting performance on the job. That is, people have an inherent resistance to analytical approaches to selection because they fail to view selection as probabilistic and subject to error. Another is the implicit belief that prediction of human behavior is improved through experience. This myth of expertise results in an over-reliance on intuition and a reluctance to undermine one’s own credibility by using a selection decision aid.

REFERENCE

Highhouse, S. (2008). Stubborn reliance on intuition and subjectivity in employee selection. Industrial and Organizational Psychology, 1(3), 333-342.

* This chapter by Highhouse and Kostek: Holistic assessment for selection and placement.

ABSTRACT

Holism in assessment is a school of thought or belief system rather than a specific technique. It is based on the notion that assessment of future success requires taking into account the whole person. In its strongest form, individual test scores or measurement ratings are subordinate to expert diagnoses. Traditional standardized tests are seen as providing only limited snapshots of a person, and expert intuition is viewed as the only way to understand how attributes interact to create a complex whole. Expert intuition is used not only to gather information but also to properly execute data combination. Under the holism school, an expert combination of cues qualifies as a method or process of measurement. The holistic assessor views the assessment of personality and ability as an idiographic enterprise, wherein the uniqueness of the individual is emphasized and nomothetic generalizations are downplayed (see Allport, 1962). This belief system has been widely adopted in college admissions and is implicitly held by employers who rely exclusively on traditional employment interviews to make hiring decisions. Milder forms of holistic belief systems are also held by a sizable minority of organizational psychologists, namely those who conduct managerial, executive, or special-operation assessments. In this chapter, the roots of holistic assessment for selection and placement decisions are reviewed and the applications of holistic assessment in college admissions and employee selection are discussed. Evidence and controversy surrounding holistic practices are examined, and the assumptions of the holistic school are evaluated. The chapter concludes that using more standardized procedures rather than less standardized ones invariably enhances the scientific integrity of the assessment process.

REFERENCE

Highhouse, Scott and Kostek, John A. (2013). Holistic assessment for selection and placement. Chapter in: APA handbook of testing and assessment in psychology, Vol. 1: Test theory and testing and assessment in industrial and organizational psychology. http://psycnet.apa.org/index.cfm?fa=search.displayRecord&UID=2012-22485-031

Feel free to post other references in the comments.