Judgment and Decision Making, Vol. 16, No. 4, July 2021, pp. 823-843

No effects of synchronicity in online social dilemma experiments: A registered report

Anthony M. Evans*

Christoph Kogler#

Willem W. A. Sleegers$


Abstract:

Online experiments have become a valuable research tool for researchers

interested in the processes underlying cooperation. Typically, online

experiments are asynchronous: participants complete an experiment

individually and are matched with partners after data collection has been

completed. We conducted a registered report to compare asynchronous and

synchronous designs, where participants interact and receive feedback in

real-time. We investigated how two features of synchronous designs,

pre-decision matching and immediate feedback, influence cooperation in the

prisoners dilemma. We hypothesized that 1) pre-decision matching

(assigning participants to specific interaction partners before they make

decisions) would lead to decreased social distance and increased

cooperation; 2) immediate feedback would reduce feelings of aversive

uncertainty and lead to increased cooperation; and 3) individuals with

prosocial Social Value Orientations would be more sensitive to the

differences between synchronous and asynchronous designs. We found no

support for these hypotheses. In our study (N = 1,238),

pre-decision matching and immediate feedback had no significant effects on

cooperative behavior or perceptions of the interaction; and their effects

on cooperation were not significantly moderated by Social Value

Orientation. The present results suggest that synchronous designs have

little effect on cooperation in online social dilemma experiments.

Keywords: social dilemmas; cooperation; uncertainty; delayed feedback

1 Introduction

Online experiments have become a valuable research tool for psychologists

and economists interested in human cooperation (Arechar et al., 2018;

Horton et al., 2011). Online experiments offer potential advantages, such

as large sample sizes (Hauser et al., 2016) and access to diverse

participants (Nishi et al., 2017); and increase the feasibility of multi-

and cross-national studies (Dorrough & Glöckner, 2019; Romano et al.,

2017). When social dilemmas experiments are conducted online, researchers

often use asynchronous designs, where participants complete an experiment

individually and are matched with partners after data collection has been

completed. Asynchronous experiments differ from synchronous experiments in

two important ways: First, participants are not matched to a specific

partner before decisions are made. Second, participants do not receive

immediate feedback on the consequences of their choices. The present

research tests whether pre-decision matching and immediate feedback

influence cooperation, and whether the effects of these design features

are moderated by individual differences in prosocial preferences. The

proposed research contributes to our understanding of how common research

methods influence psychological processes and behavior in online social

dilemmas experiments.

1.1 Conducting Social Dilemmas Experiments Online

Social dilemmas are situations where individuals must make a choice between

pursuing self-interest and the collective good (Dawes, 1980), and the

study of social dilemmas is an important point of intersection for

researchers in the behavioral sciences (Van Lange et al., 2013). As the

use of online samples in psychological research has grown (Birnbaum, 2000;

Buhrmester et al., 2011; Gosling et al., 2004), many social dilemmas

researchers have begun to rely on online participant pools such as

Amazon’s Mechanical Turk (MTurk) and Prolific Academic.

Importantly, online social dilemmas experiments also produce valid data:

Amir et al. (2012) found that online behavior in typical economic games

(e.g., the public good game, the trust game, the ultimatum game, and the

dictator game) resembles behavior observed in laboratory experiments

(e.g., responders in the ultimatum game reject unfair offers, and

reciprocity in the trust game is proportional to the initial level of

trust). Arechar et al. (2018) also demonstrated that online participants

can be used to study behavior in repeated games: as in laboratory studies,

cooperation deteriorated in later rounds of a repeated interaction, but

was bolstered by introducing the possibility of peer punishment.

There are also potential limitations to online experiments. Although data

quality is generally adequate (Buhrmester et al., 2011), experimental

manipulations may be affected by the non-naivete and prior experience of

online participants (Chandler et al., 2014). Illustrating this problem,

Rand et al. (2014) found that manipulations of time pressure in social

dilemmas became progressively less effective over time, in part because

more participants developed prior experience with experimental games.

Similarly, Chandler et al. (2015) found that effect sizes in a range of

psychological tasks became less pronounced among experienced participants.

However, the effects of participant sophistication can be attenuated by

presenting participants with new variants of existing paradigms (Rand et

al., 2014).

1.2 Synchronicity in Online Experiments

Although synchronous experiments, where participants have live interactions

and receive feedback in real-time, are possible using software programs

such as oTree (Chen et al., 2016) and classEx (Arechar et al., 2018), most

online experiments are asynchronous. To illustrate this point, we surveyed

articles published in the Judgment and Decision Making journal over an

eight-year period (2013–2020): There were 40 published studies using

online samples to study behavior in social dilemmas, and all of these

studies were conducted using asynchronous methods. No studies that we know

of have systematically compared behavior in synchronous and asynchronous

online experiments. In this section, we review research suggesting that

behavior may be affected by two defining features of fully synchronous

experiments, pre-decision matching and immediate feedback.

1.2.1 Pre-decision Versus Post-decision Matching

In synchronous experiments, participants are assigned to specific partners

before any decisions are made; in asynchronous experiments, partner

assignment happens only after data collection is complete. We predicted

that pre-decision matching would reduce the perceived social distance

between participants. This prediction is motivated by research on

charitable giving and the identifiable victim effect: people are more

willing to help a single victim compared to a group of statistical victims

(Kogut & Ritov, 2005a, 2005b). Critically, this effect does not depend on

the specific characteristics of the individual victim (Kogut & Ritov,

2005a), and it can even occur when the recipient of help remains

unidentified (Lee & Feeley, 2016; Small & Loewenstein, 2003). Arguably,

people experience a stronger emotional connection to the recipient of help

when the recipient is identified as any specific person (Small &

Loewenstein, 2003; Small et al., 2007). In social dilemmas, we anticipated

that assigning participants to interact with specific partners before they

make decisions would reduce perceived social distance.

Additionally, other work suggests that pre-decision matching may reduce

distance by leading people to interpret their interactions as social

exchanges, rather than abstract reasoning problems: Research on the

strategy method, which requires participants to make conditional decisions

for all possible situations (Brandts & Charness, 2011), suggests that

people sometimes become less trusting (Murphy et al., 2006) and less

trustworthy (Casari & Cason, 2009) when social decisions are presented

abstractly. Subtle social cues, such as referring to other players as

partners versus opponents (McCabe et al., 2003) or describing an

interaction as a community game versus Wall Street game (Liberman et al.,

2004), can encourage prosocial behavior by making the norm of reciprocity

salient. In the same way, pre-decision matching may change how

participants construe their decisions, leading them to feel closer and

more interconnected with their interaction partners.

We expected that pre-decision matching would reduce social distance, and

that reduced social distance, in turn, would result in increased

cooperation. People are generally more prosocial towards those they feel

close to (Jones & Rachlin, 2006, 2009), and this increase in cooperation

can even occur when proximity is based on arbitrary procedures, such as

the minimal group paradigm (Balliet et al., 2014; Goette et al., 2006). In

summary, our first prediction was that pre-decision matching would reduce

social distance and, in turn, increase cooperation among strangers.

1.2.2 Immediate Versus Delayed Feedback

In synchronous experiments, participants immediately receive feedback on

the outcomes of their decisions; in asynchronous online experiments, there

is a delay, sometimes hours or days, before participants learn about

interaction partners’ decisions and their final payoffs for the

experiment. Delaying feedback adds a temporal dimension to the social

dilemma, and previous studies have found that cooperation is more

difficult to sustain when potential outcomes are projected into the future

(Joireman et al., 2004; Kortenkamp & Moore, 2006).¹

We predicted that immediate feedback would increase cooperation by reducing

feelings of aversive uncertainty related to the fear of exploitation.

Undesirable outcomes, such as losing money or receiving an electric shock,

are perceived as worse when they are projected into the future

(Loewenstein, 1987). For risky decisions, delayed feedback affects the

subjective perception of the likelihood and impact of negative outcomes,

and thus makes people more likely to select relatively safe options

(Kogler et al., 2016; Muehlbacher et al., 2012). In social dilemmas,

defection may be seen as “safer” than cooperation because choosing

defection eliminates the possibility of the worst possible outcome (i.e.,

the sucker’s payoff). Therefore, our second hypothesis was that immediate

feedback would reduce feelings of aversive uncertainty and lead to

increased cooperation.

1.2.3 Synchronicity and Social Value Orientation

In addition to considering the group-level effects of synchronous

experiments, we also investigated whether the effects of synchronous

designs were moderated by individual differences in Social Value

Orientation (SVO) (Murphy & Ackermann, 2014; Van Lange, 1999). We

hypothesized that prosocial individuals (i.e., those with stronger

preferences to maximize joint outcomes or outcome equality) would be more

likely to be affected by the distinction between synchronous and

asynchronous experiments. Compared to fully self-focused individualists,

prosocials are more sensitive to situational cues (Bogaert et al., 2008)

and are more likely to adapt their expectations and behavior based on

context (Van Lange, 1999). In other words, prosocials cooperate in social

dilemmas when it can be justified by the constraints of the situation;

individualists, on the other hand, tend to be unconditionally

self-interested (Epstein et al., 2016; Yamagishi et al., 2014). Thus, we

expected that pre-decision matching and immediate feedback would have

stronger (positive) effects on cooperation for individuals with stronger

prosocial preferences.

1.3 Overview of Hypotheses

We conducted an experiment comparing behavior in synchronous versus

asynchronous dilemmas. We examined how two features of synchronous

experiments, pre-decision matching and immediate feedback, influenced

cooperation. We predicted that pre-decision matching and immediate

feedback would both increase cooperation. More precisely, we expected that

pre-decision matching would reduce feelings of social distance and that

immediate feedback would reduce feelings of aversive uncertainty. In

addition to considering the group-level effects of synchronous

experiments, we also tested whether individual differences in SVO

moderated their effects on behavior. We hypothesized that pre-decision

matching and immediate feedback would have stronger positive effects on

the behavior of individuals with prosocial preferences. Our Stage 1 report

can be viewed at

https://osf.io/7qnej/?view_only=d79a1ba27cd34a01b0d14fa0b8cb03a2.

2 Method

2.1 Participants

Power Analysis.

We conducted power analyses using G*Power 3.1 (Faul et al., 2009) to

identify the number of participants needed to detect a small effect

(ϕ = .1) using a chi-square test with 3 groups, 80%

power, and α = .05: minimum N = 964. We adjusted this

estimate based on an expected dropout rate of roughly 10%.²

Our total planned sample size was N = 1,200.
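The minimum sample size above can be checked without G*Power. The following is a minimal sketch, not the authors' procedure: it assumes the chi-square test has df = 2 (three conditions by two choices), and the function names are illustrative. Power is computed from the noncentral chi-square distribution with noncentrality N × ϕ², written as a Poisson-weighted sum of central chi-square tail probabilities, which have a closed form when df is even.

```python
import math


def power_chi2_df2(n, w, alpha=0.05, terms=200):
    """Power of a df = 2 chi-square test with effect size w and sample size n.

    Assumption: df = 2 (three groups, binary outcome). The noncentral
    chi-square upper tail is a Poisson mixture of central chi-square tails.
    """
    half_crit = -math.log(alpha)      # critical value / 2; exact when df = 2
    half_lam = n * w * w / 2.0        # half of the noncentrality parameter
    poisson = math.exp(-half_lam)     # Poisson weight for j = 0
    series_term = 1.0                 # half_crit**j / j!
    series_sum = 0.0                  # running sum of series_term
    power = 0.0
    for j in range(terms):
        series_sum += series_term
        # Central chi-square upper tail with df = 2 + 2j at the critical value
        power += poisson * alpha * series_sum
        poisson *= half_lam / (j + 1)
        series_term *= half_crit / (j + 1)
    return power


def required_n(w, target=0.80, alpha=0.05):
    """Smallest sample size whose power reaches the target."""
    n = 1
    while power_chi2_df2(n, w, alpha) < target:
        n += 1
    return n


print(required_n(0.1))  # minimum N for phi = .1, alpha = .05, 80% power
```

Under these assumptions the search stops at N = 964, matching the value reported above.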

We recruited 1,238 participants from Prolific Academic. We ended up with

slightly more participants than expected because Prolific sometimes

classified participants as “timed out” while they were still completing

the experiment. The average age was 26.64 years (SD = 9.46); and there

were 648 men, 383 women, 12 non-binary participants, and 195 participants

who did not report their gender.

Recruitment.

We conducted twelve experimental sessions with 100 available spaces per

session. Sessions were launched on weekdays at 2:00PM CEST. The first

three sessions were conducted in November 2020, and the remaining nine

sessions were conducted in January 2021. Participants were prevented from

completing the study more than once using Prolific’s “previous study”

filter. Participants received a show-up payment of £1.50 each, and those

who finished the experiment also received bonus payments based on their

choices (£0.50 to £4.00 per person).

2.2 Materials and Procedure

The experiment was administered using oTree (Chen et al., 2016). The

experiment did not involve any deception.

Prisoners Dilemma.

Participants made decisions in a binary choice prisoners dilemma. Each

participant was assigned to a partner and chose Keep or Transfer: If both

players chose Keep, then they received 100 points each; if both players

chose Transfer, then they received 200 points each; if one player chose

Keep and the other chose Transfer, then they received 300 points and 0

points, respectively. After reading the instructions, participants were

presented with four comprehension questions (Example: “If both you and the

other participant choose “Transfer”, how many points will you receive?”).

The majority of participants (75%) answered all four questions correctly;

and rates of accuracy did not differ across experimental conditions:

asynchronous = 75%; partially synchronous = 75%; synchronous = 73%

(χ²(2) = 0.55, p = .76). Our primary analyses

included all participants. Following our pre-registration, we also

conducted supplemental analyses using only data from participants who

answered all four questions correctly.
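The payoff rule described above can be written as a small lookup table. This is an illustrative sketch only; the names `PAYOFFS` and `payoff` are not taken from the experiment's software. The final lines check that the point values satisfy the usual prisoners dilemma ordering (temptation > reward > punishment > sucker's payoff, with mutual cooperation beating alternating exploitation).

```python
# Points for (own choice, partner's choice) -> (own points, partner's points)
PAYOFFS = {
    ("Keep", "Keep"): (100, 100),
    ("Transfer", "Transfer"): (200, 200),
    ("Keep", "Transfer"): (300, 0),
    ("Transfer", "Keep"): (0, 300),
}


def payoff(own_choice, partner_choice):
    """Points earned by the player who made own_choice."""
    return PAYOFFS[(own_choice, partner_choice)][0]


# Comprehension-check style example: both players choose Transfer
print(payoff("Transfer", "Transfer"))

# Prisoners dilemma structure: T > R > P > S and 2R > T + S
T, R = payoff("Keep", "Transfer"), payoff("Transfer", "Transfer")
P, S = payoff("Keep", "Keep"), payoff("Transfer", "Keep")
print(T > R > P > S and 2 * R > T + S)
```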

The full instructions and experiment materials are reported in the

Appendix.

Proposed Mediators.

We hypothesized that social distance and aversive uncertainty would mediate

the effects of pre-decision matching and immediate feedback (respectively)

on cooperation. The items measuring these constructs are reported in Table

1a. We randomized whether participants responded to the proposed mediators

immediately before or immediately after they made decisions in the

prisoners dilemma, and this randomization occurred at the session level.

Post-decision Measures.

After cooperation decisions were made, participants were presented with a

series of questions before they received feedback on the outcome of the

interaction. We measured expectations of cooperation in their interaction

partners; their confidence in these expectations; and feelings of

anticipated satisfaction and regret. These items are reported in Table 1b.

After completing these post-decision measures, participants then completed

the 6-item slider measure of SVO (Murphy & Ackermann, 2014). In this

measure, each participant was asked to make a series of hypothetical

allocation decisions, where they decided how many points to share with an

anonymous interaction partner.
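For readers unfamiliar with the slider measure, the sketch below shows the scoring rule commonly used for it: the SVO angle is the arctangent of the mean allocation to the other person minus 50, divided by the mean allocation to oneself minus 50. We assume, without confirmation from the text, that this standard scoring was applied here; the function names and the allocations in the example are illustrative.

```python
import math


def svo_angle(self_points, other_points):
    """SVO angle in degrees from the six primary slider allocations.

    Assumption: standard scoring, with both means centered at 50 points.
    atan2 is used so that the angle stays defined when the self mean is 50.
    """
    mean_self = sum(self_points) / len(self_points)
    mean_other = sum(other_points) / len(other_points)
    return math.degrees(math.atan2(mean_other - 50.0, mean_self - 50.0))


def mean_center(values):
    """Subtract the sample mean, as done for the SVO angle in the regression models."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


# Illustrative (made-up) allocations: equal split vs. purely self-maximizing
equal_split = svo_angle([85] * 6, [85] * 6)
self_maximizing = svo_angle([100] * 6, [50] * 6)
print(equal_split, self_maximizing)
```

Larger angles indicate stronger prosocial preferences; an angle near zero corresponds to an individualistic orientation.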

Post-feedback Measures.

After participants learned about their partners’ choices and the outcome of

the interaction (including information about their bonus payment), they

responded to a series of items before concluding the experiment. To

measure participants’ reactions to feedback, we asked participants how

satisfied they were with the outcome of the interaction and whether they

regretted their choice in the interaction. Participants also played a

mini-dictator game, where they decided whether to share additional money

with the other participant from the prisoners dilemma. We also measured

how much participants enjoyed the experiment, whether they believed that

they were interacting with a real person in the interaction, the perceived

fairness of the interaction, and basic demographics questions. The

complete measures are included in Table 1c.

| Table 1: Summary of hypothesized mediators, post-decision measures, and post-feedback measures. |

| Measure | Item | Response scale | M (SD) |

| A. Hypothesized mediators | | | |

| Social distance | How close do you feel to the other participant in the game? (Reverse-scored) | 0 = not at all; 10 = very close | 2.56 (2.63) |

| | How much do you have in common with the other participant in the game? (Reverse-scored) | 0 = nothing at all; 10 = a lot in common | 3.13 (2.51) |

| Aversive uncertainty | How nervous are you to learn about the outcome of the game? | 0 = not nervous at all; 10 = very nervous | 3.69 (3.02) |

| | How worried are you to learn about the outcome of the game? | 0 = not worried at all; 10 = very worried | 3.11 (2.81) |

| B. Post-decision measures | | | |

| Expected cooperation | On a scale from 0 (will definitely choose Keep) to 10 (will definitely choose Transfer), how likely is it that the other participant will choose Transfer? | 0 = definitely choose keep; 10 = definitely choose transfer | 4.89 (2.26) |

| Confidence in expectations | How confident are you in your expectation of the other participant’s behavior? | 0 = not at all confident; 10 = very confident | 4.71 (2.45) |

| Anticipated satisfaction | How satisfied do you expect to feel about the outcome of the game? | −5 = not at all satisfied; +5 = extremely satisfied | 1.09 (1.90) |

| Anticipated regret | On the previous page, you chose KEEP/TRANSFER. How much regret do you expect to feel about the choice you made? | −5 = no regret at all; +5 = a lot of regret | −0.69 (2.78) |

| C. Post-feedback measures | | | |

| Experienced satisfaction | How satisfied are you with the outcome of the game? | −5 = not at all satisfied; +5 = extremely satisfied | 2.17 (3.51) |

| Experienced regret | How much regret do you feel about the choice you made in the game? | −5 = no regret at all; +5 = a lot of regret | −2.24 (3.43) |

| Mini-dictator game | You now have a final opportunity to earn additional points. These points will be added to your bonus payment. Your decision will also affect the bonus payment of the other participant – the same person you interacted with in the previous part of this study. Left = 50 points for you / 0 points for the other participant; Right = 25 points for you / 25 points for the other participant | Left or Right (binary choice) | 0.66 (chose Right) |

| Enjoyment of experiment | To what extent did you enjoy participating in this experiment? | 0 = not at all; 10 = very much | 7.99 (1.95) |

| | To what extent did you find this experiment interesting? | 0 = not at all; 10 = very much | 8.20 (1.82) |

| Perceived fairness | The rules of the decision-making game were fair. | 0 = strongly disagree; 10 = strongly agree | 7.92 (2.06) |

| | The procedure of the decision-making game was fair. | 0 = strongly disagree; 10 = strongly agree | 7.91 (2.20) |

| Perceived realism | In this study, to what extent did you feel like you were interacting with a real person? | 0 = not at all; 10 = very much | 5.47 (2.89) |

| Demographics | Age, gender, English proficiency, income, location, political orientation | | |

Experimental Conditions.

Participants were assigned to partners based on the time at which they

began the experiment. There were three experimental conditions (summarized

in Table 2): asynchronous (post-decision matching with delayed feedback);

partially synchronous (pre-decision matching with delayed feedback); and

fully synchronous (pre-decision matching with immediate feedback).

Experimental conditions were assigned at the level of session, with four

sessions for each condition.

| Table 2: Summary of experimental conditions and descriptive statistics |

| | Partner Matching | Feedback | N | Matching rate | Follow up rate |

| Asynchronous | Post-decision | Delayed | 404 | NA | 0.81 |

| Partially synchronous | Pre-decision | Delayed | 418 | 1.00 | 0.78 |

| Fully synchronous | Pre-decision | Immediate | 409 | 0.96 | NA |

Note: “Matching rate” refers to the proportion of participants who were

successfully assigned to partners during the first part of the experiment.

“Follow up rate” refers to the proportions of participants that completed

the second part of the study (which was administered one week after the first part).

Pre- vs post-decision matching.

Participants in the pre-decision matching conditions were assigned to

partners before they made decisions in the prisoners dilemma. If no

partner was immediately available, then participants were redirected to a

waiting screen where they were asked to wait for a period of up to five

minutes. If no partner could be located during that time frame, then

participants had the option to continue waiting for another five minutes

or to terminate the experiment, in which case they received the show-up

payment (but they did not receive any bonus payment). Across sessions,

almost all participants (98%) were successfully matched with partners.

The average waiting time was 9.66 seconds (SD = 66.02).
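The matching procedure described above can be sketched as a first-come, first-served waiting room. This is a simplified illustration under our own assumptions, not the oTree implementation used in the study: the class and method names are hypothetical, and timing is passed in as plain seconds rather than read from a clock.

```python
from collections import deque

WAIT_LIMIT_SECONDS = 5 * 60  # five-minute waiting period described in the text


class WaitingRoom:
    """Pairs each arriving participant with the longest-waiting participant, if any."""

    def __init__(self):
        self.waiting = deque()  # entries are (participant_id, wait_started_at)

    def arrive(self, participant_id, now):
        """Return a partner id if someone is waiting; otherwise enqueue and return None."""
        if self.waiting:
            partner_id, _ = self.waiting.popleft()
            return partner_id
        self.waiting.append((participant_id, now))
        return None

    def expired(self, now):
        """Participants whose current waiting period has run out."""
        return [pid for pid, start in self.waiting if now - start >= WAIT_LIMIT_SECONDS]

    def resolve_expired(self, participant_id, keep_waiting, now):
        """Restart the clock for a participant who keeps waiting, or drop one who leaves."""
        remaining = deque()
        for pid, start in self.waiting:
            if pid != participant_id:
                remaining.append((pid, start))
            elif keep_waiting:
                remaining.append((pid, now))  # another five minutes from now
        self.waiting = remaining
        return "waiting" if keep_waiting else "show_up_payment_only"


room = WaitingRoom()
print(room.arrive("A", now=0))   # nobody is waiting yet
print(room.arrive("B", now=12))  # paired with the participant already waiting
```

A participant who declines to keep waiting leaves the queue and receives only the show-up payment, mirroring the rule in the text.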

In the post-decision matching condition, participants were assigned to

partners after they had already made decisions in the prisoners dilemma.

Immediate vs delayed feedback.

In the immediate feedback condition, participants learned about the outcome

of the prisoners dilemma as soon as both players made their decisions and

answered the post-decision questions. In the delayed feedback conditions,

participants were contacted via Prolific messages one week after the

initial experiment session and invited to complete the study. A majority

of participants (80%) from the delayed feedback conditions completed the

second part of the study.

2.3 Deviations from pre-registered protocol

There were five ways in which our study deviated from our pre-registered

protocol:

- We recruited participants from Prolific Academic rather than MTurk.

We made this switch given growing concerns about the presence of bots and

deteriorating data quality on MTurk (Chmielewski & Kucker, 2020).

- We reduced participant payments from $3 to £1.50. Given the duration

of our study (typical completion time of 5–8 minutes), $3 would have been

an unusually high rate-of-payment on the Prolific platform (typical

studies pay £0.10 to £0.20 per minute).

- Our original plan was to administer multiple sessions per day. We

decided to run only one session per day during the peak usage hour on

Prolific

(https://researcher-help.prolific.co/hc/en-gb/articles/360011657739-When-are-Prolific-participants-most-active-).

The purpose of this change was to increase the number of available

participants, and to maximize the chance that participants would be

successfully matched in real-time.

- We randomized whether mediation questions were presented before (or

after) the prisoners dilemma. Originally, we planned to randomize this

factor at the level of dyad. Instead, we decided to implement this

randomization at the session level.

- After we collected data for sessions 1–3 of the study, a participant

informed us about a potential problem. Some participants were able to view

their condition assignments in the Internet browser’s URL bar. We paused

further data collection until we were able to fix this issue. Note that

excluding participants from the first three sessions did not change any of

our results.

3 Results

3.1 Primary Analyses

Our primary analyses focused on the effects of pre-decision matching and

immediate feedback on cooperation, social distance, and feelings of

aversive uncertainty. Descriptive statistics for these variables by

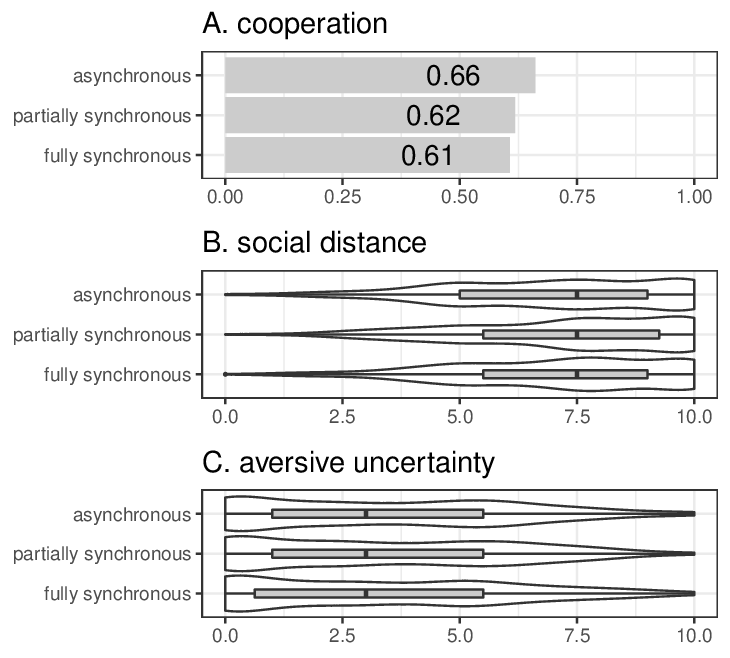

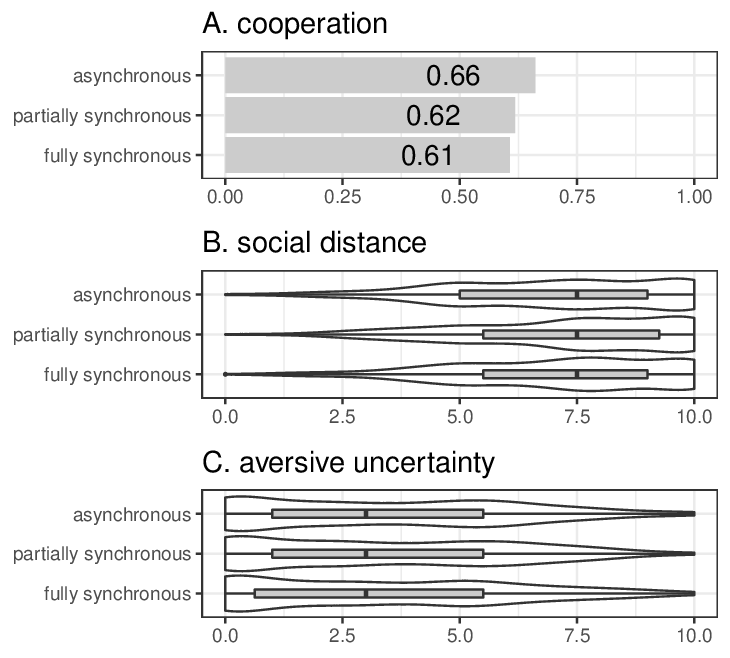

condition are shown in Figure 1. Following our analysis plan, we conducted

two-tailed tests with α = .05.

| Figure 1: Levels of cooperation (A), social distance (B), and aversive

uncertainty (C) by experimental condition. Plots B and C use violin plots

and box plots to show distributions of responses within each condition.

Bold lines indicate median responses, and box widths indicate values that

lie within the first and third quartiles. |

Pre-decision matching.

Our first prediction was that pre-decision matching would increase

cooperation via reduced social distance. First, we used a logistic

regression to compare the rates of cooperation in the asynchronous and

partially synchronous conditions (−.5 = asynchronous; +.5 = partially

synchronous). Pre-decision matching had no significant effect on

cooperation (b = −0.19, SE = 0.15, p = .20). Then, we compared the levels

of social distance between the two conditions. Pre-decision matching did

not significantly affect social distance (b = 0.28, SE = 0.16, p = .084).

Social distance was, however, negatively associated with cooperation (b =

−0.08, SE = 0.03, p = .012).
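To make the contrast coding concrete: when a logistic regression contains a single two-level predictor coded −.5 and +.5, the slope equals the log odds ratio between the two conditions, and its Wald standard error is the square root of the summed reciprocal cell counts. The sketch below illustrates this identity. The cell counts are invented for illustration and are not the study's data; the function name is hypothetical.

```python
import math


def contrast_logit(coop_a, defect_a, coop_b, defect_b):
    """Slope, SE, and two-tailed p for a predictor coded -.5 (group a) / +.5 (group b).

    With one binary predictor the maximum-likelihood slope is the log odds
    ratio, so no iterative fitting is needed.
    """
    b = math.log(coop_b / defect_b) - math.log(coop_a / defect_a)
    se = math.sqrt(1 / coop_a + 1 / defect_a + 1 / coop_b + 1 / defect_b)
    z = b / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed normal p-value
    return b, se, p


# Hypothetical counts only: cooperators and defectors in each condition
b, se, p = contrast_logit(coop_a=210, defect_a=190, coop_b=200, defect_b=200)
print(round(b, 2), round(se, 2), round(p, 2))
```

A negative slope indicates lower odds of cooperation in the condition coded +.5.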

Immediate feedback.

Our second hypothesis was that immediate feedback would reduce feelings of

uncertainty and lead to increased cooperation. We compared the rates of

cooperation in the partially synchronous and fully synchronous conditions (−.5

= partially synchronous; +.5 = fully synchronous). Immediate feedback had

no significant effect on cooperation (b = −0.05, SE = 0.15, p = .75).

Then, we compared the levels of aversive uncertainty across conditions.

Immediate feedback had no significant effect on aversive uncertainty (b =

−0.04, SE = 0.20, p = .83). Aversive uncertainty was not significantly

associated with cooperation (b = 0.05, SE = 0.03, p = .052).

Social Value Orientation.

Our third hypothesis was that the effects of synchronous experiments would

be moderated by individual differences in SVO. More specifically, we

expected that pre-decision matching and immediate feedback would have

stronger effects on cooperation for individuals with stronger prosocial

orientations. To test this prediction, we estimated a series of regression

models predicting cooperation, social distance, and aversive uncertainty.

Each model included the following variables as predictors: pre-decision

(vs. post-decision) matching; immediate (vs. delayed) feedback; Social

Value Orientation angle (mean centered); and two SVO by experimental

condition interaction terms. The results are reported in Table 3.

Reassuringly, SVO was positively correlated with cooperation, but we found

no support for the predicted SVO-by-condition interactions.

| Table 3: The interactive effects of Social Value Orientation, pre-decision matching,

and immediate feedback. |

| | Cooperation | Social distance | Aversive uncertainty |

| | b (SE) | p | b (SE) | p | b (SE) | p |

| Constant | 0.57 (0.08) | .29 | 7.08 (0.08) | <.001 | 3.42 (0.10) | <.001 |

| SVO | 0.11 (0.02) | <.001 | −0.03 (0.02) | .047 | −0.01 (0.02) | .75 |

| Pre-decision matching | −0.17 (0.15) | .27 | 0.28 (0.16) | .086 | −0.05 (0.19) | .81 |

| Immediate feedback | −0.05 (0.15) | .74 | −0.16 (0.16) | .32 | −0.08 (0.20) | .70 |

| SVO × matching | 0.04 (0.03) | .20 | 0.02 (0.03) | .60 | 0.04 (0.04) | .27 |

| SVO × feedback | −0.00033 (0.03) | .99 | −0.03 (0.03) | .31 | 0.04 (0.04) | .35 |

3.2 Secondary Analyses

Robustness checks

To test the robustness of our primary analyses, we conducted two sets of

alternative analyses: First, we estimated models including participant

demographics (i.e., age, gender, English proficiency, and income) as

covariates. Then, we estimated the models outlined in the previous section

using only participants who correctly answered all four comprehension

questions. Results were consistent with our primary analyses (see

Appendix).

Post-decision measures

We also conducted a series of exploratory analyses of the effects of

pre-decision matching and immediate feedback on four post-decision

measures: expectations of cooperation, confidence in expectations,

anticipated satisfaction, and anticipated regret. The results are reported

in Table 4. There were no significant effects of either pre-decision

matching or immediate feedback.

| Table 4: The effects of pre-decision matching and immediate feedback on

post-decision measures. |

| | Expectation | Confidence | Anticipated satisfaction | Anticipated regret |

| | b (SE) | p | b (SE) | p | b (SE) | p | b (SE) | p |

| Pre-decision matching | −0.13 (0.16) | .40 | −0.13 (0.17) | .44 | 0.19 (0.13) | .16 | 0.21 (0.20) | .29 |

| Immediate feedback | 0.04 (0.16) | .78 | 0.11 (0.17) | .54 | −0.04 (0.14) | .74 | −0.06 (0.20) | .78 |

Post-feedback measures

Next, we examined the effects of pre-decision matching and immediate

feedback on six outcomes measured after participants learned about the

outcome of the prisoners dilemma: behavior in a mini dictator game;

experienced satisfaction with the outcome of the prisoners dilemma;

experienced regret about choices in the prisoners dilemma; enjoyment of

the experiment; the perceived fairness of the experiment; and the

perceived realism of the experiment. We also included participant payoff

(the amount of points earned in the prisoners dilemma, scaled from 0

points = −1 to 400 points = +1) as a predictor, as well as two payoff by

experimental condition interaction terms. The results are reported in

Table 5. Pre-decision matching and immediate feedback had little, if any,

effect on post-feedback outcomes. Out of 24 regression coefficients

involving experimental condition, one was significant at p < .05.

Not surprisingly, participant payoff had large effects on

post-feedback outcomes: Participants who earned more in the prisoners

dilemma were more altruistic in the dictator game; were more satisfied

with their outcomes and had less regret about their choices; and enjoyed

the experiment more and perceived it as more fair.
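To put the single significant coefficient in context, the following sketch computes how many nominally significant results would be expected by chance among 24 tests at α = .05, and the probability of observing at least one. It assumes that the 24 tests are independent and that every null hypothesis is true; the coefficients in Table 5 share participants and predictors, so this is only a rough benchmark.

```python
from math import comb

N_TESTS = 24   # condition-related coefficients in Table 5 (4 rows x 6 outcomes)
ALPHA = 0.05


def prob_at_least(k, n, alpha):
    """P(at least k of n independent tests are significant) when all nulls are true."""
    return sum(comb(n, i) * alpha**i * (1 - alpha) ** (n - i) for i in range(k, n + 1))


expected_false_positives = N_TESTS * ALPHA
p_one_or_more = prob_at_least(1, N_TESTS, ALPHA)
print(round(expected_false_positives, 1), round(p_one_or_more, 2))
```

Under these assumptions, roughly one significant coefficient is what chance alone would produce, and at least one occurs in about 71% of such sets of tests.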

| Table 5: The effects of payoff and experimental condition on post-feedback measures. |

| | Dictator game | Experienced satisfaction | Experienced regret | Enjoyment of experiment | Perceived fairness of experiment | Perceived realism of experiment |

| | b (SE) | b (SE) | b (SE) | b (SE) | b (SE) | b (SE) |

| Constant | 0.98 (0.09)*** | 3.00 (0.09)*** | −2.73 (0.13)*** | 8.24 (0.07)*** | 8.04 (0.08)*** | 5.50 (0.11)*** |

| Pre-decision matching | −0.08 (0.19) | −0.05 (0.20) | 0.38 (0.26) | −0.08 (0.14) | −0.14 (0.16) | 0.06 (0.24) |

| Immediate feedback | 0.07 (0.19) | −0.17 (0.19) | −0.11 (0.25) | −0.05 (0.13) | 0.07 (0.16) | 0.06 (0.24) |

| Payoff | 1.35 (0.16)*** | 4.67 (0.16)*** | −2.29 (0.22)*** | 0.72 (0.11)*** | 0.39 (0.14)** | 0.17 (0.20) |

| Payoff × matching | 0.07 (0.33) | −0.00 (0.35) | 0.23 (0.47) | 0.25 (0.24) | 0.43 (0.29) | 0.35 (0.42) |

| Payoff × feedback | 0.38 (0.31) | −0.10 (0.32) | −0.24 (0.43) | −0.01 (0.23) | −0.59 (0.27)* | −0.03 (0.39) |

| *** p<.001; ** p<.01; * p<.05. |

Waiting time

To conclude, we investigated whether waiting time (in the two pre-decision

matching conditions, N = 787) was correlated with cooperation, perceived

closeness, aversive uncertainty, or perceived realism. To account for the

non-normality of the waiting time data, we log-transformed it. There were

no significant correlations (r’s < .05, p’s > .18).
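The waiting-time analysis can be sketched as follows. This is an illustration only: it assumes a natural-log transform, strictly positive waiting times, and Pearson correlations, none of which is specified in detail in the text. The helper names `pearson_r` and `log_wait_correlation` are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def log_wait_correlation(wait_times, outcome):
    """Correlate log-transformed waiting times with an outcome measure.

    Assumes every waiting time is > 0 (math.log raises ValueError otherwise);
    whether the original analysis added an offset before taking logs is unknown.
    """
    log_wait = [math.log(w) for w in wait_times]
    return pearson_r(log_wait, outcome)
```

The log transform compresses the long right tail that waiting-time data typically show, so a few very long waits do not dominate the correlation.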

4 Discussion

Researchers often use asynchronous online experiments to examine the

psychological processes underlying cooperation. However, it is unclear how

asynchronous interactions differ psychologically from synchronous

interactions, where participants interact in real-time and receive

immediate feedback. We examined the effects of two salient features of

synchronous experiments, pre-decision matching and immediate feedback, on

cooperation in the prisoners dilemma. We found that pre-decision matching

and immediate feedback had no significant effects on cooperation (or on

how participants perceived the interaction). The present results suggest

that synchronous design features have little effect on behavior in online

experiments measuring cooperation.

4.1 Synchronous Online Experiments

In our study, we tested three hypotheses about the effects of synchronicity

on cooperation: Our first hypothesis was that pre-decision matching would

increase cooperation via reduced social distance. Pre-decision matching

had no effects on cooperation or perceived social distance; however,

social distance was associated with decreased cooperation. Previous

studies have found consistent evidence that people help and cooperate with

socially proximate interaction partners (Jones & Rachlin, 2006, 2009).

Here, we found that merely assigning participants to a specific

interaction partner is not sufficient to create feelings of social

proximity.

Our second hypothesis was that immediate feedback would increase

cooperation by reducing participants’ feelings of aversive uncertainty

about the possibility of exploitation. Immediate feedback had no effects

on cooperation or aversive uncertainty, and aversive uncertainty was not

significantly associated with cooperation. Introducing a (one-week) delay

in outcome feedback does not substantially affect behavior in the prisoners

dilemma.

Our third hypothesis was that SVO would moderate the effects of

pre-decision matching and immediate feedback on behavior. We found that

SVO was correlated with cooperation and negatively correlated with social

distance. However, SVO did not moderate the effects of synchronicity. This

is unsurprising, as participants were generally insensitive to the

differences between experimental conditions.

We also examined the effects of synchronicity on perceptions of the realism

of the experiment: Interestingly, having participants engage in real-time

interactions had no significant effects on the perceived realism of the

experiment. Responses to our “perceived realism” question were relatively

close to the midpoint, 5 out of 10, across all conditions; and

participants did not feel socially close to their interaction partners. In

terms of realism, synchronous experiments do not convey much advantage

over asynchronous experiments. This may point to a general limitation of experiments using economic games, rather than a specific limitation of

asynchronous experiments. Other design features likely have larger effects

on the extent to which participants perceive a social dilemma experiment

as a real interaction. To increase realism, researchers may need to

provide participants with identifying information about their interaction

partners (Evans & Krueger, 2016), or allow participants to communicate

directly during the experiment (Dawes et al., 1977).

4.2 Limitations

In our study, we measured behavior using one social dilemma, the dyadic

prisoners dilemma. We cannot rule out the possibility that synchronous

designs would matter more in other settings. For example, synchronicity

could matter more in sequential (rather than simultaneous) interactions,

such as the trust game or the ultimatum game. Previous research has argued

that contextual cues play an important role in activating the norm of

reciprocity in sequential exchanges (McCabe et al., 2003). On the other

hand, research suggests that people show high levels of consistency in

their behavior across different types of experimental games (Yamagishi et

al., 2013). Researchers interested in pursuing these questions further

should be prepared for the possibility that partner matching and feedback

effects are small and relatively difficult to detect.

Additionally, our manipulation of delayed feedback focused on only one time

interval: immediate feedback versus a one-week delay. We focused on the

interval of one week because this is a typical delay of payment in online

experiments. However, we cannot rule out the possibility that longer time

delays (one month or longer) could have effects on aversive uncertainty

and cooperation. It is also important to consider whether our choice of

payoff stakes affected our results: We used standard payoff stakes for

online experiments (£0.50 to £4.00), which were equivalent to the payments

participants would receive for 5 to 40 minutes of work on Prolific. It is

possible, but not likely, that larger payoff stakes would increase

participants’ sensitivity to pre-decision matching and delayed feedback

(Amir et al., 2012).

Finally, it is important to note that our results may have been influenced

by participant non-naivete (Chandler et al., 2014). Previous studies have

raised the possibility that experimental manipulations have weaker effects

on participants once they have become familiar with a paradigm (Chandler

et al., 2014; Rand et al., 2014). This is a general problem for online

experiments conducted on platforms like Prolific or MTurk. Pre-decision

matching and immediate feedback may have larger effects on participants

who are generally unfamiliar with social dilemmas. Moreover, some

participants may have been skeptical about the veracity of our study.

Concerns about deception and the contamination of shared subject pools are

unavoidable (Hertwig & Ortmann, 2008). Indeed, a small number of

participants sent messages indicating that they did not believe they were

actually partnered with other participants. However, we found no evidence

that belief in the realism of the study was correlated with behavior in

the prisoners dilemma.

4.3 Advice for Social Dilemmas Researchers

At this point, researchers interested in social dilemmas may wonder whether

it is worthwhile to conduct synchronous experiments: On the positive side,

our study demonstrates that it is feasible to conduct large-scale studies

involving real-time partner matching and multiple time measurements.

Nearly all participants were successfully matched to partners (98%) and

participant retention was relatively high across the two waves of the

study (80%). At the same time, synchronous experiments also require a

substantial time investment (compared to asynchronous experiments

conducted using Qualtrics, or similar software).

While we did not find any effects of synchronicity on behavior, allowing

for the possibility of synchronous interactions opens up new potential

research questions. The study of social dilemmas has largely focused on

anonymous, one-shot interactions without communication and with minimal social

information (Thielmann et al., 2020; Van Lange et al., 2013). However,

interactions with strangers account for a relatively small percentage

(~10%) of daily social interactions (Columbus et al., 2021). Arguably,

this focus has been influenced by the relative ease of running

asynchronous experiments. As our study demonstrates, software packages

such as oTree (Chen et al., 2016) are making it easier for researchers to

conduct high-powered studies of cooperation that go beyond

zero-acquaintance interactions.

4.4 Conclusion

How does synchronicity influence perception and behavior in online

experiments measuring cooperation? We found that pre-decision matching and

immediate feedback had no significant effects on behavior in the prisoners

dilemma or on how participants perceived the interaction. The present

results suggest that synchronous designs and asynchronous designs can

produce similar results in studies of online cooperation.

5 References

Amir, O., Rand, D. G., & Gal, Y. K. (2012). Economic games on the

internet: The effect of $1 stakes. PLoS ONE, 7(2),

e31461.

Arechar, A. A., Gächter, S., & Molleman, L. (2018). Conducting interactive

experiments online. Experimental Economics, 21(1),

99–131.

Balliet, D., Wu, J., & De Dreu, C. K. (2014). Ingroup favoritism in

cooperation: A meta-analysis. Psychological Bulletin,

140(6), 1556–1581.

Birnbaum, M. H. (Ed.). (2000). Psychological experiments on the internet.

San Diego: Academic Press.

Bogaert, S., Boone, C., & Declerck, C. (2008). Social value orientation

and cooperation in social dilemmas: A review and conceptual model.

British Journal of Social Psychology, 47(3), 453–480.

Brandts, J., & Charness, G. (2011). The strategy versus the

direct-response method: a first survey of experimental comparisons.

Experimental Economics, 14(3), 375–398.

Buhrmester, M., Kwang, T., & Gosling, S. D. (2011).

Amazon’s Mechanical Turk: A new source of inexpensive,

yet high-quality, data? Perspectives on Psychological

Science, 6(1), 3–5.

Casari, M., & Cason, T. N. (2009). The strategy method lowers measured

trustworthy behavior. Economics Letters, 103(3),

157–159.

Chandler, J., Mueller, P., & Paolacci, G. (2014). Nonnaïveté among Amazon

Mechanical Turk workers: Consequences and solutions for behavioral

researchers. Behavior Research Methods, 46(1), 112–130.

Chandler, J., Paolacci, G., Peer, E., Mueller, P., & Ratliff, K. A.

(2015). Using nonnaive participants can reduce effect sizes.

Psychological Science, 26(7), 1131–1139.

Chen, D. L., Schonger, M., & Wickens, C. (2016). oTree—An open-source

platform for laboratory, online, and field experiments. Journal of

Behavioral and Experimental Finance, 9, 88–97.

Chmielewski, M., & Kucker, S. C. (2020). An MTurk crisis? Shifts in data

quality and the impact on study results. Social Psychological and

Personality Science, 11(4), 464–473.

Columbus, S., Molho, C., Righetti, F., & Balliet, D. (2021).

Interdependence and cooperation in daily life. Journal of

Personality and Social Psychology, 120(3), 626–650.

Dawes, R., McTavish, J., & Shaklee, H. (1977). Behavior, communication,

and assumptions about other people’s behavior in a

commons dilemma situation. Journal of Personality and Social

Psychology, 35(1), 1–11.

Dawes, R. M. (1980). Social dilemmas. Annual Review of

Psychology, 31(1), 169–193.

Dorrough, A. R., & Glöckner, A. (2019). A cross-national analysis of sex

differences in prisoner’s dilemma games. British

Journal of Social Psychology, 58(1), 225–240.

Epstein, Z., Peysakhovich, A., & Rand, D. G. (2016). The good, the bad,

and the unflinchingly selfish: Cooperative decision-making can be

predicted with high accuracy when using only three behavioral types.

Proceedings of the 2016 ACM Conference on Economics and Computation,

Evans, A. M., & Krueger, J. I. (2016). Bounded prospection in dilemmas of

trust and reciprocity. Review of General Psychology,

20(1), 17–28.

Faul, F., Erdfelder, E., Buchner, A., & Lang, A.-G. (2009). Statistical

power analyses using G*Power 3.1: Tests for correlation and regression

analyses. Behavior Research Methods, 41(4), 1149–1160.

Goette, L., Huffman, D., & Meier, S. (2006). The impact of group

membership on cooperation and norm enforcement: Evidence using random

assignment to real social groups. American Economic

Review, 96(2), 212–216.

Gosling, S. D., Vazire, S., Srivastava, S., & John, O. P. (2004). Should

we trust web-based studies? A comparative analysis of six preconceptions

about internet questionnaires. American Psychologist,

59(2), 93–104.

Hauser, O. P., Hendriks, A., Rand, D. G., & Nowak, M. A. (2016). Think

global, act local: Preserving the global commons. Scientific

Reports, 6, 36079.

Hertwig, R., & Ortmann, A. (2008). Deception in experiments: Revisiting

the arguments in its defense. Ethics & Behavior, 18(1),

59–92.

Horton, J. J., Rand, D. G., & Zeckhauser, R. J. (2011). The online

laboratory: Conducting experiments in a real labor market.

Experimental Economics, 14(3), 399–425.

Joireman, J. A., Van Lange, P. A., & Van Vugt, M. (2004). Who cares about

the environmental impact of cars? Those with an eye toward the future.

Environment and Behavior, 36(2), 187–206.

Jones, B., & Rachlin, H. (2006). Social discounting. Psychological

Science, 17(4), 283–286.

Jones, B., & Rachlin, H. (2009). Delay, probability, and social

discounting in a public goods game. Journal of the Experimental

Analysis of Behavior, 91(1), 61–73.

Kogler, C., Mittone, L., & Kirchler, E. (2016). Delayed feedback on tax

audits affects compliance and fairness perceptions. Journal of

Economic Behavior & Organization, 124(100), 81–87.

Kogut, T., & Ritov, I. (2005a). The “identified victim” effect: An

identified group, or just a single individual? Journal of

Behavioral Decision Making, 18(3), 157–167.

Kogut, T., & Ritov, I. (2005b). The singularity effect of identified

victims in separate and joint evaluations. Organizational Behavior

and Human Decision Processes, 97(2), 106–116.

Kortenkamp, K. V., & Moore, C. F. (2006). Time, uncertainty, and

individual differences in decisions to cooperate in resource dilemmas.

Personality and Social Psychology Bulletin, 32(5),

603–615.

Lee, S., & Feeley, T. H. (2016). The identifiable victim effect: A

meta-analytic review. Social Influence, 11(3), 199–215.

Liberman, V., Samuels, S. M., & Ross, L. (2004). The name of the game:

Predictive power of reputations versus situational labels in determining

prisoner’s dilemma game moves. Personality and Social Psychology

Bulletin, 30(9), 1175–1185.

Loewenstein, G. (1987). Anticipation and the valuation of delayed

consumption. The Economic Journal, 97(387), 666–684.

McCabe, K. A., Rigdon, M. L., & Smith, V. L. (2003). Positive reciprocity

and intentions in trust games. Journal of Economic Behavior &

Organization, 52(2), 267–275.

Muehlbacher, S., Mittone, L., Kastlunger, B., & Kirchler, E. (2012).

Uncertainty resolution in tax experiments: Why waiting for an audit

increases compliance. The Journal of Socio-Economics,

41(3), 289–291.

Murphy, R. O., & Ackermann, K. A. (2014). Social value orientation:

Theoretical and measurement issues in the study of social preferences.

Personality and Social Psychology Review, 18(1), 13–41.

Murphy, R. O., Rapoport, A., & Parco, J. E. (2006). The breakdown of

cooperation in iterative real-time trust dilemmas. Experimental

Economics, 9(2), 147–166.

Nishi, A., Christakis, N. A., & Rand, D. G. (2017). Cooperation, decision

time, and culture: Online experiments with American and Indian

participants. PLoS ONE, 12(2), e0171252.

Rand, D., Peysakhovich, A., Kraft-Todd, G., Newman, G., Wurzbacher, O.,

Nowak, M., & Greene, J. (2014). Social heuristics shape intuitive

cooperation. Nature Communications, 5, 3677.

Romano, A., Balliet, D., Yamagishi, T., & Liu, J. H. (2017). Parochial

trust and cooperation across 17 societies. Proceedings of the

National Academy of Sciences, 114(48), 12702–12707.

Small, D. A., & Loewenstein, G. (2003). Helping a victim or helping the

victim: Altruism and identifiability. Journal of Risk and

Uncertainty, 26(1), 5–16.

Small, D. A., Loewenstein, G., & Slovic, P. (2007). Sympathy and

callousness: The impact of deliberative thought on donations to

identifiable and statistical victims. Organizational Behavior and

Human Decision Processes, 102(2), 143–153.

Thielmann, I., Spadaro, G., & Balliet, D. (2020). Personality and

prosocial behavior: A theoretical framework and meta-analysis.

Psychological Bulletin, 146(1), 30–90.

Van Lange, P. A. (1999). The pursuit of joint outcomes and equality in

outcomes: An integrative model of social value orientation.

Journal of Personality and Social Psychology, 77(2),

337–349.

Van Lange, P. A., Joireman, J., Parks, C. D., & Van Dijk, E. (2013). The

psychology of social dilemmas: A review. Organizational Behavior

and Human Decision Processes, 120(2), 125–141.

Yamagishi, T., Li, Y., Takagishi, H., Matsumoto, Y., & Kiyonari, T.

(2014). In search of Homo economicus. Psychological

Science, 25(9), 1699–1711.

Yamagishi, T., Mifune, N., Li, Y., Shinada, M., Hashimoto, H., Horita, Y.,

Miura, A., Inukai, K., Tanida, S., & Kiyonari, T. (2013). Is behavioral

pro-sociality game-specific? Pro-social preference and expectations of

pro-sociality. Organizational Behavior and Human Decision

Processes, 120(2), 260–271.

Appendix

Experiment Materials

Prisoners Dilemma Instructions (Screen 1)

In the next part of this study, you will play a game.

You will be randomly paired with another participant. Each of you

simultaneously and privately chooses Keep or Transfer. Your payoffs will

be determined by your choice and the other participant’s choice:

In each cell, the amount (in points) to the left is the payoff for you and

the amount to the right is the payoff for the other participant.

| | | The Other Participant |

| | | Transfer | Keep |

| You: | Transfer | 200 points, 200 points | 0 point, 300 points |

| | Keep | 300 points, 0 point | 100 points, 100 points |

You will receive a bonus payment based on the total number of points you

earn. 100 points = $1.00.

Before you continue, please answer the following comprehension questions:

If both you and the other participant choose “Transfer”, how many points

will you receive? [0, 100, 200, 300]

If both you and the other participant choose “Keep”, how many points will

you receive? [0, 100, 200, 300]

If you choose “Keep” and the other participant choose “Transfer”, how many

points will you receive? [0, 100, 200, 300]

If you choose “Transfer” and the other participant choose “Keep”, how many

points will you receive? [0, 100, 200, 300]

Time Delay Instructions (Screen 2)

[Pre-decision matching with Immediate feedback]

You will learn about the outcome of the game as soon as you and the other

participant make your decisions.

[Pre-decision matching with Delayed feedback]

[Post-decision matching with Delayed feedback]

You will learn about the outcome of the game in one week (when we complete

data collection for this experiment).

The date today is X. This means you will be contacted one week from today,

on X.

Partner matching screen (Screen 3)

[Participants can not proceed until their partner has also read and

progressed through the instruction screen]

Decision Screen (Screen 4)

| | | The Other Participant |

| | | Transfer | Keep |

| You: | I will transfer | 200 points, 200 points | 0 point, 300 points |

| | I will keep | 300 points, 0 point | 100 points, 100 points |
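The payoff matrix shown on the instruction and decision screens can be written as a lookup table. The sketch below is illustrative only: the names `PAYOFFS`, `payoff`, and `is_prisoners_dilemma` are assumptions and are not part of the experiment software.

```python
# Points for (your choice, other's choice) -> (your payoff, other's payoff),
# copied from the matrix shown to participants.
PAYOFFS = {
    ("Transfer", "Transfer"): (200, 200),
    ("Transfer", "Keep"): (0, 300),
    ("Keep", "Transfer"): (300, 0),
    ("Keep", "Keep"): (100, 100),
}


def payoff(own_choice, other_choice):
    """Return the points earned by the participant making own_choice."""
    return PAYOFFS[(own_choice, other_choice)][0]


def is_prisoners_dilemma():
    """Check the ordering T > R > P > S that defines a prisoner's dilemma."""
    temptation = payoff("Keep", "Transfer")   # defect against a cooperator
    reward = payoff("Transfer", "Transfer")   # mutual cooperation
    punishment = payoff("Keep", "Keep")       # mutual defection
    sucker = payoff("Transfer", "Keep")       # cooperate against a defector
    return temptation > reward > punishment > sucker


if __name__ == "__main__":
    print(payoff("Keep", "Transfer"), is_prisoners_dilemma())
```

With these values, Keep earns 100 points more than Transfer whatever the other participant chooses, yet mutual Transfer (200 each) beats mutual Keep (100 each).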

Additional Analyses

We tested the effects of including covariates and excluding participants

who did not pass our comprehension checks on our results. Covariate models

also included age, gender, income, and English proficiency as predictors:

There were fourteen income levels (ranging from “less than £10,001 per

year” to “over £100,000 per year”) and four levels of English proficiency

(fluent, advanced, basic, poor); and these two variables were entered into

our models using dummy coding. The results across models are reported in

Table 6.
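Dummy coding of a categorical covariate, as described above, can be sketched as follows. The choice of "fluent" as the reference category and the helper name `dummy_code` are assumptions made for illustration; the text does not state which level served as the reference.

```python
def dummy_code(values, levels, reference):
    """Return one 0/1 indicator column per non-reference level.

    values    -- observed category for each participant
    levels    -- all possible categories, in a fixed order
    reference -- the omitted baseline category (an assumption here)
    """
    if reference not in levels:
        raise ValueError("reference must be one of the levels")
    unknown = set(values) - set(levels)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    columns = {}
    for level in levels:
        if level == reference:
            continue  # the reference level gets no column
        columns[level] = [1 if v == level else 0 for v in values]
    return columns


if __name__ == "__main__":
    proficiency_levels = ["fluent", "advanced", "basic", "poor"]
    sample = ["fluent", "basic", "poor", "advanced", "fluent"]  # made-up data
    print(dummy_code(sample, proficiency_levels, reference="fluent"))
```

A variable with k levels yields k − 1 indicator columns, so the four proficiency levels add three predictors and the fourteen income levels add thirteen.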

| Table 6: Analyses including covariates and excluding participants who did not pass comprehension checks |

| | Main analyses | Covariates included | Exclude incorrect comprehension resps. |

| | b | SE | p | b | SE | p | b | SE | p |

Effect of pre-decision matching on cooperation | −0.19 | 0.15 | .20 | −0.14 | 0.23 | .53 | −0.07 | 0.17 | .70 |

Effect of immediate feedback on cooperation | −0.05 | 0.15 | .75 | 0.03 | 0.19 | .87 | −0.09 | 0.17 | .59 |

| SVO-by-condition interactions: | | | | | |

SVO × matching | 0.04 | 0.03 | .21 | 0.04 | 0.04 | .23 | 0.06 | 0.04 | .14 |

SVO × feedback | 0.00013 | 0.03 | 1 | −0.01 | 0.04 | .86 | −0.02 | 0.04 | .69 |
