Judgment and Decision Making, Vol. 14, No. 4, July, 2019, pp. 488-512

Information search in everyday decisions: The generalizability of the attraction search effect

Sophie E. Scharf* # Monika Wiegelmann$

Arndt Bröder$ |

The recently proposed integrated coherence-based decisions and

search model (iCodes) makes predictions for search behavior in

multi-attribute decision tasks beyond those of classic decision-making

heuristics. More precisely, it predicts the Attraction Search

Effect that describes a tendency to search for information for the

option that is already attractive given the available evidence. To date,

the Attraction Search Effect has been successfully tested using a

hypothetical stock-market game that was highly stylized and specifically

designed to be highly diagnostic. In three experiments, we tested whether

the Attraction Search Effect generalizes to different semantic contexts,

different cue-value patterns, and a different presentation format than

the classic matrix format. Across all experiments, we find evidence for

information-search behavior that matches iCodes’s information-search

prediction. Therefore, our results corroborate not only the

generalizability of the Attraction Search Effect in various contexts but

also the inherent process assumptions of iCodes.

Keywords: attraction search effect, information search, generalizability

1 Introduction

When faced with a decision, we often have to search for information that

enables us to weigh the advantages and disadvantages of each option against

each other. Information search is especially important, if the decision at

hand has non-trivial consequences, such as when buying a car, deciding on a

job offer, or taking out insurance. Despite the importance of information

search for decision making, psychological decision-making models have

usually focused more on the processes of integrating information rather

than the processes behind searching for information

(Gigerenzer et al., 2014).

Aware of this lack of specified information-search process models,

(Jekel et al., 2018) recently extended the parallel constraint

satisfaction model for decision making

(PCS-DM; Glöckner et al., 2014) to include information search in

multi-attribute decision tasks. The new integrated coherence-based decision

and search model (iCodes) makes detailed predictions for the

information-search process in multi-attribute decisions

(Jekel et al., 2018). One core prediction of iCodes is the

Attraction Search Effect, which states that people tend to search

for information about the option that is currently supported by the already

available evidence. The Attraction Search Effect and iCodes itself have

received initial support from three experiments and the reanalyses of five

already published experiments (Jekel et al., 2018).

The original experiments by (Jekel et al., 2018) used a

probabilistic-inference task presented as a hypothetical stock-market game

with cue-value patterns that were specifically designed to be highly

diagnostic for the Attraction Search Effect. In our view, it is essential

to demonstrate that the support for the Attraction Search Effect found by

(Jekel et al., 2018) was not due to arbitrary design choices in their

studies. The goal of the present work is to test the generalizability of

the Attraction Search Effect to different settings. With data from three

online experiments, we test whether the Attraction Search Effect replicates

in different, more diverse semantic context settings. As a next step, we investigate whether the

Attraction Search Effect can be found with randomized cue-value patterns as

well. Finally, we evaluate whether the Attraction Search Effect also

emerges when information is not presented in a classic mouselab-type

setting (first introduced by Johnson et al., 1989, referred to as mouselab in the

following) but in a more realistic, simulated online

shop. Since iCodes is a new model, demonstrating that its core prediction

generalizes to different settings strengthens the relevance and reach of

the model.

In the following paragraphs, we will first take a closer look at iCodes’s prediction of information search in general and the Attraction Search Effect specifically. After presenting already existing evidence for iCodes’s core prediction, we will argue why generalizability is an important issue and present data from three experiments that test exactly this generalizability of the Attraction Search Effect. In these three studies, we gradually move away from the original study setup by (a) demonstrating the Attraction Search Effect in other semantic domains, (b) extending the range of domains and relaxing the cue-value patterns, and (c) moving away from the matrix format in a simulated online-shop setting.

2 The integrated, coherence-based decision and search model

The original PCS-DM is a network model that successfully predicts choices,

decision times, and decision confidence for multi-attribute decisions in

different contexts

(Glöckner et al., 2012,Glöckner et al., 2014,GlöcknerBetsch, 2012,GlöcknerHodges, 2010,Glöckner et al., 2010).

However, one shortcoming of PCS-DM is that it models information

integration only and is thus applicable only to decision situations that do

not require information search (Marewski, 2010).

Therefore, (Jekel et al., 2018) have recently extended PCS-DM to include

information-search processes. This new model shares in principle the same

basic network structure and the same assumptions regarding the underlying

decision process with its predecessor PCS-DM. The crucial extension is an

additional layer of nodes that is included in the network structure. This

layer represents the cue values present in the decision situation. In the

following paragraphs, we will introduce how iCodes specifies the

information-search process and how it predicts the Attraction Search

Effect. For the exact model specification and formalization, please refer

to (Jekel et al., 2018).

2.1 The prediction of information search in iCodes

In a multi-attribute decision task, the decision maker is presented with at

least two options for which information is provided in the form of

attributes or cues (HarteKoele, 2001). Depending on the specific

task, the goal of the decision maker is to either choose the option that

maximizes an objective criterion value (Glöckner et al., 2010),

such as buying the most successful stock, or to choose the option that

maximizes a subjective criterion value (Payne et al., 1993), such as

buying the preferred sweater. The cues provide information about the

options in form of cue values that can be positive evaluations of the

respective option, often represented by a "+", or negative evaluations,

often represented by a "−". In probabilistic-inference tasks, the cues

usually differ in their validity, that is, they differ in how often they

correctly evaluate an option as better than the other option(s) on the

objective criterion (GigerenzerGoldstein, 1996). Besides positive and

negative evaluations, cue values can also be hidden and have to be searched

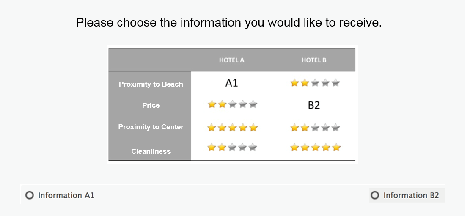

for, which is represented by a "?". An example trial of such a

multi-attribute decision task with two options and two cues is shown in

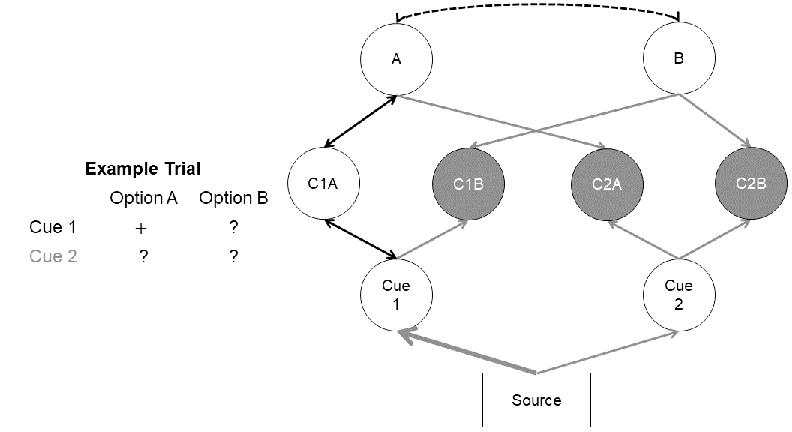

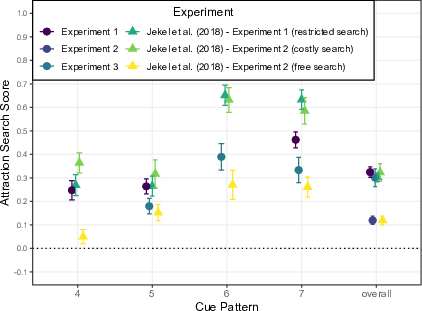

Figure 1.

| Figure 1: The translation of a probabilistic-inference

task into the network structure of iCodes. In this example task, the

first cue, which is more valid than the second cue, makes a positive

statement regarding Option A and all other information is still

hidden. The options are represented by the option nodes in the top layer

of the network and are connected by an inhibitory, bidirectional link

(dashed line). The cue values are included in the next layer of nodes

where the white node represents the already available information and

the gray nodes represent still concealed information. Below the layer of

cue-value nodes is the layer of cue nodes. The source node on the bottom

of the network initializes the spread of activation. The activation the

cue nodes receive is proportional to their respective validities, as

indicated here by the thickness of the link. The black arrows in the

network represent bidirectional links, whereas gray arrows represent

unidirectional links. Adapted from "A new and unique prediction for

cue-search in a parallel-constraint satisfaction network model: The

attraction search effect," by M. Jekel, A. Glöckner, and A. Bröder,

2018, Psychological Review, 125, p. 746. Copyright 2018 by the

American Psychological Association. |

The information in such a multi-attribute decision task is represented in

iCodes as a network (Jekel et al., 2018). There are nodes for the

options, cues, and cue values that are connected via links as depicted in

Figure 1. The information-search process of iCodes is

modeled as a spread of activation through this network that is initiated by

the source node at the bottom of the network. Activation is spread between

nodes via the connecting links. The spread of activation continues until

the activation of each node has stabilized and, therefore, does not change

substantially anymore. At this point, the network as a whole is stable and

the model predicts that the concealed cue value whose node received the

most activation during this process is opened next.

The activation, that concealed cue-value nodes receive, stems from two

sources in the network (Jekel et al., 2018). These sources are the option and cue nodes that

are connected to searchable cue values via unidirectional links. Thus,

nodes of concealed cue values receive activation only but do not continue

the spread of activation further. These links are unidirectional to

represent that concealed cue values do not carry any information with

regard to the options or cues. Note that once a concealed cue value is

opened the unidirectional links become bidirectional indicating that the

information of this cue value is now available. The amount of activation

that nodes of searchable cue values receive from cue nodes is proportional

to their respective validities. Thus, the higher the validity of a cue, the

more activation the corresponding cue-value nodes receive. The activation

received from the option nodes depends on the current evidence for the

options. Thus, the more the current evidence favors one option over

another, the more activation the corresponding cue-value nodes receive -

via the links between cue-value nodes and options. Both sources of

activation are assumed to influence search in an additive

manner. Therefore, both the respective cue’s validity and the respective

option’s evidence determine iCodes’s search prediction for a concealed cue

value.

2.1.1 The Attraction Search Effect

Formal models that predict

information search in multi-attribute decision tasks often assume that

information is searched for cue-wise or option-wise and most often

following the order of cues’ validities

(Payne et al., 1988,LeeCummins, 2004,GigerenzerGoldstein, 1996). These

search directions are assumed to be independent of the already available

evidence. In the example trial in Figure 1, in which one

cue value is already available, these models would therefore predict that

the valence of this cue value would not matter for whether information is

searched cue-wise or option-wise. ICodes, however, predicts that the

already available evidence influences information search

(Jekel et al., 2018). This is due to the fact that iCodes assumes a joint

influence of the cues’ validities and the options’ current attractiveness

on information search. The influence of the cues’ validities leads to

iCodes’s prediction that, all things being equal, cue values from highly

valid cues are more likely to be searched for than cue values from less

valid cues. The influence of the current evidence on information search in

the formalized iCodes model also leads to an additional qualitative search

prediction: Cue values with information on the currently preferred option

are more likely to be searched for than cue values with information on the

less attractive option. This prediction has been coined as the Attraction

Search Effect by (Jekel et al., 2018).

Searching information on the currently attractive option has also been

shown in information-search paradigms outside the realm of

probabilistic-inference tasks. One common observation is information-search

behavior consistent with selective exposure

(Frey, 1986,Hart et al., 2009,FischerGreitemeyer, 2010). Selective

exposure is the tendency to search for information that supports the

currently preferred option. In the literature, this pattern of information

search is often considered to mainly stem from the motivation to defend

one’s prior beliefs or prior position

(Hart et al., 2009,FischerGreitemeyer, 2010).1 In the standard paradigm of selective exposure

subjects, therefore, know the valence of the searchable information a

priori (Fischer et al., 2011). This a priori knowledge constitutes

the key difference of selective exposure and the Attraction Search

Effect. The Attraction Search Effect cannot be driven merely by the

motivation to defend one’s preferred option since this would require

knowing beforehand whether the concealed information supports or

contradicts the currently attractive option. Rather, the mechanism of

information search in iCodes is to find information that potentially

increases the coherence of the decision situation.2

Two other phenomena that have been described in the literature predict

search behavior similar to the Attraction Search Effect: pseudodiagnostic

search in hypothesis testing

(Doherty et al., 1979,Mynatt et al., 1993) and

leader-focused search (CarlsonGuha, 2011). Pseudodiagnostic

search describes that individuals tend to search for information about

their current hypothesis only and fail to test the alternative

hypothesis. This behavior is particularly observed when the first piece of

found information supports the currently tested hypothesis

(Mynatt et al., 1993). The aforementioned failure to test

alternative hypotheses is problematic as a cue is only diagnostic for a

hypothesis test when its values are known for both hypotheses.

In the case of leader-focused search, information-search behavior is also

characterized as searching for information on the currently preferred

option (the leader) independently of the expected valence of this

information

(CarlsonGuha, 2011). (CarlsonGuha, 2011)

could show that this preference for information on the leader is so strong

that subjects preferred negative information on the leader compared to

negative information on the trailer (the currently less preferred option).

Similar cognitive explanations have been proposed for both pseudodiagnostic

and leader-focused search. (Evans et al., 2002) proposed that

pseudodiagnostic search results from a habitual focus on one hypothesis

only and individuals tend to ignore other, alternative

hypotheses. Similarly, (CarlsonGuha, 2011) refer to

focalism (Wilson et al., 2000) as a possible underlying

mechanism for leader-focused search in that individuals focus on the

current leader and subsequently ignore other options. Thus, besides

different theoretical underpinnings, the only difference between

leader-focused search and the Attraction Search Effect is that for the

former effect subjects are asked which option is more attractive whereas

for the latter effect the attractiveness of the options is manipulated via

cue-value patterns. Both phenomena, pseudo-diagnostic and leader-focused search, are similar to the

search pattern predicted by iCodes but lack an explicit theoretical model

formalizing the underlying processes of this type of search behavior. With

iCodes, there is now a computational, formal model that allows precise

predictions of when and how strong the information search direction should

be biased towards the currently more attractive option. Hence, our

explanation does not contradict the theories mentioned above, but the

observed focalism may be the result of an underlying coherence-maximizing

mechanism.

When focusing on probabilistic-inference tasks, different models have been

proposed that predict information search, such as heuristics as part of the

adaptive toolbox (e.g., GigerenzerTodd, 1999,Payne et al., 1988)

and models of the class of evidence accumulation models

(e.g., HausmannLäge, 2008,LeeCummins, 2004). However, the

prediction of the Attraction Search Effect is unique compared to these

formalized models as they base only their prediction of the stopping of

information search on the available information. The predicted direction of

information search, however, in these types of models relies solely on

external criteria such as the cues’ validities. Yet, in iCodes, the

information-search prediction depends on the additive effects of

validity-driven cue-node activations and attractiveness-driven option-node

activations on the activations of concealed cue-value nodes

(Jekel et al., 2018). Thus, the Attraction Search Effect follows from the

joint effects of validity and the current attractiveness of the options.

2.1.2 Evidence for the Attraction Search Effect

The Attraction Search Effect was tested by (Jekel et al., 2018) in two

experiments. In both experiments, they used an artificial stock-market game

in which subjects had to choose the more successful of two stocks based on

expert judgments that differed in their respective validities. For this

stock-market game, the authors specifically designed half-open cue-value

patterns that were highly diagnostic for the Attraction Search Effect. The

diagnosticity of the patterns was achieved by creating two versions of each

cue-value pattern such that in the first version (Version a) the Option A

is more attractive than Option B and that in the second version (Version b)

the Option B is more attractive than Option A. The change of attractiveness

between the two versions was achieved by changing one or two cue

values. With these two pattern versions, it was possible to calculate a

qualitative Attraction Search Score that represents the difference of

probabilities of behavior consistent with the Attraction Search Effect and

behavior inconsistent with the Attraction Search Effect. Behavior was

consistent with the Attraction Search Effect when subjects searched for the

attractive Option A in Version a and behavior was inconsistent when

subjects searched for the unattractive Option A in Version b of the

cue-value patterns;

Attraction Search Score = p(Searching for Option A | Version

a)−p(Searching for Option A | Version b). Thus, the Attraction

Search Score is positive if subjects followed iCodes’s predictions for

information search and zero if subjects did not change their direction of

search depending on the attractiveness of the options.

In the first experiment, (Jekel et al., 2018) presented the half-open

cue-value patterns to subjects and restricted information search to one

piece of information. In the second experiment, (Jekel et al., 2018) did

not restrict information search but manipulated whether information search

was costly or free. Both experiments show strong support for the Attraction

Search Effect; though, the effect was less pronounced when information

search was free. These initial results received further support in a

reanalyses of five published experiments that also used a hypothetical

stock-market game but were not specifically designed to test for the

Attraction Search Effect. In addition, iCodes fit the observed

information-search behavior quantitatively well and this fit depended on

the influence of options’ attractiveness in the model. Thus, there is

initial support for iCodes’s information-search predictions in

probabilistic-inference tasks in the semantic context of an abstract and

stylized hypothetical stock-market game.

3 The importance of model generalizability

With the recent extension of PCS-DM to iCodes and the presented empirical

support for one of iCodes’s core predictions, iCodes can be considered as a

general theory for the decision process that incorporates information

search, information integration, and decisions. As a general theory of

decision making and information search, iCodes’s predictions should be

applicable to a broad range of different (multi-attribute) decision

situations. A strict test of the applicability of a theory can be achieved

by conducting a conceptual replication that varies experimental variables

of the original studies (Makel et al., 2012). Conceptual

replications ensure that the original results are not due to task or

situational characteristics of the previous operationalizations but can be

attributed with greater confidence to the processes specified by the theory

(Bredenkamp, 1980). In our conceptual replications, we want to

test whether iCodes’s prediction for information-search behavior

generalizes to different contexts.

In the previous studies testing iCodes, several aspects of the decision

task have been kept constant that should be varied in a conceptual

replication. One of these aspects is the semantic setting of the decision

task. All experiments conducted and reanalyzed by (Jekel et al., 2018)

have used a probabilistic-inference task semantically set in a hypothetical

stock-market scenario. The hypothetical stock-market game is a commonly

used multi-attribute decision task

(Bröder, 2003,Bröder, 2000,Newell et al., 2003)

that allows explicit control over different decision parameters, such as

validities, and allows observation of information-search and decision

behavior relatively unbiased by previous knowledge. Yet, at the same time

and somewhat due to the high level of control, the hypothetical

stock-market game is a highly artificial setting that lacks ties to the

actual daily experiences of subjects. Further, a decision between stocks is

only one instance of all possible decisions and such a neglect of stimulus

sampling in an experiment is not only problematic with regard to the

generalizability of results but also might dilute the validity of the

causal inference (WellsWindschitl, 1999). ICodes’s predictions should, therefore,

apply to a range of different and possibly more realistic semantic

contexts. Testing different semantic contexts is especially relevant as

prior work on leader-focused and pseudodiagnostic search has used a wide

range of different decision contexts

(Evans et al., 2002,Mynatt et al., 1993,CarlsonGuha, 2011). Thus,

it is important to show that the Attraction Search Effect generalizes to

different content domains as well.

Second, the cue-value patterns used to elicit the Attraction Search Effect

have been kept constant between experiments. These patterns were

specifically designed to be highly diagnostic for the Attraction Search

Effect. However, as a general theory of decision making, iCodes’s

predictions should not be confined to a specific set of cue-value patterns

but should be applicable in other cue-value constellations as well. The

cue-value patterns have already been varied to some extent in the

reanalyses of previously run studies in (Jekel et al., 2018). These

reanalyses have, however, all used the same context settings, namely a

stock-market game.

A third aspect that was not varied between experiments is the way the

information for the current decision task was presented. In all

experiments, the cue values were presented in the matrix format of a

typical mouselab task. Presenting information this way makes the relevant

information highly accessible, facilitates information search itself, and

might even influence the subsequent processing of information

(Söllner et al., 2013). Yet, in many real-life decision tasks,

the necessary information is often presented in a more complex fashion than

in a matrix arranged according to cue validity. Thus, in order to claim

that iCodes is general theory of decision making, it is important to show

that the Attraction Search Effect still emerges when information is

structured differently.

The current experiments successively relaxed the restrictions inherent in

(Jekel et al., 2018) demonstrations of the Attraction Search

Effect. First, we extended the semantic contexts to various decision

domains beyond the stock-market game in all three experiments, using 13

different decision contexts altogether. Second, we also used cue-value

patterns different from the original ones (Experiment 2). Finally, we

disposed of the commonly used restrictive matrix format of information

presentation that is prevalent in many studies investigating information

search in decision making (Experiment 3). By relaxing many of the

restrictions inherent in Jekel et al.’s (2018) original experiments, we

aim to replicate the Attraction Search Effect in different decision

contexts and thus test the limits of its generalizability.

4 Experiment 1: Extension to different decision domains

The first experiment used cue-value patterns from the experiments by

(Jekel et al., 2018) but in a selection of six different semantic

contexts. As we are interested in whether iCodes can predict information

search in different contexts, we will concentrate solely on information

search as the dependent variable in this and the following

experiments. Thus, we will not analyze subjects’ choices.

4.1 Method

4.1.1 Materials

Content scenarios.

We constructed six different content

scenarios for the decision task that represented mainly preferential

decisions. These scenarios ranged from choosing a hotel to deciding which

weather forecast to trust when planning a trip. One of the scenarios is the

task to choose which of two cities is larger, commonly known as

city size task, and was added to relate to earlier research

(e.g., GigerenzerTodd, 1999). For every scenario, we chose four

cues relevant to this decision. As the validity of these cues is mostly

subjective, cues were ordered by our assumed importance for each

scenario. To validate our assumptions, subjects were asked after the task

for their subjective rating of importance of the cues. The content

scenarios and the respective cues are displayed in

Table A1 in Appendix 7.2. To make the

decision task less abstract, we further changed the format of the cue

values from "+" and "−" to different pictoral formats, such as a five-

vs. two-star ratings, thumbs-up vs. thumbs-done icons, or "yes" vs. "no"

icons for the city size scenario.3

Cue patterns.

In this experiment, we used a subset of the

original cue-value patterns from

(Jekel et al., 2018). (Jekel et al., 2018) designed their cue-value

patterns in pairs such that two versions of the same pattern differed in

one or two cue values, so that either Option A or Option B was more

attractive (see Table 1). For the present experiment, we

selected three cue patterns from Jekel et al.’s (2018) studies.

Pattern 3 was selected because it illicited the strongest Attraction Search

Effect in Jekel et al.’s (2018) studies, with an Cohen’s d

ranging from 0.81 to 2.66. Patterns 1 and 2 showed the third and fourth

strongest Attraction Search Effect, respectively, in the original studies,

with Cohen’s d ranging from 0.22 to 1.15 and from 0.61 to 0.92,

respectively. These cue-value patterns were chosen to increase our chances

to find an Attraction Search Effect under more relaxed experimental

conditions.

| Table 1: Version a and Version b of cue patterns used in Experiment 1. |

| | Pattern 1 | Pattern 2 | Pattern 3 |

| | A | B | A | B | A | B |

|

Cue 1 | ? | −(+) | +(−) | ? | +(−) | −(+) |

Cue 2 | − | ? | +(−) | ? | ? | ? |

Cue 3 | + | − | ? | ? | + | − |

Cue 4 | − | + | ? | ? | − | ? |

| Note. + = positive cue value, − = negative cue value, ? = hidden, searchable cue value; Version a of patterns is displayed, cue values in parentheses are from Version b. Patterns 1, 2, and 3 correspond to Patterns 4, 5, and 7, respectively, in (Jekel et al., 2018). |

4.1.2 Measures

Subjective importance of cues.

To assess the subjective importance of the cues, subjects were asked to rate each cue on how important they thought the cue was for their decision on a scale from 0 to 100, with zero representing not important at all and 100 representing extremely important. The purpose of this measure was to check whether the assumed validity ordering corresponded to the actual importance ordering by subjects.

Attraction search score.

Just as in the study by

(Jekel et al., 2018), we computed the individual Attraction Search Scores

as the difference of the probability of searching for Option A in Version a

vs. in Version b across the three cue-value patterns,

Attraction Search Score = p(Searching Option A |

Version a)−p(Searching Option A | Version b).4 As mentioned above, the first probability represents the probability of

behavior consistent with the Attraction Search Effect, whereas the second

probability represents the probability of behavior inconsistent with the

Attraction Search Effect. Thus, if the Attraction Search Score is larger

than zero, subjects show more behavior in line with the Attraction Search

Effect.

4.1.3 Design and procedure

Each subject was presented with each of six content scenarios and with each

of the six patterns (three patterns in two versions each). To avoid large

trial numbers which are suboptimal for online studies, the variable

Scenario with six levels and the variable Pattern with six levels (three pattern with two versions each) were

balanced using a latin square design which resulted in six experimental

groups. Therefore, each experimental group was exposed to every pattern and

every content scenario. After opening the online study and agreeing to an

informed consent, subjects provided demographic information before working

on the actual task. In each of the six trials subjects were familiarized

with the decision context and could then search for one piece of additional

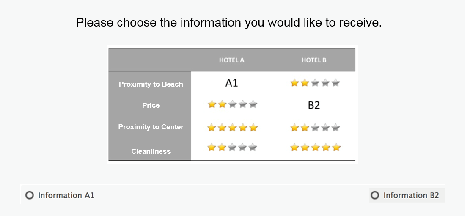

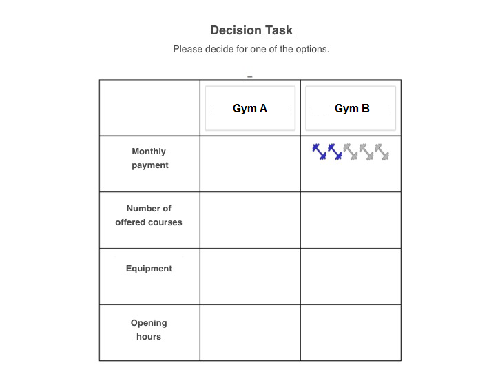

information. A picture of the task setup can be found in

Figure 2. After seeing the additional piece of

information, subjects had to choose one of the options. When the decision

task was completed, subjects filled out the subjective importance measure

for each of the scenario’s cues.

| Figure 2: A translated (from German) screenshot

of the decision task in Experiment 1. The current cue-value pattern is

Pattern 1 in Version a. Subjects could search for information by

selecting the radio button for the corresponding piece of information in

the matrix. On the next screen, the searched-for information appeared in

the decision matrix and subjects could choose one of the options. |

4.1.4 Subjects

The online experiment was conducted with the program Unipark

(Questback, 2016). Subjects were recruited online via the

registration system of the University of Mannheim and via online platforms

such as Facebook research groups. The data collection yielded a sample of

303 subjects (201 female, 47.5 % university students, Mage = 33.7,

SDage = 15.5, age range 17–70). Subjects could decide whether they

participated for course credit or entered a lottery to win a 15€

online-shop gift certificate.

4.2 Results

All following analyses were conducted with R

(R Core Team, 2019). All plots were created by using the

ggplot2 package (Wickham, 2016), mixed model

analyses were run with the packages lme4

(Bates et al., 2015) and lmerTest

(Kuznetsova et al., 2017).

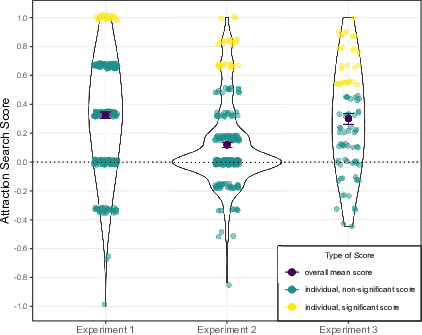

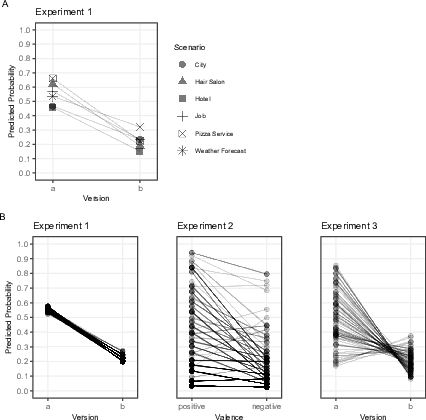

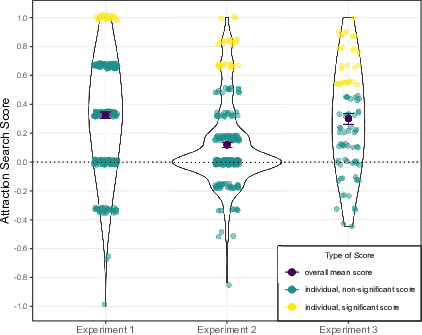

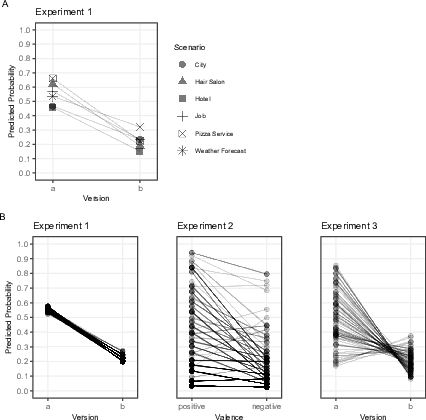

| Figure 3: Distribution of individual Attraction Search

Scores in all three experiments. The violet points represent the mean

Attraction Search Score in each experiment and error bars the standard

errors of those means. Attraction Search Scores of zero indicate

information search that is independent of the currently available

evidence. Thus, every data point above zero indicates that an individual

showed a tendency to search for information on the currently attractive

option. Yellow points indicate individuals showing a significant

(p < .050) score at the individual level according to a one-tailed

binomial test. The number of trials required for significance is 6 out

of 6, 12 out of 14, and 14 out of 18 in Experiments 1–3, respectively. |

To test for the Attraction Search Effect, we tested whether the Attraction

Search Score was significantly larger than zero. The mean Attraction Search

Score of subjects was MASS = 0.32 and was significantly larger than

zero, t(302) = 14.55, p < .001, d = 0.84 (see

Figure 3 for the distribution of individual Attraction

Search Scores in all experiments). We also looked at the Attraction Search

Scores per cue-value pattern.5 The Attraction Search Score was also significantly larger than

zero when looking at the three patterns separately, MPattern1 = 0.25,

t(302) = 6.06, d = 0.35, MPattern2 = 0.26, t(302) = 8.29,

d = 0.48, and MPattern3 = 0.46, t(302) = 13.62, d = 0.78, all

ps < .001. Note, however, that comparing the Attraction Search Scores of

the separate patterns required comparing across different scenarios. To

account for this, we also calculated the Attraction Search Scores for each

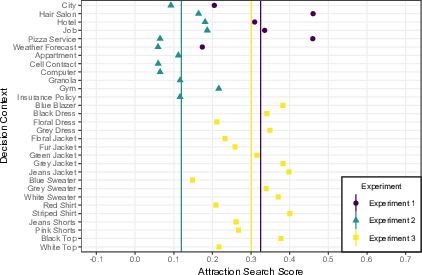

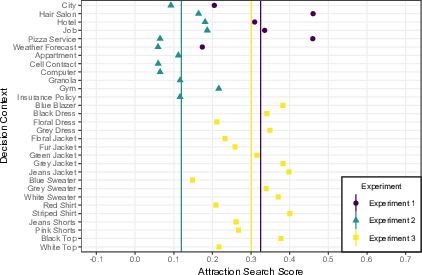

scenario across subjects.6 As shown in Figure 4,

all scenario-wise Attraction Search Scores were above zero; however, there

was substantial heterogeneity in the sizes of the scenario-wise Attraction

Search Scores.

One explanation for the heterogeneity of the Attraction Search Scores on

the scenario level might be that our assumed subjective importance of cues

did not match subjects’ subjective importance. Looking at the subjective

importance ratings, our assumed ordering of cues was mostly matched by the

importance ratings of subjects. Subjects’ mean subjective importance

ratings can be found in Table A1 in the

Appendix 7.2. Substantial differences occurred in the

Hotel scenario, in which subjects considered the last cue as most

important. Further, in the Job and in the City Size

scenarios, subjects considered the second cue as more important than the

first, more so for the City Size scenario.

As the Attraction Search Score aggregated over subjects and content

scenarios, we also ran a generalized linear mixed model analysis to

investigate the variation across these variables. In this model, the

dependent variable was whether subjects searched for Option A in any given

trial. The effect-coded predictor in this model was whether Option A was

attractive in this trial (Version a; +1) or not (Version b; −1). A

significant, positive regression weight for the predictor version

would indicate an information-search pattern consistent with the Attraction

Search Effect. To account for variation in the data, we implemented a

maximum random effects structure with random intercepts for subjects and

content scenarios, as well as random slopes for version.

The results of this generalized linear mixed model showed that subjects

were in general more likely to search for information on Option A given

that this option was attractive, β = 0.75, SE = 0.11, z = 6.77,

p < .001 (see Table B1 and Table B2 for all model

estimates). More precisely, the probability of searching information for

Option A increased from 21.7% in Version b to 55.5% in Version a of the

patterns. The effect of pattern version varied across subjects as well as

content scenarios (see Figure 6). Specifically, the

heterogeneity of the content scenarios matched the one we observed in the

aggregated results.

To check whether we could explain some of the heterogeneity when accounting

for differences due to cue-value patterns, we added a Helmert-coded

cue pattern predictor to the mixed model7 as well as the interaction of

cue pattern and version. The effect of version

remained positive and significant, β = 0.88, SE = 0.13, z = 6.84,

p < .001. Additionally, there was a significant effect that subjects were

less likely to search for Option A when faced with Pattern 2 than when

faced with Pattern 1, β = −0.80, SE = 0.07, z = −10.75,

p < .001. Further, the effect of version on information search

depended on cue pattern, such that the version effect was

the most pronounced for Pattern 3 when comparing it to the other two

cue-value patterns, β = 0.15, SE = 0.04, z = 3.72, p <

.001. There also was a larger effect for Pattern 1 compared to Pattern 2,

β = 0.16, SE = 0.07, z = 2.14, p = .032.

| Figure 4: Distribution of Attraction Search Scores for each decision context in all three experiments. The lines represent the mean Attraction Search Scores across subjects and scenarios in the respective experiments. |

4.3 Discussion

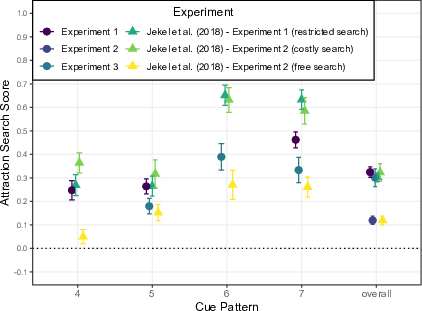

The first experiment shows strong support for the Attraction Search Effect

in semantic contexts different from the hypothetical stock-market game

originally used by (Jekel et al., 2018). Subjects tended to search for

information about the more attractive option in all of the three cue-value

patterns as well as in every content scenario. The effect sizes as well as

the absolute Attraction Search Scores overall and for the separate cue-value

patterns mirror those from (Jekel et al., 2018) in their study without

information search costs (for the Attraction Search Scores in

(Jekel et al., 2018) experiments see

Figure 5).

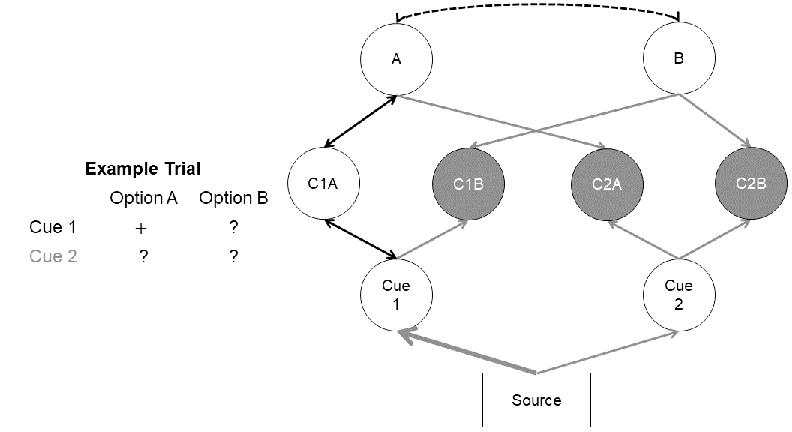

| Figure 5: Mean Attraction Search Scores for

each cue-value pattern and overall from all three experiments in

comparison with the Attraction Search Scores from

(Jekel et al., 2018). The triangles represent the mean

Attraction Search Scores from the first two studies by

(Jekel et al., 2018) for each pattern and overall. Cue-pattern

names on the x axis are the original names from

(Jekel et al., 2018): Patterns 4, 5, and 7 correspond to

Patterns 1, 2, and 3 in Experiment 1, respectively; Patterns 5, 6, and 7

correspond to Patterns 1, 2, and 3 in Experiment 3, respectively. |

| Figure 6: Predicted probabilities of searching for

Option A (Experiment 1 and 3) or of searching for the same option

(Experiment 2) based on random slopes of mixed logistic regression

analyses. The plot under A represents the random slope for the different

decision scenarios in Experiment 1, the plots under B represent the

random slopes for subjects in all three experiments. These plots can be

read as follows: the more negative the slope between Version a and b (or

positive and negative initial valence in Experiment 2, respectively),

the stronger the predicted Attraction Search Effect for this scenario or

subject. |

Our mixed model analyses reveals that the strength of the Attraction Search

Effect differs between individuals as well as semantic contexts. The

differences in effect size for the semantic contexts might be due to the

fact that our assumed subjective importance ordering did not always match

those of subjects. This assumption is supported by the fact that among the

weakest predicted effects for decision context are the City and the Hotel

Scenario.8

Both semantic contexts showed on average a different ordering in subjects’

importance ratings. In sum, we replicated the results from

(Jekel et al., 2018) in a more diverse setting, however, while still

using the cue-value patterns that were specifically designed to elicit the

Attraction Search Effect. Therefore, it is an important next step to show

that the Attraction Search Effect can be found with different cue-value

patterns.

5 Experiment 2: Extension to different cue patterns

In the second experiment, we extended the results from the first experiment

by testing whether the Attraction Search Effect can be found in more

diverse semantic contexts and even without using specifically designed,

highly diagnostic cue patterns. Therefore, we did not present any

information before search and manipulated only the valence of the first cue

value subjects searched for while randomizing the valence of the remaining

cue values. This experiment and the respective hypothesis were

preregistered (Open Science

Framework; Scharf et al., 2017, osf.io/j7vg4).

5.1 Method

5.1.1 Materials

In addition to the six decision scenarios used in the first experiment, we

developed six further decision contents, ranging from renting a new

apartment to deciding on a new gym or to buying a new computer (all

scenarios and cues can be found in Table 2).

We presented a completely closed mouselab matrix to our subjects. In this

matrix, the valences for all but the first opened cue values were randomly

assigned. The valence of the first searched-for cue value was

counterbalanced, to achieve an experimental manipulation of the

attractiveness of options. This manipulation thus ensures that in six of

the twelve trials the first searched-for cue value yielded positive

information (and thus made the first searched-for option attractive)

whereas in the other six trials the first searched-for cue value yielded

negative information (and thus made the first searched-for option

unattractive). It is important to note that it did not matter which

specific piece of information subjects searched for first for this

manipulation to take effect.

To control whether subjects complied with instructions and read the

decision scenarios, we included a decision scenario recognition test. After

subjects completed the decision trials, they were asked to identify on

which topics they had just decided. For this purpose, they were shown

six out of the twelve original decision scenarios and six distractor

scenarios. If they answered more than two scenarios incorrectly, subjects

were excluded from analysis.

| Table 2: Additional content scenarios and cues in Experiment 2. |

| Granola | Gym |

|

|

| Amount of Dietary Fiber | Monthly Pay |

| Number of Calories | Offered Courses |

| Proportion Organic Ingredients | Equipment |

| Proportion Fairtrade Ingredients | Opening Hours |

|

| Computer | Apartment |

|

|

| Price | Proximity to City Center |

| Speed | Sufficient Lighting |

| Design | Square Footage |

| Loudness | Friendliness of Neighbors |

|

| Insurance Company | Cell Contract |

|

|

| Coverage | Monthly Pay |

| Monthly Pay | Network Reception |

| Accessibility in Case of Damage | Number of Free Minutes |

| Customer Friendliness | Data Volume |

| Note. Scenario names are printed in bold font, the four cue names are printed underneath the respective scenario name.

|

5.1.2 Measures

As we did not use the cue-value patterns from the original study by

(Jekel et al., 2018), we computed the individual Attraction Search

Scores as the difference of the probability of switching options

between the first and the second information search across subjects

and scenarios when the initial evidence was negative vs. positive;

Attraction Search Score = p(switching options|initial negative

information) − p(switching options | initial positive information).9 Switching options when the

initially found evidence is negative is consistent with the Attraction

Search Effect, while switching options when the initially found

evidence is positive is inconsistent search behavior. Therefore, as in

the first experiment, if the Attraction Search Score is larger than

zero, subjects show more behavior in line with the Attraction Search

Effect.

5.1.3 Design and procedure

We manipulated the valence of the first clicked-on cue value (positive vs.

negative) within-subjects. As (Jekel et al., 2018) showed that the

Attraction Search Effect is stronger when information search is costly, we

additionally tried to induce a sense of search costs by restricting the

number of possible searches per trial (either three, five, or seven

searches). We opted for restricting information search instead of

implementing explicit search costs, as implementing monetary search costs

is difficult in preferential decision tasks, especially with hypothetical

tasks conducted online. Since the Attraction Search Effect requires

available information to take effect, restricting search to one piece of

information as in the original experiments by (Jekel et al., 2018) is not

possible in a completely closed matrix. In order to restrict information

search and at the same time to avoid subjects immediately opening the fixed

amount of information granted to them, we opted for restricting information

search variably from trial to trial without subjects knowing beforehand how

much information they could open in this specific trial. This way, every

piece of information subjects chose to open during a trial should

rationally be the most informative piece of information they could choose,

as it could be their last piece of information. Therefore, subjects were

not informed about the restriction of search before starting a trial but

were only informed whenever they opened the maximal number of possible

information for the trial. It is important to note that information search

was restricted only in the sense that subjects could not open more

information — they were free to search for less information than the

allowed amount per trial given they opened at least one cue

value.

The order of trials and thus the valence of the first cue value and the

amount of search was randomized for each subject. After following the link

to the online study, subjects first gave their consent for participating in

the study. Following a practice task, subjects started working on the

actual decision trials. Before each trial, subjects were presented with a

brief introduction into the ensuing content scenario. Subjects had to open

one piece of information in every trial. They could then search for either

two, four, or six additional pieces of information; however, they did not

know how many pieces of information they could search for in a specific

trial. When subjects reached the limit of searchable information in a

trial, they were informed that they could no longer search for additional

information and that they had to decide now (for an example trial of the

decision task see Figure 7). After completing all

12 trials, subjects had to work on the recognition task, in which they had

to identify six of the original content scenarios among a list with

additional six distractor scenarios.10 After finishing this task, subjects went

on to provide some demographic details about themselves and then could

decide whether they wanted to receive course credit; participate in the

lottery, in which they could win one of ten 10€-online shop gift

certificates; or neither of these two options. Finally, subjects were

debriefed and thanked for their participation.

| Figure 7: A translated screenshot of the

decision task in Experiment 2. In the current trial, the valence of the

first opened information was negative (2 of 5 dumbbells). Subjects could

search for information by clicking on the empty boxes in the

matrix. Then the respective cue value would appear. Afterwards, they

chose one of the options by clicking on the button around the options. |

5.1.4 Subjects

An a-priori power analysis assuming α = β = .05 for a one-tailed one sample t test and a small Attraction Search Effect with a Cohen’s d = 0.20 yielded a sample size of 272 subjects (Faul et al., 2007). Due to expected dropout, a sample of 300 subjects was aspired to collect. The stopping rule was to either stop data collection after two months or when 300 subjects were collected. The study was programmed with lab.js (Henninger et al., in press) in conjunction with the Multi-Attribute Decision Builder (Shevchenko, 2019). The original sample included 305 completed data sets. From these 305 subjects, eight subjects were excluded because data were not saved for all of the twelve decision trials. Thus, the complete sample included a sample of 297 subjects (230 female, 1 other, Mage = 22.9, SDage = 5.6). Seventeen subjects were excluded because they answered more than two questions incorrectly in the recognition test. After exclusion, a total of 280 subjects remained in the final sample (217 female, 1 other, 84.6% university students). The mean age of the sample was Mage = 22.8 (SDage = 5.6, range 18–63).

5.2 Results

5.2.1 Preregistered analyses

To test whether the Attraction Search Effect emerged in a preferential decision task without specifically designed patterns, we calculated the Attraction Search Score for each subject over all trials. As predicted, the Attraction Search Score was significantly larger than zero MASS = 0.12, t(279) = 6.82, p < .001, Cohen’s d = 0.41. Thus, we found evidence for the Attraction Search Effect in different semantic contexts and closed cue-value patterns.

5.2.2 Additional exploratory analyses

To compare the heterogeneity between decision scenarios to the first

experiment, we also calculated the Attraction Search Scores for each

scenario across subjects. As shown in Figure 4,

all scenario-wise Attraction Search Scores were above zero and there was

less heterogeneity between scenarios compared to Experiment 1.

To account for the multi-level structure of the data and to explore the

heterogeneity between scenarios further, we also ran a generalized linear

mixed model analysis comparable to that in Experiment 1. In this model, the

dependent variable was whether subjects continued to search for the same

option as in their first search in any given trial. The predictor was

whether the valence of the first opened cue value was positive or

negative. Again, a significant, positive regression weight for the

predictor valence would indicate an information-search pattern

consistent with the Attraction Search Effect. To account for variation in

the data, we implemented a model with random intercepts for subjects and

content scenarios as well as a random slope for valence for

subjects.11

The results of this generalized linear mixed model showed that subjects

were in general more likely to stay with the searched-for option when the

first opened cue value was positive, β = 0.38, SE = 0.11,

z = 3.58, p < .001 (see Table B1 and Table B2

for all model estimates). Specifically, the probability of staying with the

searched-for option increased on average from 6.5%, when the first opened

cue value was negative, to 12.9%, when the first cue value was

positive. The results for the random effects showed considerable variance

of the effect of valence between subjects (see

Figure 6).

Looking at the distribution of the Attraction Search Score values in

Figure 3 and the heterogeneity of the individual effects

in the mixed model, it was apparent that there is a large proportion of

subjects that did not show the Attraction Search effect. In fact, the

median of the overall Attraction Search Score distribution was

MdASS = 0. One difference between subjects with an Attraction Search

Score of zero and subjects with a non-zero Attraction Search Score was the

amount of searched cue values. Subjects with an Attraction Search Score of

zero tended to search for more cue values, MASS=0 = 4.72, than

subjects with a non-zero Attraction Search Score, MASS≠0 = 4.57,

t(277.09) = −2.61, p = .010, Hedge’s g = −0.31. Additionally, we

found that subjects with higher individual Attraction Search Scores tended

to take longer to open the first cue value, r(278) = .341, p < .001.

To further investigate subjects who had an Attraction Search Score of zero,

we hypothesized that some subjects used predetermined, fixed search

strategies. To test this assumption, we formulated three different search

strategies: strictly cue-wise, lenient cue-wise, and strictly option-wise

information search.12 The strictly cue-wise search was

defined as subjects starting to search for information on one option’s

side, continuing their search on the same cue on the other option’s side,

and then returning to the first option’s side for the ensuing search and so

on. The lenient cue-wise search also was defined as always searching for

two pieces of information from the same cue consecutively but did not

require to always start the search on the same option. The strictly

option-wise search was defined as searching information on one option until

all information for this option was acquired and then switching to the

other option. On average, subjects used a strictly cue-wise search strategy

in 39.1% (SD = 25.0), a lenient cue-wise search strategy in 23.7%

(SD = 17.9), and an option-wise search strategy in 7.1% (SD = 14.2) of

trials. In 30.1% (SD = 23.4) of trials, subjects’ information-search

pattern could not be classified as belonging to one of the aforementioned

strategies. Thus, in over half of all trials some kind of fixed cue-wise

search strategy was used.

In order to test whether the occurrence of Attraction Search Scores of zero

could be explained by subjects using predetermined search strategies, we

correlated the individual Attraction Search Scores with the number of

trials of each subject belonging to one of formulated search

strategies. Indeed, the correlation of individual Attraction Search Scores

and the number of trials in which subjects searched strictly cue-wise was

negative, r = −.31, n = 280, p < .001; indicating that subjects who

searched for information strictly cue-wise in more trials had lower

Attraction Search Scores. The results were similar for the lenient cue-wise

strategy for which the correlation was negative as well, r = −.16,

n = 280, p = .008. For the number of trials searched following an

option-wise strategy, we found a positive correlation, r = .28,

n = 280, p < .001. The correlation between the number of unclassified

trials per subject and the individual Attraction Search Scores was also

positive, r = .28, n = 280, p < .001. Therefore, subjects with a low

Attraction Search Score had a stronger tendency to search for information

consistent with a pre-determined, cue-wise search strategy.

To analyze the influence of strategies on the trial level, we ran the same

mixed logistic regression as described above and added the count of trials

following any of the above-mentioned strategies as a

predictor.13 In this model, the probability of searching for the

same option was 12.6% when finding initial positive evidence compared to

6.2% when finding initial negative evidence, β = 0.38, SE = 0.11,

z = 3.63, p < .001 (see Table B1 and Table B2

for all model estimates). Additionally, the more trials in which a subject

showed information-search behavior that followed a specific strategy the

less likely she was to continue to search for the same option,

β = −0.41, SE = 0.04, z = −9.99, p < .001. The number of trials

following a search strategy also influenced the strength of the effect of

the first opened cue value, β = −0.09, SE = 0.03, z = −2.71,

p = .007. This interaction took the effect that if no strategy was used

in any trial, the predicted probability of searching for the same option

when the initial information was positive was 90.4% compared to 51.0%

when the initial information was negative. On the other hand, when an

information search strategy was used in every trial, the predicted

probability of searching for the same option was 2.3% when the initial

information was positive and 2.0% when the initial information was

negative. Note that the overall effect of searching with a strategy

was negative because cue-wise search strategies, which had a negative

effect on the Attraction Search Score, were much more common (in total

62.8% of trials) than option-wise search strategies (7.1% of trials),

which had a positive effect on the Attraction Search Score.

5.3 Discussion

In the second experiment, we took a step further away from the original

setup of (Jekel et al., 2018) by extending the range of semantic contexts

and using closed cue-value patterns with randomized cue values. The results

show that the Attraction Search Effect emerges under these conditions as

well and, thus, does not appear only when using highly diagnostic cue-value

patterns. Further, in contrast to the first experiment, the effect of the

valence manipulation did not differ between decision contexts and there

were systematic differences only in how likely subjects were to continue to

search for the same option in different contexts. The systematic

differences in the valence effect between different scenarios might be

absent because in this experiment the prediction of the Attraction Search

Effect did not require the subjects to have the correct subjective

importance ordering. Rather, we assumed that the first opened cue is likely

to be the most valid cue.

We did observe a considerable drop in effect size in the second experiment

compared to the first. This drop is due to a large number of subjects who

had an Attraction Search Score of zero. This finding is also supported by

the large variability due to subjects in the mixed model analysis. The

heterogeneity can partly be explained by looking at subjects’ search

behavior: Subjects with Attraction Search Scores of zero tended to search

for more information. Additionally, subjects with lower Attraction Search

Scores tended to open the first cue value faster and searched for

information in a cue-wise fashion in more trials. The results of the mixed

logistic regression corroborate these findings by showing that the

Attraction Search Effect is weakened the more subjects followed specific

information search strategies on the trial level. Taken together, these

exploratory results show similarities to Jekel et al.’s (2018) results in

the condition without search costs. (Jekel et al., 2018) showed that

subjects searched for more information faster and that individual

Attraction Search Scores were considerably reduced when no information

search costs were implemented. Thus, the results of Experiment 2 indicate

that the restriction of search might not have been strong enough to induce

a sense of search costs.

Besides the aforementioned limitations, we still found a medium-sized

Attraction Search Effect in an experiment that did not rely on a specific

semantic context or specifically designed cue-value patterns. Thus, the

results of this experiment emphasize the overall robustness of the effect

and the range of applicability of iCodes.

| Table 3: Version a and Version b of cue patterns used in Experiment 3. |

| | Pattern 1 | Pattern 2 | Pattern 3 |

|

| | A | B | A | B | A | B |

|

Cue 1 | +(−) | ? | +(−) | −(+) | +(−) | −(+) |

Cue 2 | +(−) | ? | ? | ? | ? | ? |

Cue 3 | ? | ? | + | − | + | − |

Cue 4 | ? | ? | − | + | − | ? |

| Note. + = positive cue value, − = negative cue value, ? = hidden, searchable cue value; Version a of patterns is displayed, cue values in parentheses are from Version b. Patterns 1, 2, and 3 correspond to Patterns 5, 6, and 7, respectively, in (Jekel et al., 2018). |

6 Experiment 3

Experiment 3 varied another aspect of the decision task that has been kept

constant in Jekel et al.’s (2018) studies and in our studies so far: the

way in which information is presented. Until now, every experiment testing

the predictions of iCodes has used the matrix presentation of the classic

mouselab task. It has been shown that the way information is presented

influences information-search behavior

(BettmanKakkar, 1977,Ettlin et al., 2015). Presenting information in a

matrix organizes the information for the decision maker and this

organization in turn influences search behavior

(SchkadeKleinmuntz, 1994). Thus, in this experiment we test whether

the Attraction Search Effect still emerges in a quasi-realistic online shop

setting. The subjects’ task in this experiment was to imagine being a buyer

for an online clothing shop and to buy clothes online. In addition, as the

two previous experiments were both run in German and with German samples,

we decided to collect data from a different, non-German subject pool via

the platform Prolific (PalanSchitter, 2018). This

experiment and our hypothesis were preregistered (Open Science

Framework; Scharf et al., 2018, osf.io/nfruq).

6.1 Method

6.1.1 Materials

Cue patterns.

As in Experiment 1, we again used a subset of

the original cue-value patterns from (Jekel et al., 2018). As described

above, each pattern has two versions that differ in which option is

currently more attractive. For this experiment, we selected three from the

original eight patterns, displayed Table 3. Pattern 2 and

Pattern 3 were chosen because they elicited the strongest and the second

strongest Attraction Search Effect in the original studies. Pattern 1,

which elicited the fourth strongest Attraction Search Effect in the

original studies, was chosen to include a pattern that showed a strong

effect but at the same time has more than three searchable cue

values. Thus, the addition of Pattern 1 was supposed to increase the

variability between patterns. Each pattern in both versions was presented

three times, leading to a total number of 18 trials per subject.

Shop items.

We used images of 18 different items of clothing

for this experiment. These articles of clothing were each described by

customer ratings on four attributes. Subjects were told that these

attributes differed in their relative importance for the online shop they

are shopping for. The attributes in the order of their importance were the

fit of the clothes, the comfort of the fabric, the availability of sizes,

and the ease of care. The customer ratings were dichotomized, such that a

negative overall rating of one of the attributes was described by two stars

and a positive overall rating was described by five stars. To increase the

realism of the online shop, each item was assigned a fictional brand name

(four-letter pseudowords adapted from StarkMcClelland, 2000) and a

fictional brand logo. In each trial, subjects had to decide between the

same article of clothing that differed in their brands and the customer

ratings of their attributes only. An example trial is displayed in

Figure 8.

| Figure 8: A screenshot of the decision task in

Experiment 3. The current cue-value pattern is Pattern 3 in Version

b. Subjects could search for information by clicking on the number under

the cue name. The number indicated the importance of the cue for the

decision, with "1" representing the most important attribute and "4"

representing the least important attribute. Then the respective cue

value would appear. Afterwards, they chose one of the options by

clicking on its "Add to cart" button. |

6.1.2 Measures

Just as in Experiment 1, we computed the individual Attraction Search

Scores as the difference of the probability of searching for Option A in

the nine trials of Version a vs. of Version b across articles of clothing,

Attraction Search Score = p(Searching Option A | Version a)

− p(Searching Option A | Version b).14

6.1.3 Design and procedure

All subjects were presented with all cue-value patterns in both versions

and all shop items in a total of 18 trials (3 cue-value patterns x 2

pattern versions x 3 repetitions). Note that the cue patterns were repeated

but not the items of clothing. The order of trials as well as the

combination of cue-value patterns, shop items, logos, and brand names were

randomized for each subject. We further balanced presentation of the

cue-value patterns for the repetitions, such that Option A of each pattern

was once on the left side, once on the right side, and assigned to a side

randomly for the third repetition. The online experiment was programmed in

lab.js (Henninger et al., in press) and run via the platform

Prolific (PalanSchitter, 2018). Subjects received £1.10 for their participation. Before working on the actual task, subjects

agreed to an informed consent form and read the instructions for the

task.

Subjects were asked to imagine that they work as a buyer for an online

clothing shop and that their task was to choose 18 different articles of

clothing in order to restock their employer’s warehouse. We included three

questions about the instructions that had to be answered correctly before

the subjects could continue with the actual task. The number of repetitions

it took to answer these questions correctly were used as an exclusion

criterion, such that when subjects had to repeat these questions more then

once they were excluded from analysis. During the task, subjects were

allowed to search one additional piece of information, after which they had

to decide which article of clothing they wanted to buy. Before finishing

the study, subjects were asked to provide some demographic information and

were then thanked for their participation.

6.1.4 Subjects

In a student project conducted to pretest the materials, we found an

Attraction Search Effect with an effect size of Cohen’s d = 1.34 with

N = 312. As the current experiment was run in a non-German and likely

more diverse sample, we decided to be rather conservative for our

sample-size rationale. A sensitivity analysis revealed that we could find

an effect of Cohen’s d = 0.33 for a one-sided one-sample t-test with an

α = β = .05 and a sample of N = 100 subjects. As we expected

some experimental mortality due to the fact that this experiment was run

online, we aimed to collect 10% more than the needed sample, which

resulted in a total sample size of 110 subjects. We collected data of

N = 110 subjects, from which N = 99 were complete data sets (48 female,

1 other, Mage = 31.3, SDage = 10.0). Ten subjects were excluded

because they had to repeat the instruction check two or more times which

resulted in a final sample of N = 89 (44 female, 1 other, 16.9%

university students). The mean age of the sample was Mage = 31.3

(SDage = 10.0, Range 18–60). All but one subject indicated that they

were native English speakers.

6.2 Results

6.2.1 Preregistered analyses

Just as in the first and the second experiment, we hypothesized that the

average Attraction Search Score is significantly larger than zero. In order

to test this hypothesis, we calculated the individual Attraction Search

Scores for all subjects. The mean Attraction Search Score was

MASS = 0.30, t(88) = 7.92, p < .001, Cohen’s d =

0.84. Therefore, we found evidence for subjects’ search behavior being

consistent with iCodes’s predictions even when the cue-value information

was not presented in a matrix.

6.2.2 Exploratory analyses

As a first exploratory analysis, we tested whether we could find an

Attraction Search Score larger than zero when looking at the three patterns

separately.15 Each pattern yielded a significantly positive Attraction Search

Score, MPattern1 = 0.18, t(88) = 5.47, d = 0.58,

MPattern2 = 0.39, t(88) = 6.87, d = 0.73, and

MPattern3 = 0.33, t(88) = 6.23, d = 0.66, all p < .001. We also

calculated the Attraction Search Scores for each article of clothing, which

can be found in Figure 4. The heterogeneity

between items of clothing seemed to be more pronounced than in Experiment 2

but somewhat less pronounced than in Experiment 1.

We also ran a generalized linear mixed model for Experiment 3. Just as in

Experiment 1, the dependent variable was whether subjects searched for

Option A in any given trial and the effect-coded predictor was whether

Option A was attractive in that trial (Version a; +1) or not (Version b;

−1). To account for variation in the data, we added random intercepts for

subjects and content scenarios as well as a random slope for

version for subjects.16

The results showed that subjects were on average more likely to search for

information on Option A given that this option was attractive,

β = 0.76, SE = 0.10, z = 7.18, p < .001 (see

Table B1 and Table B2 for all model

estimates). Specifically, the probability of searching information for

Option A increased from 18.5% in Version b of the pattern to 51.0% in

Version a of the pattern. At the same time, the effect of pattern version

varied across subjects systematically, as shown in

Figure 6.

To try to explain some of the inter-individual variance in the effect, we

added the Helmert-coded cue pattern predictor17 to the

model. The effect of version was still significantly positive in

this model, β = 0.91, SE = 0.14, z = 6.60, p < .001, indicating

that the probability of searching for Option A increased from 14.3% in

Version b to 50.8% in Version a. There were also significant effects for

both pattern predictors, indicating that subjects were more likely to

search for Option A in Pattern 2 compared to Pattern 1, β = 1.36,

SE = 0.11, z = 12.96, p < .001, as well as in Pattern 3 compared to

Pattern 1 and 2, β = 0.18, SE = 0.05, z = 3.81, p <

.001. However, there was no significant interaction between the

cue pattern and the version predictors, ps > .100.

6.3 Discussion

The results of Experiment 3 show that the Attraction Search Effect is not

restricted to a matrix presentation format but can also be found in a more

realistic, less restrictive setting. The effect sizes of the separate cue

patterns as well as the absolute Attraction Search Scores are comparable to

those of (Jekel et al., 2018) in the condition without search costs (see

Figure 5), as all three patterns show a medium

to large effect. The results plotted in Figure 3 further

show that, albeit not restricted to the original cue-value patterns, the

effect is more pronounced with the original cue-value patterns, when

comparing the results of Experiment 2 with Experiment 3. We do not find the

same level of heterogeneity between decision contexts in Experiment 3

compared to the first experiment (see

Figure 4). This might be explained by the fact

that the decision content is more homogeneous in Experiment 3 compared to

Experiment 1 because all decisions were made between articles of

clothing. There is also no evidence in the results of Experiment 3 for the

same interaction of the cue patterns and the cue pattern version that was

found in Experiment 1. The absent interaction is probably due to two

reasons: first, the original effect sizes in (Jekel et al., 2018) of the

cue patterns used in Experiment 3 were more homogenous from the start when

compared to the cue patterns from Experiment 1. Second, the interaction between

subjective importance of cues and option attractiveness was reduced in

Experiment 3 as the ordering of the cues’ importance was given at the start

of the experiment.

7 General discussion

The Attraction Search Effect is the core prediction by iCodes that states

that information search is influenced not only by the validity of the

information but also by the attractiveness of the

options. (Jekel et al., 2018) provided first evidence for this prediction

in three experiments that all shared the same task characteristics and the

same semantic content. The goal of the current project was to test the

range of applicability of iCodes’s search predictions. For this purpose, we

ran three conceptual replications of the original studies that varied

aspects that were kept constant in the original experiments. In the first

experiment, we showed that the Attraction Search Effect is not restricted

to the probabilistic-inference tasks in Jekel et al.’s (2018) experiments

but also emerges in preferential decision tasks in six every-day content

domains. The results of the second experiment, which was preregistered,

illustrate that the Attraction Search Effect generalizes to a wider range

of different semantic contexts and further show that the Attraction Search

Effect also emerges without specifically designed and diagnostic cue-value

patterns, albeit with a somewhat reduced effect size. In the last

experiment, also preregistered, we found evidence that the Attraction

Search Effect is also present when one moves away from the classic matrix

format of information presentation to a more realistic simulated

online-shop setting. Thus, we found evidence for iCodes’s

information-search prediction in three experiments with in total 627

subjects. These results show that the influence of the already available

information on information-search direction is a robust phenomenon that can

be found in different variants of the classic multi-attribute decision

task. They further strengthen iCodes as a general theory of decision making

and information search.

7.1 Limitations and future directions

The results of Experiment 2 show that there are boundary conditions for the

generalizability of the Attraction Search Effect. As the second experiment

was the only experiment that did not use the cue-value patterns from

(Jekel et al., 2018) and did not restrict information search to one piece

of information, it is likely that the reduced effect size in Experiment 2

was at least partially caused by the change in the experimental setup. The

change from specifically designed, diagnostic cue-value patterns to

randomized cue-value patterns naturally weakens the effect of the

experimental manipulation, as the reduced experimental control due to the

randomization of cue values may have increased the noise in the data. The

second aspect that was different in Experiment 2 compared to the two other

experiments was that search was less restrictive. The original results by

(Jekel et al., 2018) showed that costly or restricted search is relevant

for the strength of the Attraction Search Effect. It is possible that the

restriction of search, that varies from trial to trial, we used to

implement search costs was not strong enough to elicit a reliable

Attraction Search Effect for many subjects who instead opted for a

heuristic search strategy. This assumption is supported by the fact that

subjects that showed no Attraction Search Effect tended to search for more

information and did so faster than subjects that did show the Attraction

Search Effect in this experiment, just like subjects in the condition

without search costs in (Jekel et al., 2018). In fact, individual

Attraction Search Scores tended to be lower for subjects that used cue-wise

search strategies more often and higher for subjects whose search behavior