
Judgment and Decision Making, vol. 8, no. 3, May 2013, pp. 188-201

The role of actively open-minded thinking in information acquisition, accuracy, and calibration

Uriel Haran* Ilana Ritov# Barbara A. Mellers%

Errors in estimating and forecasting often result from the failure to collect and consider enough relevant information. We examine whether attributes associated with persistence in information acquisition can predict performance in an estimation task. We focus on actively open-minded thinking (AOT), need for cognition, grit, and the tendency to maximize or satisfice when making decisions. In three studies, participants made estimates and predictions of uncertain quantities, with varying levels of control over the amount of information they could collect before estimating. Only AOT predicted performance. This relationship was mediated by information acquisition: AOT predicted the tendency to collect information, and information acquisition predicted performance. To the extent that available information is predictive of future outcomes, actively open-minded thinkers are more likely than others to make accurate forecasts.

Keywords: forecasting, prediction, overconfidence, calibration, individual differences, actively open-minded thinking.

Research in disciplines such as meteorology, statistics, finance, and psychology has tried to measure and explain the relationship between people’s confidence in their predictions and the accuracy of those predictions (e.g., Gigerenzer, Hoffrage, & Kleinbölting, 1991; Harvey, 1997; Henrion & Fischhoff, 1986; Klayman, Soll, González-Vallejo, & Barlas, 1999). Overconfidence in the accuracy of one’s estimates—sometimes called overprecision, to distinguish it from other types of overconfidence (Moore & Healy, 2008)—refers to the discrepancy between the confidence people have in the accuracy of their estimates, predictions, or beliefs and actual accuracy rate. Overconfidence has proven to be robust and difficult to remedy, although some interventions have been partially successful (Haran, Moore, & Morewedge, 2010; Soll & Klayman, 2004; Speirs-Bridge et al., 2010). In this work, we examine cognitive styles and personality dimensions that might be related to performance, and seek an explanation for how they work.

Most studies attribute confidence-accuracy miscalibration to one of two shortcomings. The first is the under-appreciation of uncertainty and sources of error (e.g., Erev, Wallsten, & Budescu, 1994; Gigerenzer et al., 1991; Soll, 1996). Specifically, Juslin, Winman, and Hansson (2007) argued that judges make two errors in transforming samples of information into an estimate: they perceive the sample as an exact, unbiased representation of the estimated population; and they fail to acknowledge that sample variances are smaller than population variances. As a consequence, their estimates often miss the mark.

The second shortcoming is the tendency to focus on the first answer that comes to mind, while failing to properly consider alternative outcomes (e.g., McKenzie, 1998). This failure to consider alternatives may come in the form of an incomplete search for relevant information, failure to retrieve available information from memory, or underweighting the importance or validity of information inconsistent with one’s initial hypothesis. The estimation process begins with a search in memory for relevant information to provide a tentative answer. This tentative answer, once reached, biases the search and retrieval of new information, as well as the interpretation of ambiguous evidence, in favor of the initial conclusion (e.g., Hoch, 1985; Koriat, Lichtenstein, & Fischhoff, 1980).

Building on this conceptualization, researchers have tried to improve confidence-accuracy calibration by encouraging judges to direct more attention to alternative evidence and other possible answers. Fischhoff and Bar Hillel (1984) instructed participants to look at the problems they were solving from different perspectives. Others (Hirt & Markman, 1995; Morgan & Keith, 2008) asked forecasters to project multiple scenarios, rather than imagine the one they deemed most probable. McKenzie (1997) explicitly told participants to take the alternative into account before making an estimate, whereas Koriat et al. (1980) instructed judges to generate self-contradicting arguments. These studies have reported modest success in reducing the discrepancy between the confidence judges displayed in their estimates and their accuracy, not by increasing accuracy, but by reducing confidence.

Infinite search for, and consideration of, information prior to an estimate will result in the most informed estimate possible. These procedures, however, are costly in time and effort, and their utility (the likelihood that the estimate based on them will be accurate) increases at a diminishing rate (Hertwig & Pleskac, 2010). According to some (e.g., Dijksterhuis, Bos, Nordgren, & Van Baaren, 2006; Gigerenzer & Gaissmaier, 2011), effortful search and information processing may even decrease accuracy. Judges, then, should be cognizant of an optimal point at which they should stop their efforts, in order to increase accuracy on the one hand, and avoid waste of resources on the other (Baron, Badgio, & Ritov, 1991; Browne & Pitts, 2004; Juslin & Olsson, 1997). While we agree that too much processing can hinder efficient decision making, people rarely “overthink” before making an estimate or forecast, and they have never been criticized for drawing conclusions from too large a sample. While proposed strategies for effective judgment vary greatly and not all prescribe more search and deliberation, we seek to identify the characteristics of persistent judges who acquire more information before estimating. We acknowledge that these individuals might not always make better forecasts than those who collect less information. Therefore, our studies measure not only information acquisition but also the accuracy of estimates based on the acquired information.

Previous research has documented stable individual differences in calibration (e.g., Klayman et al., 1999; Wolfe & Grosch, 1990). For example, some evidence indicates that men are more overconfident in their estimates than are women (Barber & Odean, 2001). Calibration is also related to expertise (Koehler, Brenner, & Griffin, 2002), though not in every estimate format (McKenzie, Liersch, & Yaniv, 2008). Surprisingly, not many relationships have been found between accurate estimations and personality attributes. Extraversion correlates negatively with accuracy and calibration on various cognitive and estimation tasks (Lynn, 1961; Schaefer, Williams, Goodie, & Campbell, 2004; Taylor & McFatter, 2003), but positively with short-term recall (Howarth & Eysenck, 1968; Osborne, 1972). McElroy and Dowd (2007) found that openness to experience was related to greater susceptibility to the anchoring bias. Finally, overconfidence has been linked to proactiveness (Pallier et al., 2002), narcissism (Campbell, Goodie, & Foster, 2004), self-monitoring (Cutler & Wolfe, 1989), and trait optimism (Buehler & Griffin, 2003).

Researchers have established a stronger link between cognitive style and estimation performance. For example, McElroy and Seta (2003) found that an analytic and systematic processing style correlated with reduced susceptibility to framing effects. Baron, Badgio, and Gaskins (1986) assessed reflection/impulsivity in students, a dimension that corresponds to the speed vs. accuracy tradeoff in problem-solving. Those who are more reflective take more time to reason before acting and deciding, a tendency found to be related to better performance (i.e., a lower error rate, Kagan, 1965; Messer, 1970; Weiss Barstis & Ford, 1977). In this paper, we examine four dimensions of cognitive styles and their influence on the accuracy of estimations.

Actively open-minded thinking. Going beyond the reflection/impulsivity construct, Baron (1993) developed a reasoning style called actively open-minded thinking (AOT). This style of thinking includes the tendency to weigh new evidence against a favored belief, to spend sufficient time on a problem before giving up, and to consider carefully the opinions of others in forming one’s own. Research by Stanovich, West, and others (Macpherson & Stanovich, 2007; Sa, West, & Stanovich, 1999; Stanovich & West, 1998) found that AOT was related to a reduced susceptibility to belief bias—the inability to decouple prior knowledge from reasoning processes. This relative immunity to over-reliance on prior beliefs might increase actively open-minded thinkers’ desire to be more informed before making an estimate or prediction, and their higher attention to information already acquired may further improve their estimation performance. Items of the Actively Open-minded Thinking Scale are provided in the Appendix.1

Need for cognition. This cognitive style refers to the tendency to engage in and enjoy effortful cognitive endeavors (Cacioppo, Petty, & Kao, 1984). Cohen (1957) argued that individuals with a high need for cognition were more likely to organize, elaborate on, and evaluate information. Cacioppo and Petty (1982) found that this attribute predicted attitudes toward simple cognitive tasks, relative to complex ones. Individuals with low need for cognition enjoyed easier tasks, whereas those with high need for cognition enjoyed more difficult tasks. Kardash and Scholes (1996) found a relationship between need for cognition and the tendency to properly draw inconclusive inferences from mixed evidence. People with high need for cognition were less likely to jump to a conclusion when the evidence did not warrant it. Finally, Blais, Thompson, and Baranski (2005) found a positive relationship between need for cognition and accuracy in judgment.

Grit. Duckworth, Peterson, Matthews, and Kelly (2007) developed the construct of grit as a complement to intelligence in predicting success in academic contexts. They defined grit as perseverance and passion for long-term goals. This trait includes the exertion of vigorous effort to overcome challenges and maintain effort in the face of failure and adversity. The authors found that, while grit did not correlate positively with IQ, it accounted for some of the variance in successful outcomes of academics and professionals.

Maximizing vs. satisficing. Maximizing behavior is aimed at achieving the highest expected utility (Simon, 1978). In choice, those who maximize look for the best option, as opposed to those who satisfice, or choose an alternative that is “good enough”. Satisficing is often linked to the use of heuristics in judgment and decision processes and is assumed to be more prone to bias. Surprisingly, several studies have found the opposite pattern—that maximizers report more frequent engagement in spontaneous decision making (Parker, Bruine de Bruin, & Fischhoff, 2007) and display both lower accuracy and greater overconfidence than do satisficers in prediction tasks (Jain, Bearden, & Filipowicz, 2013). These studies have tended to focus on judgment outcomes and not on the process by which judgments are formed. Therefore, we included this measure to test whether maximizers look for more information than satisficers before deciding that they are sufficiently informed to make an estimate.

These four attributes—AOT, need for cognition, grit, and maximizing—are conceptually distinct. Actively open-minded thinking refers to the consideration of evidence prior to making a decision. Thus, we expected it to be the most predictive of information acquisition in our studies. Need for cognition is a general trait that reflects the desire to think and exert mental effort. Grit and maximizing are even broader constructs, in the sense that they are not limited to thinking tasks. Despite these conceptual differences, all four variables may predict the willingness to spend more time and effort in making an informed prediction.

We conducted three studies to examine the relationships between these attributes, persistence in information acquisition, and performance in an estimation task. We measured individual attributes and elicited estimates in both categorical and quantitative formats. We either measured or manipulated the amount of information participants obtained prior to estimation. All three studies were conducted online. Participants were recruited through Amazon.com Mechanical Turk (see Krantz & Dalal, 2000; Paolacci, Chandler, & Ipeirotis, 2010 for reviews of this participant pool and online data collection in general).

Participants made a series of categorical and quantitative estimates. We measured the four individual difference variables mentioned earlier, as well as the amount of information participants acquired prior to each estimate. The goal was to examine whether the propensity to acquire more information, as well as subsequent performance, could be predicted by any or all of the four thinking-style attributes.

One hundred eighty-three U.S.-based participants (97 females, Mean age = 35.28) completed an online survey in exchange for $0.50 each. The study consisted of two parts. The first part included four perception tasks, presented in an order chosen at random for each participant. In each task, participants saw a number of objects of different types (i.e., 47 balls of four different colors, 25 emoticons of three different expressions, 42 mathematical characters of four types, 30 objects of four different shapes; see Figure 1 for an example). The objects were presented at random places on a computer screen for four seconds at a time. Participants then estimated which object type appeared most frequently, rated their confidence in the accuracy of this estimate, and provided an 80% confidence interval for the total number of objects on the screen.

Figure 1: A sample stimulus used in Studies 1 and 2. Participants estimated which type of character was the most frequent on the screen, as well as the total number of characters presented.

Participants were permitted to view the objects as many times as they wanted. Each time, the objects appeared in a different random order for four seconds. After each presentation, participants decided whether to view the objects again or make an estimate. Persistence of information acquisition was measured by the number of times participants chose to view the objects.

After completing the four tasks, participants answered questions about cognitive styles and personality dimensions. These included, in an order chosen at random for each participant, the Actively Open-minded Thinking scale (see Appendix), need for cognition (Cacioppo et al., 1984), the Short Grit Scale (Grit-S; Duckworth & Quinn, 2009), the Maximization Scale (Nenkov, Morrin, Ward, Hulland, & Schwartz, 2008), the Big 5 personality dimensions (Gosling, Rentfrow, & Swann, 2003), worry (Van Rijsoort, Emmelkamp, & Vervaeke, 1999), and the Cognitive Reflection Test (Frederick, 2005).

Table 1: Correlations among the four cognitive style dimensions and other individual attributes measured in Study 1.

| | AOT | Need for cognition | Grit | Maximizing |
|---|---|---|---|---|
| AOT | | | | |
| Need for cognition | .355*** | | | |
| Grit | -.078 | .276*** | | |
| Maximizing | -.096 | .150* | -.055 | |
| Age^a | .212** | .022 | .165* | -.204** |
| Level of education | .053 | .125 | .190* | .127 |
| Cognitive reflection | .300*** | .304*** | -.024 | .067 |

^a Log(10) transformed. * p < .05, ** p < .01, *** p < .001.

Participants viewed the objects an average of 5.70 (SD = 3.99) times. They achieved 2.87 (SD = 0.96) correct choices in four tasks, or a 71.86% success rate. Participants reported 69.04% confidence, on average, in the accuracy of their choices in each task, which did not differ significantly from their success rate, t(182) = −1.45, p = .15. Participants were overconfident in their estimates of the total number of objects presented. Their 80% confidence intervals for the total number of objects included the actual numbers in only 2.08 of the 4 trials (SD = 1.35), achieving a success rate of 52.05%, significantly lower than 80%, t(182) = 11.17, p < .001, d = 0.83.
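The overconfidence test reported for the confidence intervals can be approximately reproduced from the summary statistics alone. The sketch below is an illustration, not the authors' analysis script: it assumes a one-sample t-test of the mean number of hits against the 3.2 hits (80% of 4 trials) that calibrated intervals would achieve, with Cohen's d computed as the mean difference divided by the sample SD. The function name `one_sample_summary` is hypothetical.

```python
import math


def one_sample_summary(mean, sd, n, target):
    """Return (t, d) for a one-sample test of `mean` against `target`.

    t is the mean difference divided by its standard error;
    d (Cohen's d) is the mean difference divided by the sample SD.
    """
    diff = target - mean
    standard_error = sd / math.sqrt(n)
    return diff / standard_error, diff / sd


# Study 1 confidence intervals: 2.08 hits out of 4 (SD = 1.35), n = 183.
# Calibrated 80% intervals would contain the true value on 0.80 * 4 trials.
n = 183
t_stat, cohen_d = one_sample_summary(mean=2.08, sd=1.35, n=n, target=0.80 * 4)
print(f"t({n - 1}) = {t_stat:.2f}, d = {cohen_d:.2f}")
```

With these rounded inputs the result lands close to the reported t(182) = 11.17 and d = 0.83; small discrepancies are expected because the published mean and SD are themselves rounded.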

The distribution of the number of times participants chose to view the objects was highly skewed; we therefore used a log(10) transformation of information acquisition. This variable was related to accuracy in estimates of the most frequent item type, r = .415, p < .001, as well as in the confidence intervals for the total number of objects on the screen, r = .311, p < .001, although no relationship was found with the width (log transformed) of these confidence intervals, r = −.071, p = .34. More informed participants also felt more confident about the accuracy of their choices, r = .246, p = .001, and the calibration of these confidence assessments (measured by the squared difference between confidence and accuracy) improved with the amount of information participants collected prior to their estimates, r = −.172, p = .02.
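The measures in this paragraph can be sketched in a few lines. The example below is a minimal illustration on made-up data, not the study's data or code: it log-transforms view counts, computes each participant's calibration error as the squared difference between confidence and accuracy, and correlates both accuracy and calibration error with the transformed counts. The helper names and the six hypothetical participants are assumptions introduced for illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def calibration_error(confidence, accuracy):
    """Squared difference between confidence and accuracy (both in [0, 1])."""
    return (confidence - accuracy) ** 2


# Hypothetical participants: total views, correct choices out of 4,
# and mean stated confidence. These numbers are invented.
views = [1, 2, 3, 5, 8, 20]
correct = [1, 2, 2, 3, 4, 4]
confidence = [0.55, 0.60, 0.70, 0.70, 0.85, 0.90]

log_views = [math.log10(v) for v in views]   # tame the right skew
accuracy = [c / 4 for c in correct]
errors = [calibration_error(c, a) for c, a in zip(confidence, accuracy)]

r_accuracy = pearson_r(log_views, accuracy)
r_calibration = pearson_r(log_views, errors)
print(f"r(log views, accuracy) = {r_accuracy:.3f}")
print(f"r(log views, calibration error) = {r_calibration:.3f}")
```

A negative correlation with calibration error, as in the reported r = −.172, means that confidence tracked accuracy more closely among participants who collected more information.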

Table 1 summarizes the correlations among the scores on the four cognitive style measures. Multiple stepwise regression analyses revealed that AOT was the best predictor among the four variables, and the only variable that predicted both information acquisition and performance. Participants who scored higher on the AOT scale made more accurate estimates of the most frequent object type and their confidence intervals for the total number of objects included the correct answer more often. Scores on the AOT scale correlated positively with openness to experience, r = .229, p = .002, and need for cognition, r = .355, p < .001, as well as with performance on the Cognitive Reflection Test, r = .300, p < .001. However, none of these other measures or any other measures in the study was significantly related to performance on the experimental tasks (see Table 2).

Table 2: Results from stepwise regression analyses predicting information acquisition, correct choices and accurate confidence intervals in Study 1. Unstandardized regression coefficients are reported, with standard errors in parentheses.

| Variable^a | Information acquisition: Model 1 | Information acquisition: Model 2 | Information acquisition: Model 3 | Correct choices: Model 1 | Correct choices: Model 2 | Accurate confidence intervals: Model 1 |
|---|---|---|---|---|---|---|
| Constant | 0.238† (0.12) | 0.017 (0.16) | -0.669** (0.22) | 1.908*** (0.40) | 1.531** (0.44) | -0.113 (0.56) |
| AOT | 0.087** (0.03) | 0.091*** (0.03) | 0.066** (0.03) | 0.198* (0.08) | 0.193* (0.08) | 0.451*** (0.11) |
| Grit | | 0.006* (0.003) | 0.004 (0.003) | | | |
| Age^b | | | 0.579*** (0.13) | | | |
| Level of education | | | | | 0.102* (0.05) | |
| R² | .063** | .085*** | .174*** | .032* | .054** | .081*** |
| ΔR² | | .023* | .088*** | | .022* | |
| F | 12.08 | 8.41 | 12.55 | 5.92 | 5.14 | 16.05 |
| F for ΔR² | | 4.51 | 19.12 | | 4.25 | |

^a Need for cognition and maximizing were not included in any significant model. Among the other variables, only variables included in any significant model are presented. ^b Log(10) transformed. † p < .1, * p < .05, ** p < .01, *** p < .001.

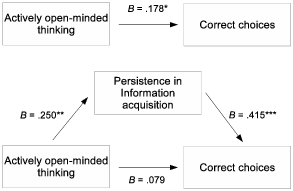

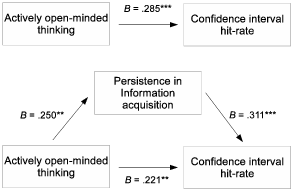

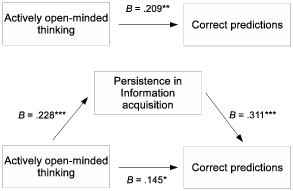

We conducted a mediation analysis (Baron & Kenny, 1986) to test whether persistence of information acquisition mediated the relationship between AOT and performance. As Figures 2 and 3 show, persistence of information acquisition mediated the relationship between AOT and performance, both in choosing the most frequent object type (full mediation) and in accurate confidence intervals for the total number of objects (partial mediation). This suggests that high AOT individuals were more accurate because of their willingness to view objects more often before making their estimates. These results persisted even after controlling for age and CRT score.

Figure 2: Results of mediation analysis for persistence in search for information in the relationship between AOT and choice accuracy in Study 1. Standardized coefficients are presented. * p < .05; ** p < .01; *** p < .001.

Figure 3: Results of mediation analysis for persistence in search for information in the relationship between AOT and confidence interval hit-rate in Study 1. Standardized coefficients are presented. ** p < .01; *** p < .001.
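The logic of the Baron and Kenny (1986) procedure can be illustrated with standardized coefficients computed from three correlations. The sketch below is an assumption-laden illustration, not a reconstruction of Figures 2 and 3: it takes the reported r = .415 between information acquisition and choice accuracy, and back-derives the two AOT correlations as the square roots of the single-predictor R² values in Table 2 (.063 and .032). The function `mediation_paths` and its dictionary keys are hypothetical names, and the resulting numbers should not be read as the published path coefficients.

```python
import math


def mediation_paths(r_xm, r_xy, r_my):
    """Standardized mediation paths from three pairwise correlations.

    x = predictor (here AOT), m = mediator (information acquisition),
    y = outcome (performance).
    """
    denom = 1.0 - r_xm ** 2
    a = r_xm                                   # x -> m
    c = r_xy                                   # total effect, x -> y
    b = (r_my - r_xy * r_xm) / denom           # m -> y, controlling for x
    c_prime = (r_xy - r_my * r_xm) / denom     # direct effect, x -> y given m
    return {"a": a, "b": b, "c": c, "c_prime": c_prime, "indirect": a * b}


# Rough inputs (assumptions): correlations back-derived from Table 2 R² values.
paths = mediation_paths(
    r_xm=math.sqrt(0.063),   # AOT with log information acquisition
    r_xy=math.sqrt(0.032),   # AOT with correct choices
    r_my=0.415,              # information acquisition with correct choices
)
for name, value in paths.items():
    print(f"{name}: {value:.3f}")
```

In standardized form the total effect decomposes exactly into the direct effect plus the indirect effect (c = c′ + a·b), so mediation shows up as a direct effect c′ that is smaller than the total effect c once the mediator is controlled.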

We expected to find a positive relationship between information acquisition and AOT. But the construct, AOT, is also related to the consideration and processing of existing information. Similarly, estimate performance depends not only on the acquisition of relevant information, but also on the effective processing of this information. The mediation analyses suggest that in this study, AOT worked by enhancing the former process: controlling for persistence in information acquisition weakened the relationship between AOT and performance. However, the task employed in this study cannot distinguish between information acquisition and information processing. Therefore, in Study 2, we constrained participants’ ability to collect more evidence and measured their performance given a fixed amount of pre-estimate information.

Study 1 demonstrated that AOT influences performance and is associated with a more persistent acquisition of information. In this study, we sought to test whether there are other ways, unrelated to information acquisition, by which high AOT individuals achieve better performance. Note that the items used to measure AOT are at least as related to the willingness to consider more diverse information and give more weight to evidence that challenges one’s prior opinion as they are to the propensity to search for new evidence (see Appendix). So, while not being excessively focused on one’s prior belief leads actively open-minded individuals to acquire more evidence prior to forming an informed opinion, high and low AOT individuals may also differ in how they process information. To test this proposition, we kept the amount of information constant. Under these conditions, any difference in performance could be attributed only to more effective information processing. If, on the other hand, performance does not correlate with AOT when information is held constant, the results would suggest that the relationship between AOT and performance is driven primarily by the search for information.

Two hundred twenty U.S.-based participants (100 females, Mean age = 31.85) completed an online survey in exchange for $0.50 each. They completed the same tasks and questionnaires used in Study 1, except that they did not determine the amount of information they acquired prior to making their estimates. Rather, they were randomly assigned to three groups, varying in the amount of information they received. One group viewed the objects twice in each task before making an estimate. A second group viewed the objects five times (the median number of times participants viewed the objects in Study 1). The third group viewed the objects eight times. We predicted that more information would lead to better estimates, but that, without the ability to control the amount of information acquired, AOT would not predict performance.

Table 3: Performance measures by amount of information participants received prior to estimating in Experiment 2. Standard deviations are presented in parentheses.

Participants correctly estimated the most frequent object type 2.71 times (SD = 0.98), on average, out of four tasks, achieving a 67.73% success rate. They displayed underconfidence, by reporting 63.04% confidence, on average, in the accuracy of their choices in each task, t(219) = −2.73, p = .007, d = 0.19. Participants’ 80% confidence intervals for the total number of objects included the actual number 1.58 times (SD = 1.06) on average, achieving a success rate of 39.50%. This performance level was significantly lower than the assigned 80% confidence level to each confidence interval, t(219) = 22.67, p < .001, d = 1.53, implying overconfidence.

Table 3 shows the results of the different information conditions. More information was related to more accurate choices of the most frequent object type, r = .169, p = .01, and higher average confidence in each choice, r = .358, p < .001, although these relationships were not observed in confidence interval estimates.2 The means provided in Table 3 suggest that viewing the objects 8 times did not improve performance relative to viewing them 5 times, which may be attributed to fatigue.

Did actively open-minded thinking predict estimate accuracy when participants could not control the amount of information? The answer is no. Multiple stepwise regression analyses revealed that the number of times the objects were presented to participants predicted performance on the choice tasks, B = 0.067, R2 = .029, F(1,218) = 6.45, p = .01, but not on confidence intervals, B = −0.025, R2 = .003, F < 1. None of the cognitive styles or other individual attributes we measured predicted performance on either task, all Bs ≤ 0.112, all ts ≤ 1.69, all ps ≥ .1. Without the opportunity to conduct a more thorough search for information, neither AOT nor any other variable was related to performance.

These results are consistent with those of Study 1, in which participants could collect as much information as they wished prior to estimating. In Study 1, individuals with higher AOT gathered more information and performed better. In Study 2, participants were given a fixed amount of information and could not control the amount they deemed sufficient for making an estimate, and, here, AOT was not related to performance. This suggests that the better performance of high AOT individuals in Study 1 was not due to the use of information already obtained, but rather to their tendency to gather more information.

The failure of AOT to predict performance when information acquisition is held constant seems, at first glance, at odds with the definition of AOT as giving sufficient weight to new information or information that is inconsistent with prior beliefs. However, participants in Study 2 did not have a chance to form a prior belief before receiving the information; they knew nothing of the makeup of object types before the tasks, and so had no reference point for considering new information. Nevertheless, performance in Study 2 did not imply differences in the processing of information between individuals differing in AOT, suggesting that the performance differences observed in Study 1 were due to differences in information acquisition.

In this study, we sought to replicate our previous findings in a more naturalistic prediction setting. The added realism addresses three concerns about Studies 1 and 2. First, the tasks in these studies were unusual. We wanted to ensure that the effects of AOT and information acquisition also held in more realistic contexts. Therefore, we created a platform for predicting outcomes of sports games.

Second, in the first two studies, all pre-estimate information items were equally valid and helpful for accuracy. Real events, however, are less predictable. From warm, sunny days in the middle of winter to the fall of long-standing dictatorships, some events are not ones a wise gambler would bet on, but they may nevertheless occur. Studies 1 and 2 suggested that AOT predicted estimate accuracy when judges could collect valid information. In this study, we predicted that the relationship would hold only when the prior information available was positively correlated with the outcome. For example, when predicting the outcome of a football game, one may use the information about the teams’ record leading up to the game, and predict a win for the team with the better record (i.e., the favorite). If this team wins, then the prediction, which was consistent with the available information, was also accurate. However, if the team with the worse record (i.e., the underdog) ends up winning, then the prediction was inaccurate, although it was still consistent with the information available at the time. Therefore, we also measured predictions’ coherence, that is, the degree to which predictions were consistent with prior information (Dunwoody, 2009). Finally, in this study we introduced a monetary incentive for prediction accuracy.
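The distinction between coherence and accuracy drawn above can be made concrete with a small scoring sketch. It assumes, for illustration only, that a prediction counts as coherent when it picks the team with the better record (the favorite) and as accurate when it picks the actual winner; the study's exact coherence measure may differ. The `Game` class, the team labels, and the predictions are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Game:
    favorite: str  # team with the better record before the game
    winner: str    # team that actually won


def summarize(games, predictions):
    """Return the proportion of coherent and of accurate predictions."""
    n = len(games)
    coherent = sum(pred == game.favorite for game, pred in zip(games, predictions))
    accurate = sum(pred == game.winner for game, pred in zip(games, predictions))
    return {"coherence": coherent / n, "accuracy": accurate / n}


# Hypothetical schedule: the second game is an upset (underdog D beats favorite C).
games = [Game("A", "A"), Game("C", "D"), Game("E", "E")]
predictions = ["A", "C", "F"]  # favorite, favorite, underdog

result = summarize(games, predictions)
print(result)
```

The second prediction is coherent but inaccurate because the game was an upset, which is the case in which acquiring more pre-game information would be expected to hurt rather than help.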

Two hundred U.S. participants (87 females, Mean age = 32.36) completed an online survey for a flat $0.50 fee plus a 1/50 chance to win a $10 prize. Additional $2 prizes were awarded to the ten participants with the most accurate predictions.

This experiment consisted of two parts. While the second part included the same battery of questionnaires used in the previous two studies, the first part was a new prediction task. Participants were asked to predict the outcomes of ten games that took place during one week of a National Football League season. To minimize unwanted effects of expertise, we chose a mid-season week in a past season, which was not revealed to participants.3

For each game, participants were told the names of the home team and the road team and predicted the winner. At the bottom of the screen, there were two buttons, one for “Information” and one for “Estimate”. Each time they clicked the “Information” button, participants received one of ten facts about one or both teams, in a random order. These facts included a team’s record (overall, home/away games, and division/conference/inter-conference games), a team’s recent performance (last game, five games, or current streak), a team’s offensive and defensive rankings, the outcome of the two teams’ last meeting, and injuries to significant players, if there were any. After each fact was presented, participants went back to the previous screen, where they could click on “Information” again to receive another fact, or on “Estimate” to advance to the prediction of the winner.

After making all ten predictions, participants reported their level of expertise in football (on a 1-9 scale, ranging from “I know nothing” to “Expert”) and proceeded to the battery of individual attribute questionnaires.

Table 4: Results from stepwise regression analyses predicting information acquisition, correct estimates, confidence and overconfidence in one’s estimates of games in which the better team won in Study 3. Unstandardized regression coefficients are reported, with standard errors in parentheses.

Confidence Overconfidence Model 1 Model 2 -0.980 2.623*** 66.441*** 32.915*** 39.83*** (0.97) (0.39) (2.04) (8.86) (9.36) 0.092** 0.034** -0.745** -0.652* (0.03) (0.01) (0.26) (0.26) 1.007* (0.43) -2.414* (1.13) .052** .043** .027* .041** .063** 0.022* 10.82 9.00 5.49 8.50 6.59 4.54

^a The analyses included the four cognitive style variables, expertise, age, and level of education. Only variables included in any significant model are presented.

* p < .05, ** p < .01, *** p < .001.

Participants made an average of 5.65 correct predictions out of 10 (SD = 1.36). They requested 2.11 facts (SD = 2.47), on average, before making each prediction. The average level of confidence in the accuracy of their predictions was 70.59% (SD = 11.29), implying overconfidence of 14.09%, t(199) = 11.62, p < .001, d = 0.82. Performance correlated positively with expertise, r = .182, p = .01, meaning that self-reported experts performed better than novices.

Four of the ten games resulted in upsets, meaning that the team with the inferior record leading up to the game beat the team with the better record.4 For these four games, information provided about teams’ past performance was harmful, rather than helpful. This difference was indeed evident in the data: predictions for the non-upset games were correct more frequently (63% of the time) than predictions for the upset games (46.75%, which was almost significantly worse than chance, t(199) = 1.73, p = .08). More importantly, pre-game information acquisition was related to better prediction accuracy of non-upset games, r = .311, p < .001, but to lower accuracy in games that yielded unexpected outcomes, r = −.504, p < .001. Therefore, we analyzed the two sets of games (upsets and non-upsets) separately.

For non-upset games, we conducted multiple stepwise regression analyses of information acquisition, performance, confidence and overconfidence, including the four cognitive styles and expertise. AOT was the only variable that predicted information acquisition and performance (see Table 4). All other variables were non-significant. As Figure 4 shows, the relationship between AOT and performance was partially mediated by persistence of information search. Similar to Study 1, high AOT participants acquired more information and made more correct predictions than low AOT individuals.
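The logic of such a mediation test can be illustrated with a small sketch. It assumes a regression-based approach in the spirit of Baron and Kenny (1986): estimate the total effect of the predictor on the outcome, the effect of the predictor on the mediator, and the direct effect of the predictor once the mediator is controlled. The data below are synthetic and hypothetical, the variable names are illustrative, and the slopes are unstandardized, so the output does not correspond to the coefficients in Figure 4.

```python
import random

def _cov(u, v):
    """Sample covariance of two equal-length sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)

def mediation_paths(x, m, y):
    """Ordinary least squares slopes for a single-mediator model."""
    sxx, smm, sxm = _cov(x, x), _cov(m, m), _cov(x, m)
    sxy, smy = _cov(x, y), _cov(m, y)
    total = sxy / sxx                       # y regressed on x alone
    a = sxm / sxx                           # mediator regressed on x
    det = sxx * smm - sxm ** 2
    direct = (sxy * smm - smy * sxm) / det  # slope of x in y ~ x + m
    b = (smy * sxx - sxy * sxm) / det       # slope of m in y ~ x + m
    return {"total": total, "a": a, "b": b,
            "direct": direct, "indirect": a * b}

# Hypothetical data: the predictor affects the outcome only through the mediator.
rng = random.Random(0)
n = 200
aot = [rng.gauss(0, 1) for _ in range(n)]
facts = [0.5 * x + rng.gauss(0, 1) for x in aot]
correct = [0.4 * m + rng.gauss(0, 1) for m in facts]

paths = mediation_paths(aot, facts, correct)
for name, value in paths.items():
    print(f"{name}: {value:.3f}")
```

In ordinary least squares the total effect equals the direct effect plus the indirect effect (a times b), so a direct effect that shrinks toward zero once the mediator is included indicates mediation.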

Figure 4: Results of mediation analysis for persistence in search for information in the relationship between AOT and accurate predictions in games won by the favorite in Study 3. Standardized coefficients are presented. * p < .05; ** p < .01; *** p < .001.

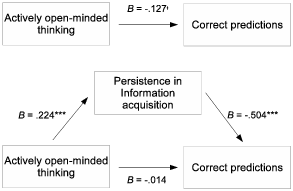

Games with upsets, where more information was related to worse performance, showed a different pattern. Multiple stepwise regression analyses reveal that, while AOT was again the only variable to predict persistence of information acquisition, it did not predict either performance or confidence. Correctly estimating these surprising outcomes was rather related to expertise (see Table 5). In fact, the relationship between AOT and performance in these games was negative and almost significant, B = −.127, t(199) = −1.80, p = .07. As Figure 5 shows, controlling for persistence in information acquisition eliminated this relationship.

Table 5: Results from stepwise regression analyses predicting information acquisition, correct estimates, confidence and overconfidence in estimates of games that resulted in upsets in Study 3. Unstandardized regression coefficients are reported, with standard errors in parentheses.

Confidence Model 1 Model 2 -1.071 1.446*** 65.072*** 72.562*** (0.98) (0.15) (1.43) (3.83) 0.091** (0.03) 0.102** 1.338*** 1.343*** (0.03) (0.30) (0.30) -0.090* (0.04) .050** .048** .090*** .110*** .020* 10.50 10.02 19.69 12.23 4.43

a The analyses included the four cognitive style variables, expertise, age, and level of education. Only variables included in any significant model are presented.

b The analysis revealed no significant predictors of overconfidence.

* p < .05, ** p < .01, *** p < .001.

Figure 5: Results of mediation analysis for persistence in search for information in the relationship between AOT and accurate predictions in games won by the underdog in Study 3. * p < .05; ** p < .01; *** p < .001.

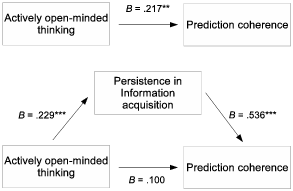

To test the hypothesis that AOT predicted the extent to which participants relied on the information they could acquire before making an estimate, we measured coherence: the consistency between the predicted outcome and the outcome that the pre-estimate information implied was more likely. If higher AOT is linked to greater information acquisition and greater reliance on the information when making predictions, then higher AOT individuals should display greater consistency with prior information than lower AOT individuals. Our results support this prediction. A multiple stepwise regression analysis revealed that AOT was the only significant predictor of consistency, B = .217, R² = .047, t(199) = 3.13, p = .002. As Figure 6 shows, this relationship was fully mediated by the number of pre-game facts acquired, suggesting that the degree to which estimates followed pre-estimate information depended on the amount of information acquired.
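One way to make the coherence measure concrete is sketched below. It assumes that coherence is scored as the proportion of a participant's predictions that name the team favored by the pre-game information; the exact scoring rule is not spelled out here, so this is an illustration rather than the original procedure. The team labels and responses are hypothetical.

```python
def prediction_coherence(predicted_winners, favored_teams):
    """Share of predictions that match the team favored by prior information."""
    if not predicted_winners or len(predicted_winners) != len(favored_teams):
        raise ValueError("need two non-empty sequences of equal length")
    matches = sum(p == f for p, f in zip(predicted_winners, favored_teams))
    return matches / len(predicted_winners)

# Hypothetical participant: ten games, each with a favored team "A" or "B".
favored = ["A", "A", "B", "A", "B", "B", "A", "B", "A", "A"]
predicted = ["A", "B", "B", "A", "B", "A", "A", "B", "A", "B"]

score = prediction_coherence(predicted, favored)
print(score)
```

A participant who always picks the favored team scores 1.0 on this measure, which maximizes accuracy in non-upset games and minimizes it in upsets.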

Figure 6: Results of mediation analysis for persistence in search for information in the relationship between AOT and prediction coherence in Study 3. Standardized coefficients are presented. ** p < .01; *** p < .001.

To summarize, this study replicated the findings of Study 1 in a naturalistic prediction setting. Actively open-minded thinking was related to information acquisition and greater prediction coherence, or consistency between the prediction and the information acquired. When the outcome was consistent with the acquired information, AOT led to greater accuracy, but when the outcome was an upset (i.e., inconsistent with the information), higher AOT was associated with greater coherence and worse performance. High AOT individuals collected more information and used it when making their predictions. However, with invalid information, this strategy backfired.

Estimations of present outcomes and predictions about future outcomes can be difficult, if not impossible, tasks. Prior research has produced evidence that people insufficiently search for relevant information before making estimates. But as yet, there is no cure. We investigated variables that predicted differences in the tendency to be persistent in information search. Contenders included actively open-minded thinking (AOT), need for cognition, grit, and maximizing. In three studies, we tested these variables’ relationship with persistence in information acquisition, and whether they predict estimate accuracy and calibration. We used two different methods of estimating—item-confidence and confidence intervals—which have been shown to differ in the degree of accuracy and overconfidence they produce (Juslin, Wennerholm, & Olsson, 1999).

The only variable related to information acquisition and performance was AOT. This variable predicted accuracy in both categorical and quantitative estimates. Higher AOT was related to higher persistence in search for information, higher accuracy of estimates and lower overconfidence (though not via reduced confidence). Persistence of information acquisition mediated the relationship between AOT and performance. In Studies 1 and 3, high AOT individuals acquired more information, which in turn resulted in better performance when information was helpful for producing accurate estimates. In Study 2, when the amount of available information was kept constant, AOT had no effect on performance.

Study 3 introduced the concept of prediction coherence, or the degree to which predictions were consistent with available information. For highly probable outcomes, more coherent predictions were also more accurate. However, for an improbable outcome, in our case an inferior team beating a superior team in an NFL game, coherence was related to lower accuracy. AOT was associated with greater coherence; higher AOT individuals performed better when games were not upsets and worse in games that resulted in upsets. When information was misleading, high AOT individuals were more susceptible to it. In an uncertain world, event outcomes do not always fully match prior information about them. But as long as the information is at least somewhat predictive, coherence should have a positive relationship with accuracy, and AOT should be helpful in making accurate estimates and predictions.

The mediating role of information acquisition in the relationship between AOT and estimation performance can potentially explain the effects of AOT found in prior research. For example, AOT’s role in reducing belief bias (Sa et al., 1999) may be related to high AOT individuals’ propensity to search for more available information, whether in their environment or in memory, before answering. AOT might also have an influence on other problems in judgment and decision making. The positive effect of information acquisition on confidence interval hit-rate we found is consistent with the findings of Haran et al. (2010), whose Subjective Probability Interval Estimate (SPIES) method improved calibration of confidence intervals by preventing judges from ignoring alternative outcomes. It is possible that these results were achieved by making all participants behave as high AOT individuals are naturally inclined to, and make a conscious effort to obtain more relevant information during the estimation process.

Actively open-minded thinkers’ inclination to search and consider new information might also be observed in choice settings. Iyengar and Lepper (2000) found that large choice sets have a negative impact on consumer satisfaction. AOT might play a role in this effect. One possibility is that, by considering more information about choice attributes, high AOT individuals might experience a more severe choice overload than low AOT individuals. Another possibility is that high AOT individuals are less prone to choice overload, and that they demonstrate this by not being reluctant to collect more information in their evaluation of the choice set.

The objective of this research was to test whether cognitive styles predict persistence in information search and estimation performance. AOT was found to predict both. Other aspects of the construct, not related to information acquisition, should be investigated. These include the willingness to spend time on problems and to weigh information that contradicts one’s prior beliefs. Although these aspects of AOT were not central to our research question, they may demonstrate additional ways by which AOT affects predictions and decisions, such as enhancing Bayesian updating upon receiving information that is inconsistent with an initial belief.

Future research should also explore whether AOT affects retrieval of evidence from memory as it does the acquisition of new information. Research sometimes treats available (though not yet acquired) information and known information as the same. For example, accounts of some forms of “confirmation bias” describe the neglect, or underweighting, of information that contradicts a prior belief in the same way whether this information is already known to the judge or is provided by an external source as new evidence. However, not looking for new evidence can be seen as an act of omission, whereas discounting known information might be a more deliberate act. Investigating the role of AOT in reducing bias in the processing of these two types of information can shed light on possible differences between these two processes.

Another influential factor (which we did not examine) in forecasting is expertise. We elicited self-ratings of expertise in American football in Study 3, but the specific items we used for forecasting, i.e., games from an unknown past season, were unrelated to actual prior knowledge. Future research should test interactions between AOT and domain expertise in predicting estimation performance.

Baron (1993, 1994) has advocated the teaching of adaptive cognitive thinking styles, including AOT. Baron et al. (1986) conducted an 8-month course of decision making, consisting of hypothetical examples, practice exercises and feedback, aimed at instilling a consistent reduction in students’ susceptibility to bias. Perkins, Bushey, and Faraday (1986) conducted a similar course, in which they taught students to search for arguments on both sides of an issue and consider all relevant arguments. Both training programs improved thinking skills and processes. Our studies demonstrate a positive relationship between AOT and better forecasting. If these interventions can cause changes in forecasting skills, they should be used to train forecasters. Our search tasks could be used to assess such improvement.

This work builds on previous research on individual differences in prediction aptitude. Actively open-minded thinking (AOT) predicted persistence in information acquisition as well as accuracy and calibration of estimates. High AOT individuals invested more effort in acquiring information, which, in turn, improved the quality of their estimates. To the degree that this skill can be taught, it should be used to improve forecasting.

Barber, B. M., & Odean, T. (2001). Boys will be boys: Gender, overconfidence, and common stock investment. The Quarterly Journal of Economics, 116, 261–292. http://dx.doi.org/10.1162/003355301556400

Baron, J. (1993). Why teach thinking? An essay. Applied Psychology: An International Review, 42, 191–214.

Baron, J. (1994). The teaching of thinking. Thinking and Deciding (2nd ed., pp. 127–148). New York: Cambridge University Press.

Baron, J., Badgio, P. C., & Gaskins, I. W. (1986). Cognitive style and its improvement: A normative approach. In R. J. Sternberg (Ed.), Advances in the Psychology of Human Intelligence (pp. 173–220). London: Lawrence Erlbaum Associates.

Baron, J., Badgio, P. C., & Ritov, Y. (1991). Departures from optimal stopping in an anagram task. Journal of Mathematical Psychology, 35, 41–63.

Baron, R. M., & Kenny, D. A. (1986). The moderator-mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology, 51, 1173–1182.

Ben-Naim, E., Vazquez, F., & Redner, S. (2006). Parity and predictability of competitions. Journal of Quantitative Analysis in Sports, 2, 1–6. http://dx.doi.org/10.2202/1559-0410.1034

Blais, A. R., Thompson, M. M., & Baranski, J. V. (2005). Individual differences in decision processing and confidence judgments in comparative judgment tasks: The role of cognitive styles. Personality and Individual Differences, 38, 1701–1713. http://dx.doi.org/10.1016/j.paid.2004.11.004

Browne, G. J., & Pitts, M. G. (2004). Stopping rule use during information search in design problems. Organizational Behavior and Human Decision Processes, 95, 208–224. http://dx.doi.org/10.1016/j.obhdp.2004.05.001

Buehler, R., & Griffin, D. (2003). Planning, personality, and prediction: The role of future focus in optimistic time predictions. Organizational Behavior and Human Decision Processes, 92(1-2), 80–90. http://dx.doi.org/10.1016/S0749-5978(03)00089-X

Cacioppo, J. T., & Petty, R. E. (1982). The need for cognition. Journal of Personality and Social Psychology, 42, 116–131. http://dx.doi.org/10.1037/0022-3514.42.1.116

Cacioppo, J. T., Petty, R. E., & Kao, C. F. (1984). The efficient assessment of need for cognition. Journal of Personality Assessment, 48, 306–307.

Campbell, W. K., Goodie, A. S., & Foster, J. D. (2004). Narcissism, confidence, and risk attitude. Journal of Behavioral Decision Making, 17, 297–311. http://dx.doi.org/10.1002/bdm.475

Cohen, A. R. (1957). Need for cognition and order of communication as determinants of opinion change. In C. I. Hovland (Ed.), The Order of Presentation in Persuasion (pp. 79–97). New Haven: Yale University Press.

Cutler, B. L., & Wolfe, R. N. (1989). Self-monitoring and the association between confidence and accuracy. Journal of Research in Personality, 23, 410–420. http://dx.doi.org/10.1016/0092-6566(89)90011-1

Dijksterhuis, A., Bos, M. W., Nordgren, L. F., & Van Baaren, R. B. (2006). On making the right choice: The deliberation-without-attention effect. Science, 311, 1005–1007. http://dx.doi.org/10.1126/science.1121629

Duckworth, A. L., Peterson, C., Matthews, M. D., & Kelly, D. R. (2007). Grit: Perseverance and passion for long-term goals. Journal of Personality and Social Psychology, 92, 1087–1101. http://dx.doi.org/10.1037/0022-3514.92.6.1087

Duckworth, A. L., & Quinn, P. D. (2009). Development and validation of the Short Grit Scale (Grit-S). Journal of Personality Assessment, 91, 166–174. http://dx.doi.org/10.1080/00223890802634290

Dunwoody, P. T. (2009). Introduction to the special issue: Coherence and correspondence in judgment and decision making. Judgment and Decision Making, 4, 113–115.

Erev, I., Wallsten, T. S., & Budescu, D. V. (1994). Simultaneous over- and underconfidence: The role of error in judgment processes. Psychological Review, 101, 519–527.

Fischhoff, B., & Bar-Hillel, M. (1984). Focusing techniques: A shortcut to improving probability judgments? Organizational Behavior and Human Performance, 34, 175–194. http://dx.doi.org/10.1016/0030-5073(84)90002-3

Frederick, S. (2005). Cognitive reflection and decision making. The Journal of Economic Perspectives, 19, 25–42.

Gigerenzer, G., & Gaissmaier, W. (2011). Heuristic decision making. Annual Review of Psychology, 62, 451–482. http://dx.doi.org/10.1146/annurev-psych-120709-145346

Gigerenzer, G., Hoffrage, U., & Kleinbölting, H. (1991). Probabilistic mental models: A Brunswikian theory of confidence. Psychological Review, 98, 506–528.

Gosling, S. D., Rentfrow, P. J., & Swann, W. B. (2003). A very brief measure of the Big-Five personality domains. Journal of Research in Personality, 37, 504–528. http://dx.doi.org/10.1016/S0092-6566(03)00046-1

Haran, U., Moore, D. A., & Morewedge, C. K. (2010). A simple remedy for overprecision in judgment. Judgment and Decision Making, 5, 467–476.

Harvey, N. (1997). Confidence in judgment. Trends in Cognitive Sciences, 1, 78–82.

Henrion, M., & Fischhoff, B. (1986). Assessing uncertainty in physical constants. American Journal of Physics, 54, 791–798. http://dx.doi.org/10.1119/1.14447

Hertwig, R., & Pleskac, T. J. (2010). Decisions from experience: Why small samples? Cognition, 115, 225–237. http://dx.doi.org/10.1016/j.cognition.2009.12.009

Hirt, E. R., & Markman, K. D. (1995). Multiple explanation: A consider-an-alternative strategy for debiasing judgments. Journal of Personality and Social Psychology, 69, 1069–1086. http://dx.doi.org/10.1037/0022-3514.69.6.1069

Hoch, S. J. (1985). Counterfactual reasoning and accuracy in predicting personal events. Journal of Experimental Psychology: Learning, Memory, and Cognition, 11, 719. http://dx.doi.org/10.1037//0278-7393.11.1-4.719

Howarth, E., & Eysenck, H. J. (1968). Extraversion, arousal, and paired-associate recall. Journal of Experimental Research in Personality, 3, 114–116.

Iyengar, S. S., & Lepper, M. R. (2000). When choice is demotivating: Can one desire too much of a good thing? Journal of Personality and Social Psychology, 79, 995–1006.

Jain, K., Bearden, N. J., & Filipowicz, A. (2013). Do maximizers predict better than satisficers? Journal of Behavioral Decision Making, 26, 41–50. http://dx.doi.org/10.1002/bdm.763

Juslin, P., & Olsson, H. (1997). Thurstonian and Brunswikian origins of uncertainty in judgment: A sampling model of confidence in sensory discrimination. Psychological Review, 104, 344–366.

Juslin, P., Wennerholm, P., & Olsson, H. (1999). Format dependence in subjective probability calibration. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25, 1038–1052. http://dx.doi.org/10.1037/0278-7393.25.4.1038

Juslin, P., Winman, A., & Hansson, P. (2007). The naïve intuitive statistician: A naïve sampling model of intuitive confidence intervals. Psychological Review, 114, 678–703. http://dx.doi.org/10.1037/0033-295X.114.3.678

Kagan, J. (1965). Reflection-impulsivity and reading ability in primary grade children. Child Development, 36, 609–628.

Kardash, C. M., & Scholes, R. J. (1996). Effects of preexisting beliefs, epistemological beliefs, and need for cognition on interpretation of controversial issues. Journal of Educational Psychology, 88, 260–271.

Klayman, J., Soll, J. B., González-Vallejo, C., & Barlas, S. (1999). Overconfidence: It depends on how, what, and whom you ask. Organizational Behavior and Human Decision Processes, 79, 216–247. http://dx.doi.org/10.1006/obhd.1999.2847

Koehler, D. J., Brenner, L., & Griffin, D. (2002). The calibration of expert judgment: Heuristics and biases beyond the laboratory. In T. Gilovich, D. Griffin, & D. Kahneman (Eds.), Heuristics and Biases: The Psychology of Intuitive Judgment (pp. 686–715). New York: Cambridge University Press.

Koriat, A., Lichtenstein, S., & Fischhoff, B. (1980). Reasons for confidence. Journal of Experimental Psychology: Human Learning and Memory, 6, 107–118. http://dx.doi.org/10.1037/0278-7393.6.2.107

Krantz, J. H., & Dalal, R. S. (2000). Validity of web-based psychological research. In M. H. Birnbaum (Ed.), Psychological Experiments on the Internet (pp. 35–60). San Diego: Academic Press.

Lynn, R. (1961). Introversion-extraversion differences in judgments of time. Journal of Abnormal and Social Psychology, 63, 457–458.

Macpherson, R., & Stanovich, K. E. (2007). Cognitive ability, thinking dispositions, and instructional set as predictors of critical thinking. Learning and Individual Differences, 17, 115–127. http://dx.doi.org/10.1016/j.lindif.2007.05.003

McElroy, T., & Dowd, K. (2007). Susceptibility to anchoring effects: How openness-to-experience influences responses to anchoring cues. Judgment and Decision Making, 2, 48–53.

McElroy, T., & Seta, J. J. (2003). Framing effects: An analytic–holistic perspective. Journal of Experimental Social Psychology, 39, 610–617. http://dx.doi.org/10.1016/S0022-1031(03)00036-2

McKenzie, C. R. M. (1997). Underweighting alternatives and overconfidence. Organizational Behavior and Human Decision Processes, 71, 141–160. http://dx.doi.org/10.1006/obhd.1997.2716

McKenzie, C. R. M. (1998). Taking into account the strength of an alternative hypothesis. Journal of Experimental Psychology: Learning, Memory, and Cognition, 24, 771–792. http://dx.doi.org/10.1037/0278-7393.24.3.771

McKenzie, C. R. M., Liersch, M. J., & Yaniv, I. (2008). Overconfidence in interval estimates: What does expertise buy you? Organizational Behavior and Human Decision Processes, 107, 179–191. http://dx.doi.org/10.1016/j.obhdp.2008.02.007

Messer, S. (1970). Reflection-impulsivity: Stability and school failure. Journal of Educational Psychology, 61, 487–490.

Moore, D. A., & Healy, P. J. (2008). The trouble with overconfidence. Psychological Review, 115, 502–517. http://dx.doi.org/10.1037/0033-295X.115.2.502

Morgan, M. G., & Keith, D. W. (2008). Improving the way we think about projecting future energy use and emissions of carbon dioxide. Climatic Change, 90, 189–215. http://dx.doi.org/10.1007/s10584-008-9458-1

Nenkov, G. Y., Morrin, M., Ward, A., Hulland, J., & Schwartz, B. (2008). A short form of the Maximization Scale: Factor structure, reliability and validity studies. Judgment and Decision Making, 3, 371–388.

Osborne, J. W. (1972). Short- and long-term memory as a function of individual differences in arousal. Perceptual and Motor Skills, 34, 587–593.

Pallier, G., Wilkinson, R., Danthiir, V., Kleitman, S., Knezevic, G., Stankov, L., & Roberts, R. D. (2002). The role of individual differences in the accuracy of confidence judgments. The Journal of General Psychology, 129, 257–299. http://dx.doi.org/10.1080/00221300209602099

Paolacci, G., Chandler, J., & Ipeirotis, P. G. (2010). Running experiments on Amazon Mechanical Turk. Judgment and Decision Making, 5, 411–419.

Parker, A. M., Bruine de Bruin, W., & Fischhoff, B. (2007). Maximizers versus satisficers: Decision-making styles, competence, and outcomes. Judgment and Decision Making, 2, 342–350.

Perkins, D., Bushey, B., & Faraday, M. (1986). Learning to reason. Final report, Grant No. NIE-G-83-0028, Project No. 030717. Harvard Graduate School of Education.

Sa, W. C., West, R. F., & Stanovich, K. E. (1999). The domain specificity and generality of belief bias: Searching for a generalizable critical thinking skill. Journal of Educational Psychology, 91, 497–510.

Schaefer, P. S., Williams, C. C., Goodie, A. S., & Campbell, W. K. (2004). Overconfidence and the Big Five. Journal of Research in Personality, 38, 473–480. http://dx.doi.org/10.1016/j.jrp.2003.09.010

Simon, H. A. (1978). Rationality as process and as product of thought. The American Economic Review, 68, 1–16.

Soll, J. B. (1996). Determinants of overconfidence and miscalibration: The roles of random error and ecological structure. Organizational Behavior and Human Decision Processes, 65, 117–137.

Soll, J. B., & Klayman, J. (2004). Overconfidence in interval estimates. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30, 299–314. http://dx.doi.org/10.1037/0278-7393.30.2.299

Speirs-Bridge, A., Fidler, F., McBride, M., Flander, L., Cumming, G., & Burgman, M. (2010). Reducing overconfidence in the interval judgments of experts. Risk Analysis, 30, 512–523. http://dx.doi.org/10.1111/j.1539-6924.2009.01337.x

Stanovich, K. E., & West, R. F. (1998). Individual differences in rational thought. Journal of Experimental Psychology: General, 127, 161–188. http://dx.doi.org/10.1037//0096-3445.127.2.161

Stanovich, K. E., & West, R. F. (2007). Natural myside bias is independent of cognitive ability. Thinking & Reasoning, 13, 225–247. http://dx.doi.org/10.1080/13546780600780796

Taylor, D., & McFatter, R. (2003). Cognitive performance after sleep deprivation: Does personality make a difference? Personality and Individual Differences, 34, 1179–1193.

Van Rijsoort, S., Emmelkamp, P., & Vervaeke, G. (1999). The Penn State Worry Questionnaire and the Worry Domains Questionnaire: Structure, reliability and validity. Clinical Psychology and Psychotherapy, 6, 297–307.

Weiss Barstis, S., & Ford, L. H. J. (1977). Reflection-impulsivity, conservation, and the development of ability to control cognitive tempo. Child Development, 48, 953–959.

Wolfe, R. N., & Grosch, J. W. (1990). Personality correlates of confidence in one’s decisions. Journal of Personality, 58, 515–534. http://dx.doi.org/10.1111/j.1467-6494.1990.tb00241.x

Please rate your agreement or disagreement with each statement on a 1 to 7 scale, where 1 = Completely Disagree, 4 = Neutral, and 7 = Completely Agree. (The last four items are reverse coded.)

1. Allowing oneself to be convinced by an opposing argument is a sign of good character.

2. People should take into consideration evidence that goes against their beliefs.

3. People should revise their beliefs in response to new information or evidence.

4. Changing your mind is a sign of weakness.

5. Intuition is the best guide in making decisions.

6. It is important to persevere in your beliefs even when evidence is brought to bear against them.

7. One should disregard evidence that conflicts with one’s established beliefs.
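Scoring the scale with the last four items reverse coded can be sketched as follows. The sketch assumes that a reverse-coded response r on the 1 to 7 scale becomes 8 - r and that the scale score is the mean of the seven recoded items; whether the original analysis used a mean or a sum is not stated here, and the function name and example responses are hypothetical.

```python
REVERSED_ITEMS = {4, 5, 6, 7}   # 1-based item numbers that are reverse coded
SCALE_MIN, SCALE_MAX = 1, 7

def score_aot(responses):
    """Mean of the seven items after reverse coding items 4 to 7."""
    if len(responses) != 7:
        raise ValueError("expected exactly 7 responses")
    recoded = []
    for item, r in enumerate(responses, start=1):
        if not SCALE_MIN <= r <= SCALE_MAX:
            raise ValueError(f"item {item}: response {r} is outside 1-7")
        # On a 1-7 scale, reverse coding maps 1 to 7, 2 to 6, and so on.
        recoded.append(SCALE_MIN + SCALE_MAX - r if item in REVERSED_ITEMS else r)
    return sum(recoded) / len(recoded)

# Hypothetical respondent who agrees with items 1-3 and disagrees with items 4-7.
example = [6, 7, 6, 2, 3, 2, 1]
aot_score = score_aot(example)
print(round(aot_score, 2))
```

With this coding, higher scores indicate more actively open-minded thinking on every item.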
