Keywords: explanation, alternative consideration, scenario planning, debiasing.


| Variable | N | Mean | S.D. | Con 1 | Con 2 | WTA | Expl. 1 Detail | Expl. 2 Detail | Question Breadth | Total Questions | Expl. 1 Quality |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Con 1 | 135 | 8.41 | 2.75 | -- | | | | | | | |
| Con 2 | 135 | 7.44 | 2.81 | .40* | -- | | | | | | |
| WTA | 204 | 25.60 | 8.89 | .42* | .24* | -- | | | | | |
| Expl. 1 Detail | 204 | 1.48 | .79 | -.07 | -.05 | -.00 | -- | | | | |
| Expl. 2 Detail | 204 | .87 | .80 | -.12 | -.05 | -.23* | .29* | -- | | | |
| Question Breadth | 204 | 1.77 | .85 | -.11 | -.11 | -.16* | .30* | .37* | -- | | |
| Total Questions | 204 | 2.73 | 1.39 | -.19* | -.11 | -.26* | .38* | .28* | .64* | -- | |
| Expl. 1 Quality | 204 | 2.07 | 1.23 | -.09 | .03 | -.08 | .70* | .33* | .31* | .34* | -- |
| Expl. 2 Quality | 204 | 1.26 | 1.27 | -.13 | -.01 | -.22* | .23* | .85* | .36* | .27* | .40* |

| Variable | N | Mean | S.D. | Con 1 | Con 2 | WTA | Expl. 1 Detail | Expl. 2 Detail | Question Breadth | Total Questions | Expl. 1 Quality |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Con 1 | 161 | 8.89 | 2.11 | -- | | | | | | | |
| Con 2 | 161 | 7.95 | 2.35 | .24* | -- | | | | | | |
| WTA | 218 | 24.58 | 8.02 | .16* | .25* | -- | | | | | |
| Expl. 1 Detail | 219 | 1.58 | .78 | .09 | -.03 | .03 | -- | | | | |
| Expl. 2 Detail | 219 | 1.12 | .90 | .10 | .06 | -.12 | .29* | -- | | | |
| Question Breadth | 219 | 1.90 | .83 | -.02 | .04 | -.19* | .16* | .28* | -- | | |
| Total Questions | 219 | 3.17 | 1.74 | .04 | -.02 | -.20* | .23* | .16* | .65* | -- | |
| Expl. 1 Quality | 218 | 2.6 | 1.24 | .07 | .10 | .05 | .64* | .30* | .17* | .24* | -- |
| Expl. 2 Quality | 218 | 2.0 | 1.54 | .07 | .10 | -.11 | .17* | .82* | .29* | .13 | .36* |
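Neither correlation table states what the asterisk denotes. Assuming it marks a two-tailed p < .05 (an assumption, not stated in the source), a reader can sanity-check an individual entry against its sample size. The sketch below uses the Fisher z approximation rather than the exact t test, and the function name `correlation_p_value` is hypothetical. Because the tables report N per variable rather than per pair, the smaller of the two row Ns is used as a stand-in for the pairwise N.

```python
import math


def correlation_p_value(r: float, n: int) -> float:
    """Approximate two-tailed p-value for a Pearson correlation r based on n pairs.

    Uses the Fisher z transform: z = atanh(r) * sqrt(n - 3) is compared with a
    standard normal distribution. This is a large-sample approximation and not
    necessarily the test used in the original analysis.
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = math.atanh(r) * math.sqrt(n - 3)
    # 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)) for the standard normal CDF Phi.
    return math.erfc(abs(z) / math.sqrt(2.0))


if __name__ == "__main__":
    # (label, r, n); n is the smaller N of the two variables, an assumption
    # because the tables do not report pairwise sample sizes.
    entries = [
        ("First table, Expl. 2 Detail x WTA", -0.23, 204),
        ("First table, Expl. 2 Detail x Con 1", -0.12, 135),
        ("Second table, WTA x Con 1", 0.16, 161),
        ("Second table, Expl. 2 Quality x Total Questions", 0.13, 218),
    ]
    for label, r, n in entries:
        p = correlation_p_value(r, n)
        marker = "*" if p < 0.05 else ""
        print(f"{label}: r = {r:+.2f}, n = {n}, p = {p:.3f} {marker}")
```

For these four entries the approximate p-values fall on the same side of .05 as the asterisks in the tables (starred for -.23 and .16, unstarred for -.12 and .13). Because the reported correlations are rounded to two decimals, entries close to the threshold may not reproduce exactly.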

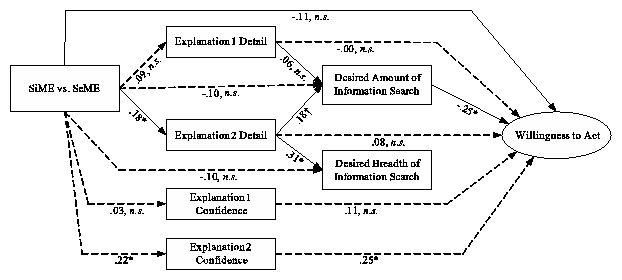

| Relationship | Effect | Study 1 | Study 2 |

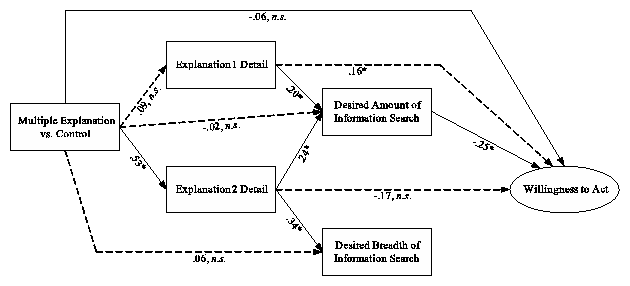

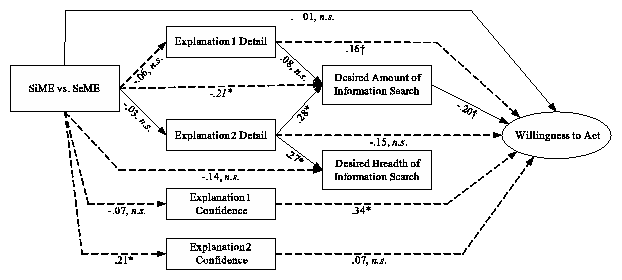

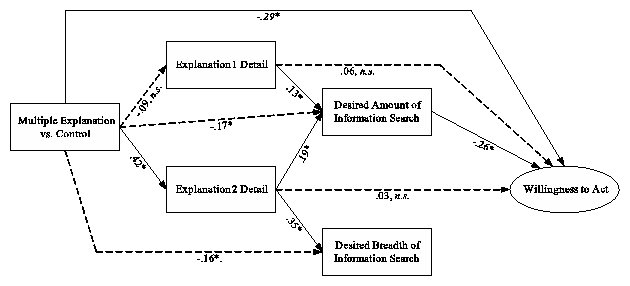

| Multiple Expl. Þ WTA | Total | (-.31, -.04)* | (-.39, -.12)* |

| Direct | (-.23, .10) n.s. | (-.42, -.14)* | |

| Indirect | (-.22, .02) n.s. | (-.05, .11) n.s. | |

| Multiple Expl. Þ Desired Breadth of Information Search | Total | (.11, .36)* | (-.13, .11) n.s. |

| Direct | (-.08, .19) n.s. | (-.29, -.02)* | |

| Indirect | (.10, .27)* | (.09, .22)* | |

| Multiple Expl. Þ Desired Amount of Information Search | Total | (-.03, .27) n.s. | (-.23, .04) n.s. |

| Direct | (-.18, .13) n.s. | (-.30, -.03)* | |

| Indirect | (.05, .26)* | (-.02, .14) n.s. | |
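The mediation table reports total, direct, and indirect effects as intervals; throughout, * appears to mark intervals that exclude zero and n.s. those that include it. This section does not say how the intervals were computed or which mediator was used. One common approach for an indirect effect is a percentile bootstrap of the product a × b from two OLS regressions, sketched below on simulated data. The function names, the simulated variables `x` (a 0/1 condition indicator), `m` (a mediator), and `y` (an outcome), the 95% level, and the number of resamples are all illustrative assumptions, not the authors' analysis.

```python
import random
from typing import List, Sequence, Tuple


def _centered_cross(u: Sequence[float], v: Sequence[float]) -> float:
    """Sum of cross-products of deviations from the respective means."""
    mu = sum(u) / len(u)
    mv = sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))


def mediation_effects(x, m, y) -> Tuple[float, float, float]:
    """Return (total, direct, indirect) effects of x on y via mediator m (OLS).

    a  = slope of m on x
    b  = slope of y on m, controlling for x
    c' = slope of y on x, controlling for m (direct effect)
    c  = slope of y on x alone (total effect); with OLS, c = c' + a * b.
    """
    sxx = _centered_cross(x, x)
    smm = _centered_cross(m, m)
    sxm = _centered_cross(x, m)
    sxy = _centered_cross(x, y)
    smy = _centered_cross(m, y)
    det = sxx * smm - sxm ** 2
    a = sxm / sxx
    b = (sxx * smy - sxm * sxy) / det
    direct = (smm * sxy - sxm * smy) / det
    total = sxy / sxx
    return total, direct, a * b


def bootstrap_indirect(x, m, y, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for the indirect effect a * b."""
    rng = random.Random(seed)
    n = len(x)
    draws: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        try:
            _, _, ind = mediation_effects(
                [x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]
            )
        except ZeroDivisionError:
            continue  # degenerate resample (e.g., no variance in x); skip it
        draws.append(ind)
    if not draws:
        raise ValueError("no usable bootstrap resamples")
    draws.sort()
    k = len(draws)
    lo = draws[int((1 - level) / 2 * k)]
    hi = draws[min(k - 1, int((1 + level) / 2 * k))]
    return lo, hi


if __name__ == "__main__":
    # Simulated data; effect sizes are arbitrary and not taken from the study.
    sim = random.Random(42)
    x = [float(i % 2) for i in range(200)]          # 0/1 condition indicator
    m = [0.5 * xi + sim.gauss(0, 1) for xi in x]    # mediator
    y = [0.4 * mi + 0.1 * xi + sim.gauss(0, 1) for xi, mi in zip(x, m)]
    total, direct, indirect = mediation_effects(x, m, y)
    lo, hi = bootstrap_indirect(x, m, y)
    flag = "*" if lo > 0 or hi < 0 else "n.s."  # interval excludes zero -> "*"
    print(f"total={total:.3f}  direct={direct:.3f}  indirect={indirect:.3f}")
    print(f"indirect-effect interval: ({lo:.2f}, {hi:.2f}) {flag}")
```

Because OLS decomposes the total effect exactly into direct plus indirect parts, a total effect can be non-significant while its indirect component is significant, as in several rows of the table. Bias-corrected bootstrap intervals are a common alternative to the plain percentile interval used here.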